I am currently having an issue where I cannot search for UUIDs in my logs. For instance, I have a field named "log" that contains a full log line, for example:

    "log": "time=\"2022-10-10T07:46:00Z\" level=info msg=\"message to endpoint (outgoing)\" message=\"{8503fb5a-3899-4305-8480-6ddc0f5df296 2022-10-10T09:45:59+02:00}\"\n",

I want to find this log in Elasticsearch, so via Postman I send this query:

    {
      "query": {
        "match": {
          "log": {
            "analyzer": "whitespace",
            "query": "8503fb5a-3899-4305-8480-6ddc0f5df296"
          }
        }
      },
      "size": 50,
      "from": 0
    }

As a response I get:

    {
      "took": 930,
      "timed_out": false,
      "num_reduce_phases": 2,
      "_shards": {
        "total": 581,
        "successful": 581,
        "skipped": 0,
        "failed": 0
      },
      "hits": {
        "total": {
          "value": 0,
          "relation": "eq"
        },
        "max_score": null,
        "hits": []
      }
    }

However, when I search for "8503fb5a" alone, I get the expected results. So the dashes still seem to cause problems, but I thought using the whitespace analyzer would fix this. Am I doing something wrong?

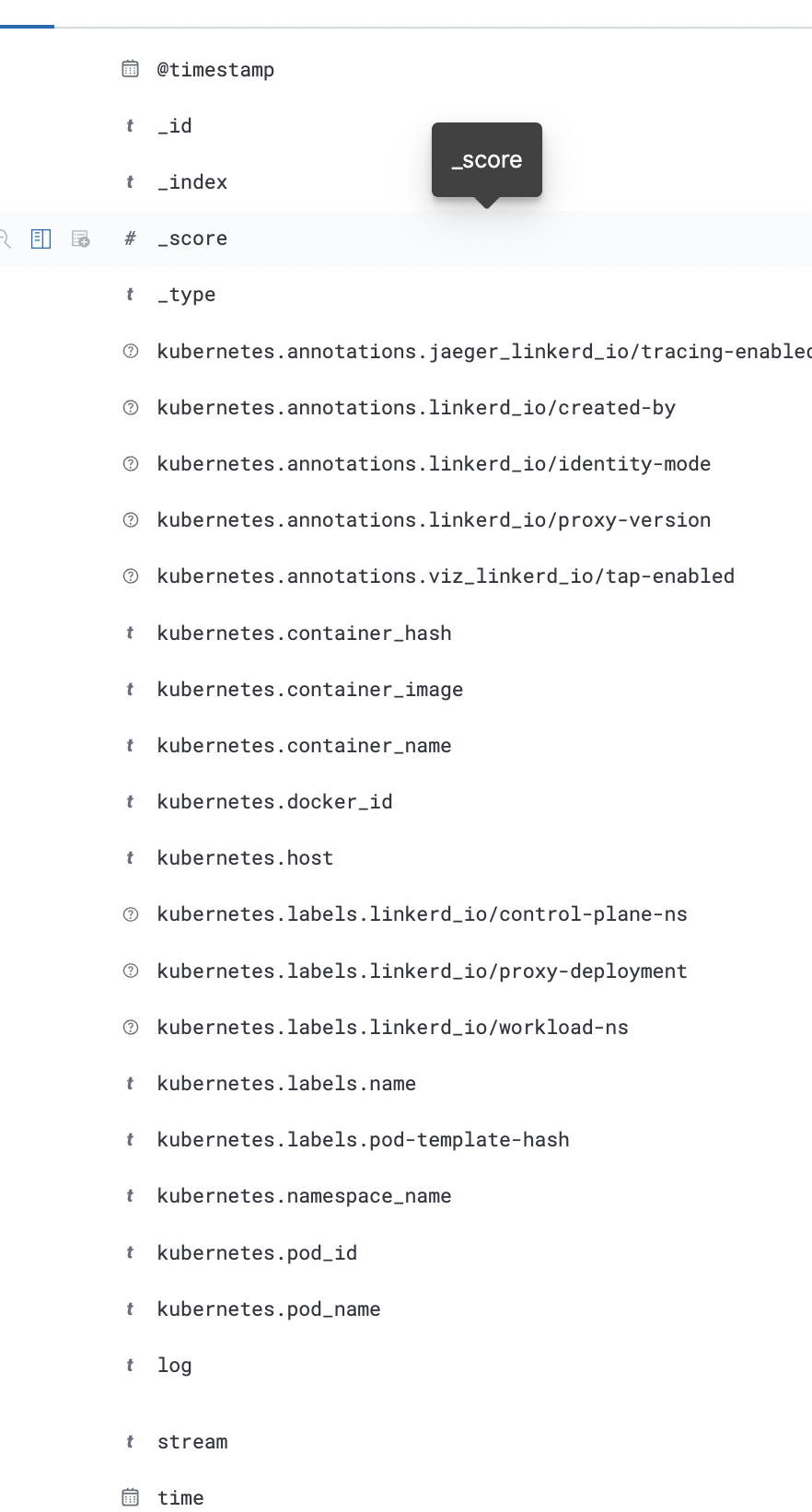

These are the fields I have.

Answer:

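You don't need the whitespace analyzer. The `analyzer` parameter of a `match` query only changes how the query text is analyzed, not how the `log` field was analyzed at index time. Assuming `log` is a `text` field with the default `standard` analyzer, the UUID was indexed as several separate tokens, because the standard tokenizer splits on hyphens. With the whitespace analyzer your query becomes one token containing the whole UUID, which never occurs in the index, so nothing matches. Searching for "8503fb5a" alone works because that is one of the indexed tokens.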
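To check how the `log` field tokenizes the UUID, you can call the `_analyze` API. A sketch, where `my-index` is a placeholder for your own index name, sent as `GET /my-index/_analyze` (POST also works):

```json
{
  "field": "log",
  "text": "8503fb5a-3899-4305-8480-6ddc0f5df296"
}
```

If `log` uses the default `standard` analyzer, the response should list five tokens: `8503fb5a`, `3899`, `4305`, `8480` and `6ddc0f5df296`. Replacing `"field": "log"` with `"analyzer": "whitespace"` returns the whole UUID as a single token, which is why the whitespace query found nothing.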

There are two ways to search for the entire UUID.

First, you can use a `match` query with `operator` set to `and`, so that all of the UUID's tokens must be present:

    {
      "query": {
        "match": {
          "log": {
            "query": "8503fb5a-3899-4305-8480-6ddc0f5df296",
            "operator": "and"
          }
        }
      }
    }
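For intuition, assuming `log` uses the standard analyzer, the `and` query behaves roughly like a `bool` query that requires each token separately. A hand-written sketch of that equivalent (`term` queries are not analyzed, so each token is given exactly as it was indexed):

```json
{
  "query": {
    "bool": {
      "must": [
        { "term": { "log": "8503fb5a" } },
        { "term": { "log": "3899" } },
        { "term": { "log": "4305" } },
        { "term": { "log": "8480" } },
        { "term": { "log": "6ddc0f5df296" } }
      ]
    }
  }
}
```

None of these clauses constrains the order or position of the tokens, so a log that happens to contain all five fragments in different places would also match. That is the trade-off against `match_phrase`.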

Second, you can use a `match_phrase` query, which only matches when the tokens appear next to each other and in the same order, i.e. the UUID exactly as written:

    {
      "query": {
        "match_phrase": {
          "log": "8503fb5a-3899-4305-8480-6ddc0f5df296"
        }
      }
    }