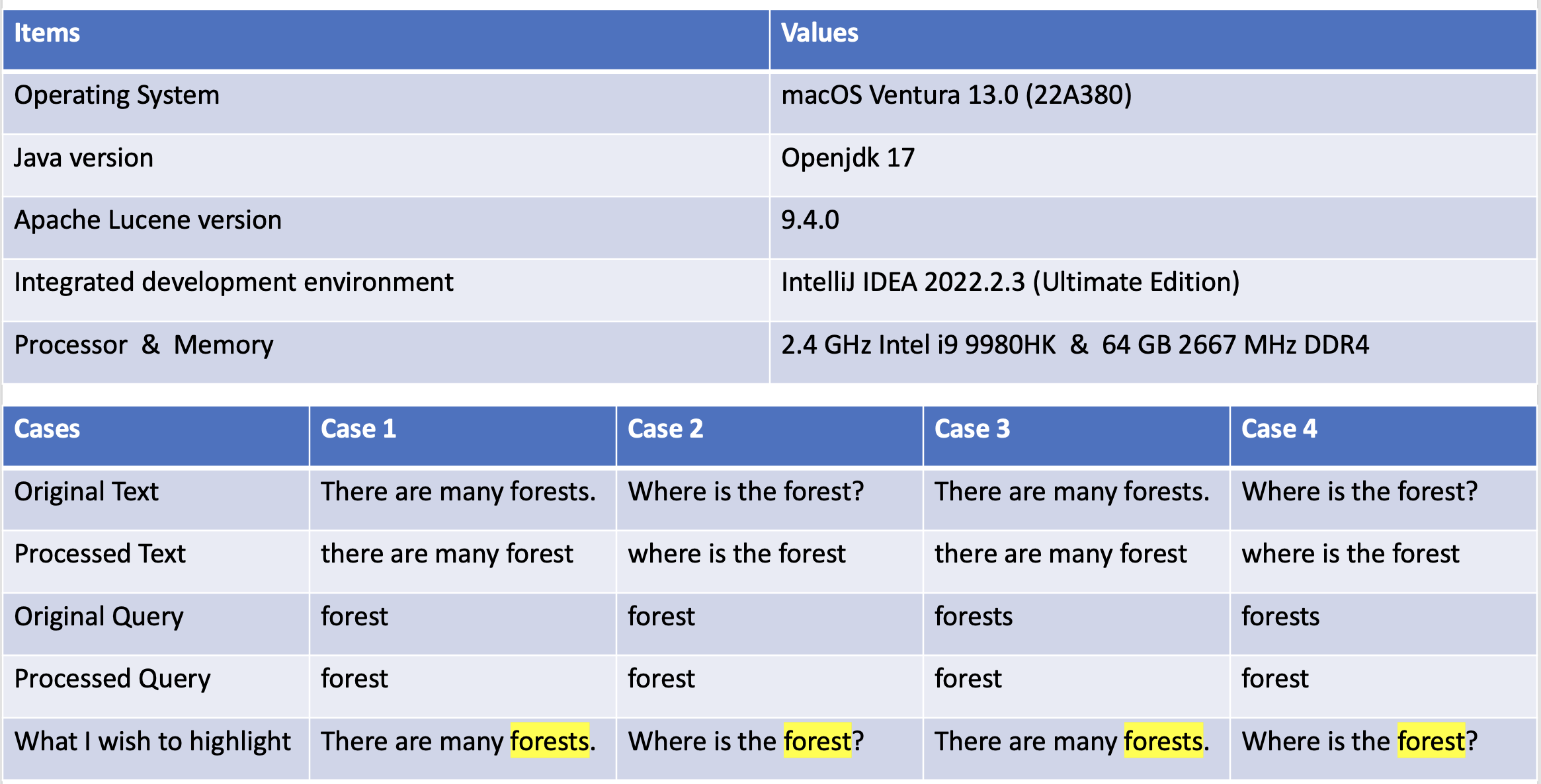

I am building an index for about 1000 text files (containing titles, abstracts, paragraphs, etc) using Apache Lucene. I've already succeeded in building indexes and querying for words that match the text files. However, when I query for the word "forest" for instance, it doesn't return any results containing "forests".

Thus, I use Porter Stemming to get stemmed text and build the index for the derived text (not the original one). Also, I stem the words in the query using Porter. Here comes the problem. If I use the Highlighter in Lucene, the highlighted words that will be presented to users are the stemmed ones like "abund" which is expected to be the original word "abundant".

// FIXME: using Porter, however the text has already changed when building index

try {

String abstractString = TermPreprocessing.getStemmedTerms(xPath.evaluate(ABSTRACT_XPATH, XMLDocument).trim());

// Here, I use [org.tartarus.snowball.ext.PorterStemmer] to get stemmed the text

// the [TextField] is not the original text

System.out.println(abstractString);

Field abstractField = new TextField("abstract", abstractString, Field.Store.YES);

luceneDocument.add(abstractField);

} catch (Exception exception) {

exception.printStackTrace();

}

// I build the index for original text, too

I build an index for both the original and the stemmed text, which, of course, nearly double the size of the index files. Still, I fail to highlight the correct words, since I query for the stemmed text, but I would like to highlight the original one.

// This is the way I query, stemming the words first

public static void main(String[] args) throws IOException, ParseException {

// ... obtaining the directory

IndexReader indexReader = DirectoryReader.open(fsDirectory);

IndexSearcher indexSearcher = new IndexSearcher(indexReader);

StandardAnalyzer standardAnalyzer = new StandardAnalyzer();

MultiFieldQueryParser multiFieldQueryParser = new MultiFieldQueryParser(MULTI_FIELDS, standardAnalyzer);

Query query = multiFieldQueryParser.parse(getStemmedTerms(EXPRESSION)); // Here, I process the query string

TopDocs topDocs = indexSearcher.search(query, 20);

for (ScoreDoc scoreDoc : topDocs.scoreDocs) { ... }

I've gone through some solutions on Google, however, due to Lucene version issues, they might not work. How to build index correctly if I use Porter Stemmer? Thank you very much.

CodePudding user response:

An alternative approach is to use a custom analyzer, and then use the SnowballPorterFilterFactory for both index creation and for queries (and for highlighting).

So, for example, as a re-usable class, using Lucene 9.4.0:

import java.io.IOException;

import java.util.HashMap;

import java.util.Map;

import org.apache.lucene.analysis.Analyzer;

import org.apache.lucene.analysis.miscellaneous.ASCIIFoldingFilterFactory;

import org.apache.lucene.analysis.core.LowerCaseFilterFactory;

import org.apache.lucene.analysis.custom.CustomAnalyzer;

import org.apache.lucene.analysis.en.EnglishPossessiveFilterFactory;

import org.apache.lucene.analysis.core.StopFilterFactory;

import org.apache.lucene.analysis.snowball.SnowballPorterFilterFactory;

import org.apache.lucene.analysis.miscellaneous.KeywordRepeatFilterFactory;

import org.apache.lucene.analysis.miscellaneous.RemoveDuplicatesTokenFilterFactory;

import org.apache.lucene.analysis.standard.StandardTokenizerFactory;

class MyAnalyzer {

public Analyzer get() throws IOException {

Map<String, String> snowballParams = new HashMap<>();

snowballParams.put("language", "English");

Map<String, String> stopMap = new HashMap<>();

stopMap.put("words", "stopwords.txt");

stopMap.put("format", "wordset");

return CustomAnalyzer.builder()

.withTokenizer(StandardTokenizerFactory.NAME)

.addTokenFilter(LowerCaseFilterFactory.NAME)

.addTokenFilter(StopFilterFactory.NAME, stopMap)

.addTokenFilter(ASCIIFoldingFilterFactory.NAME)

.addTokenFilter(EnglishPossessiveFilterFactory.NAME)

.addTokenFilter(KeywordRepeatFilterFactory.NAME)

// here is the Porter stemmer step:

.addTokenFilter(SnowballPorterFilterFactory.NAME, snowballParams)

.addTokenFilter(RemoveDuplicatesTokenFilterFactory.NAME)

.build();

}

}

At query time (and when highlighting), use the same custom analyzer.

You can obviously change which of the other filters you add/remove in the analyzer, to meet your specific needs. You do not necessarily need to use all the ones I have included in the above example.

For highlighting, I use a custom highlighter based on Lucene's Highlighter, into which I also pass my custom analyzer.

This highlights the matched content of the original text - and not just the stem.