So, I have the following two dataframes:

DF1 looks like following:

--------- -----------

| Name | Money |

--------- -----------

| A | 50.3 |

| B | 26.9 |

| C | 11.4 |

| A | 35.8 |

| B | 59.2 |

| A | 90.8 |

| C | 23.5 |

| D | 23.5 |

| D | 54.6 |

| E | 78.0 |

| A | 12.3 |

| F | 20.3 |

| A | 57.1 |

--------- -----------

DF2 looks like following (list of unique names):

---------

| Name |

---------

| A |

| C |

| D |

---------

What kind of join will give me the following (only keeping A, C and D that appear in DF2):

--------- -----------

| Name | Money |

--------- -----------

| A | 50.3 |

| C | 11.4 |

| A | 35.8 |

| A | 90.8 |

| C | 23.5 |

| D | 23.5 |

| D | 54.6 |

| A | 12.3 |

| A | 57.1 |

--------- -----------

CodePudding user response:

Select * from df1

where df1.name in (

Select name from df2)

Sorry if dirty, written on my phone.

CodePudding user response:

df = df1.alias("t0").join(

df2.alias("t1"),

on=f.col("t0.Name") == f.col("t1.Name"),

how='left'

).select(

f.col("t0.Name"),

f.col("t0.Money")

)

CodePudding user response:

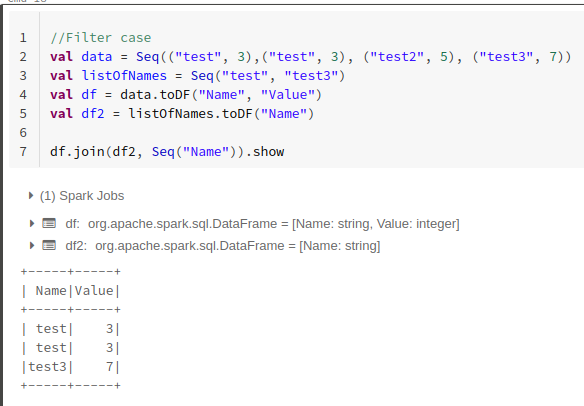

imo simple inner join will be ok here, it is going to filter records from df1 with names which do not exists in df2 which is what you expect

df1.join(df2,d1("name") === d2("name"),"inner")

In the title you asked about duplicates, duplicated record are going to stay there after inner join, if you want to remove them you can use distinct

you can find sample inner join below (inner is default type of join in Spark and that's why i just could skip 3rd parameter here)