I am quite new to ADF (Azure Data Factory), so I am asking for suggestions.

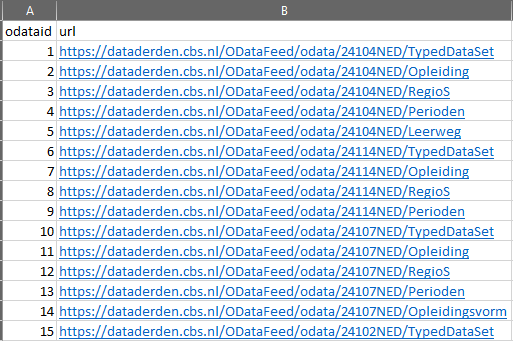

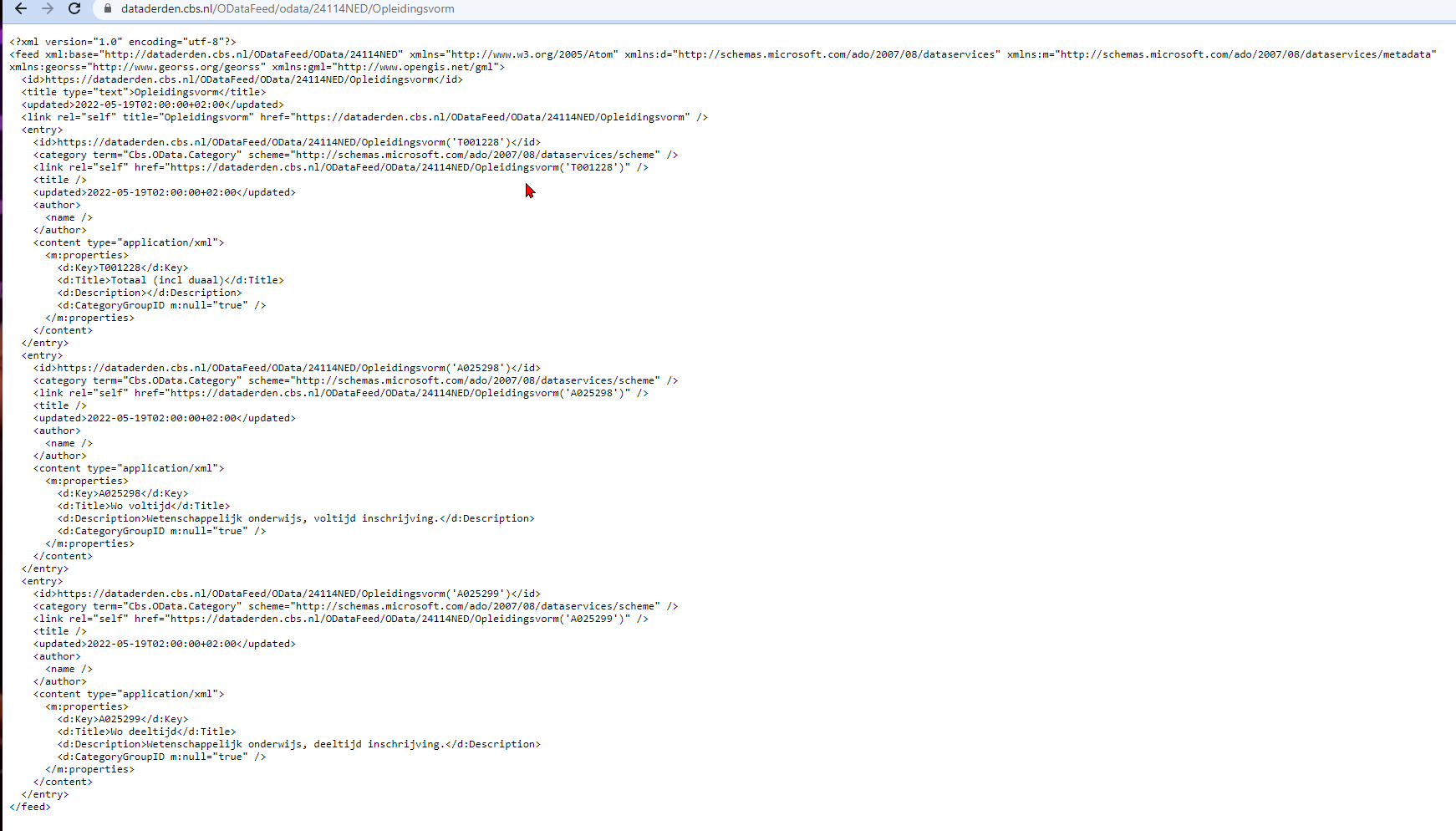

The use case: I have a CSV file that contains a unique ID and a URL on each row (see the first image). I would like to use this file to export the values from the various URLs. The second image shows an example of the data returned by one URL.

Currently I take each URL and enter it manually as the source of an ADF Copy activity to export the data to a SQL DB. This is very time-consuming.

How can I create an ADF pipeline that uses the CSV file as a source, so that a Copy activity takes the URL from each row and copies the data to Azure SQL DB? Do I need to add a Get Metadata activity, for example? If so, how?

Many thanks.

CodePudding user response:

Use a Lookup activity to read all the rows, then a ForEach loop to iterate over them one at a time. Inside the ForEach, use a Copy activity to copy each response to the sink.
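As a rough sketch of the first step, a Lookup activity that returns every row of the CSV might look like the JSON below. The activity name `LookupUrls` and the dataset name `UrlListCsv` are hypothetical, and the dataset is assumed to be a delimited-text dataset pointing at the CSV file.

```json
{
    "name": "LookupUrls",
    "description": "Hypothetical name. Reads all rows of the CSV that holds the IDs and URLs.",
    "type": "Lookup",
    "typeProperties": {
        "source": {
            "type": "DelimitedTextSource"
        },
        "dataset": {
            "referenceName": "UrlListCsv",
            "type": "DatasetReference"
        },
        "firstRowOnly": false
    }
}
```

Setting `firstRowOnly` to `false` is what makes the activity return all rows as an array rather than only the first one. Note that the Lookup activity has an output size limit (5000 rows), so a very large URL list may need a different approach.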

CodePudding user response:

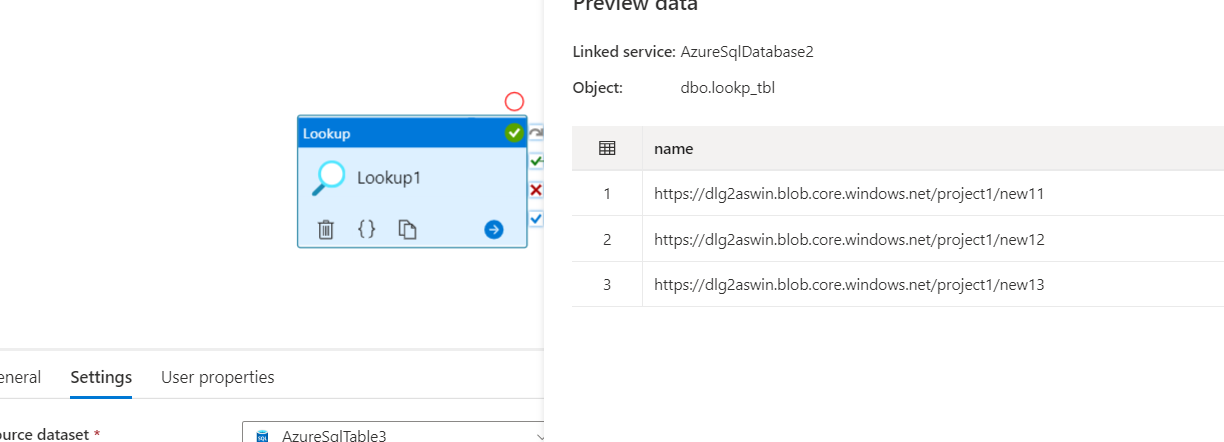

To copy the XML response of a URL, we can use an HTTP linked service with an XML dataset. As @BeingReal said, a Lookup activity should be used to read the table that contains all the URLs, and inside a ForEach activity, a Copy activity is added with HTTP as the source and a sink chosen as required. I reproduced this in my environment; the steps are below.

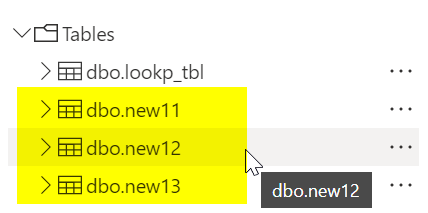

- A lookup table with 3 URLs is used, as shown in the image below.

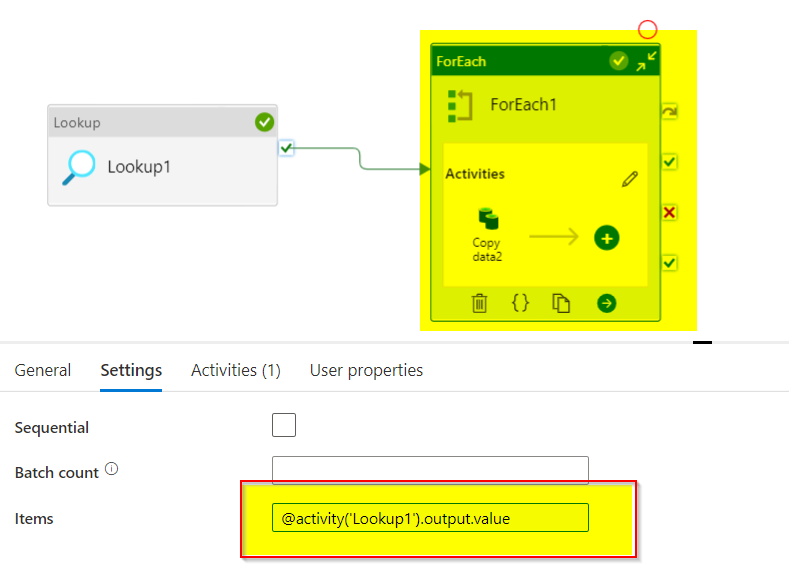

A ForEach activity is added after the Lookup activity.
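In pipeline JSON, chaining the two activities could look roughly like the sketch below. The names `ForEachUrl` and `LookupUrls` are hypothetical; the `items` expression assumes the Lookup activity returns all rows (first-row-only disabled), in which case its rows are exposed under `output.value`.

```json
{
    "name": "ForEachUrl",
    "description": "Hypothetical name. Iterates over the rows returned by the Lookup activity.",
    "type": "ForEach",
    "dependsOn": [
        {
            "activity": "LookupUrls",
            "dependencyConditions": [ "Succeeded" ]
        }
    ],
    "typeProperties": {
        "items": {
            "value": "@activity('LookupUrls').output.value",
            "type": "Expression"
        },
        "isSequential": true,
        "activities": []
    }
}
```

The Copy activity goes inside the `activities` array, which is left empty here only to keep the sketch short. With `isSequential` set to `false`, the iterations run in parallel instead of one after another.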

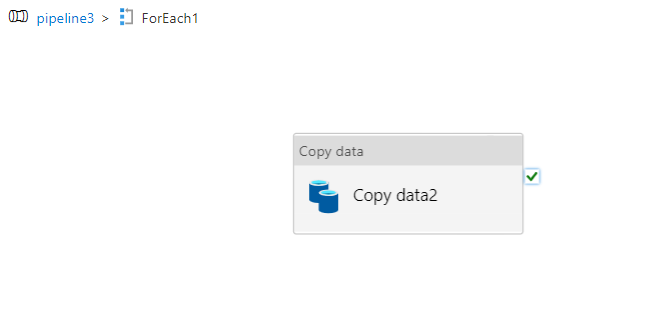

Inside the ForEach, a Copy activity is added. Its source uses the HTTP linked service.

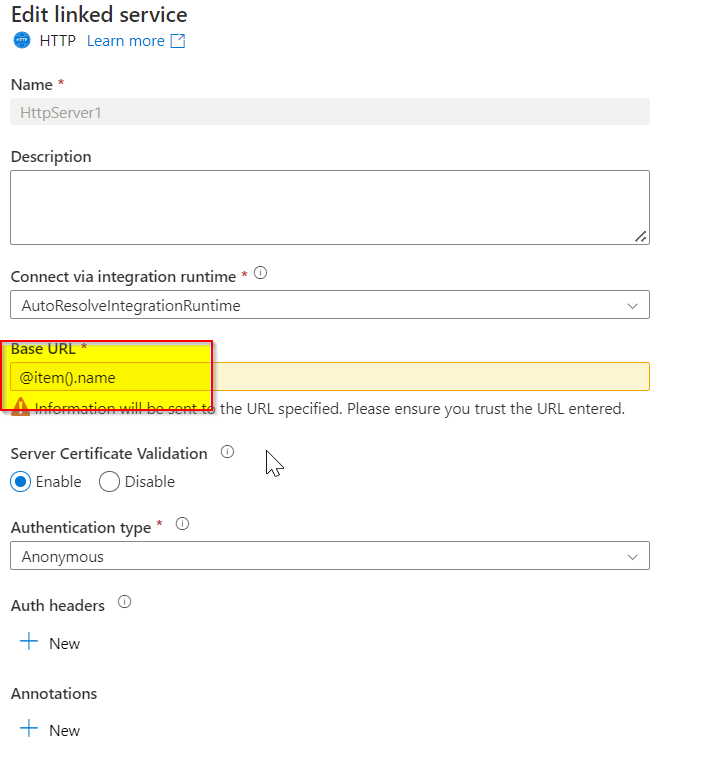

In the HTTP linked service, the base URL is given as `@item().name`. Here `name` is the column that stores the URLs in the lookup table; replace `name` with the column name used in your own lookup table.
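One way to get the per-row value into the base URL is to parameterize the linked service and pass the value down through a dataset parameter. The sketch below assumes that approach; the names `HttpByUrl`, `HttpXml`, `baseUrl`, and `url` are hypothetical, and anonymous authentication is an assumption about the target endpoints.

```json
{
    "name": "HttpByUrl",
    "properties": {
        "description": "Hypothetical name. HTTP linked service whose base URL is supplied at run time.",
        "type": "HttpServer",
        "parameters": {
            "baseUrl": { "type": "String" }
        },
        "typeProperties": {
            "url": "@{linkedService().baseUrl}",
            "authenticationType": "Anonymous"
        }
    }
}
```

An XML dataset on top of it could then forward its own parameter to the linked service:

```json
{
    "name": "HttpXml",
    "properties": {
        "description": "Hypothetical name. XML dataset that forwards its url parameter to the linked service.",
        "type": "Xml",
        "linkedServiceName": {
            "referenceName": "HttpByUrl",
            "type": "LinkedServiceReference",
            "parameters": {
                "baseUrl": {
                    "value": "@dataset().url",
                    "type": "Expression"
                }
            }
        },
        "parameters": {
            "url": { "type": "String" }
        },
        "typeProperties": {
            "location": { "type": "HttpServerLocation" }
        }
    }
}
```

With this chain in place, the Copy activity inside the ForEach supplies `@item().name` as the value of the dataset's `url` parameter.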

In the sink, an Azure SQL database is used (use whichever sink your requirement calls for). The data is copied to the SQL database.
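Put together, the Copy activity inside the ForEach might look something like this sketch. It assumes a parameterized XML-over-HTTP dataset named `HttpXml` with a `url` parameter and an Azure SQL dataset named `SqlTable`; both names, and the activity name `CopyUrlToSql`, are hypothetical. Column mapping from the XML structure to the table is omitted and would normally be configured on the Mapping tab.

```json
{
    "name": "CopyUrlToSql",
    "description": "Hypothetical name. Copies the XML response of the current row's URL to Azure SQL.",
    "type": "Copy",
    "inputs": [
        {
            "referenceName": "HttpXml",
            "type": "DatasetReference",
            "parameters": {
                "url": {
                    "value": "@item().name",
                    "type": "Expression"
                }
            }
        }
    ],
    "outputs": [
        {
            "referenceName": "SqlTable",
            "type": "DatasetReference"
        }
    ],
    "typeProperties": {
        "source": {
            "type": "XmlSource",
            "storeSettings": {
                "type": "HttpReadSettings",
                "requestMethod": "GET"
            }
        },
        "sink": {
            "type": "AzureSqlSink"
        }
    }
}
```

As above, `name` in `@item().name` stands for whichever column of the lookup table holds the URLs.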