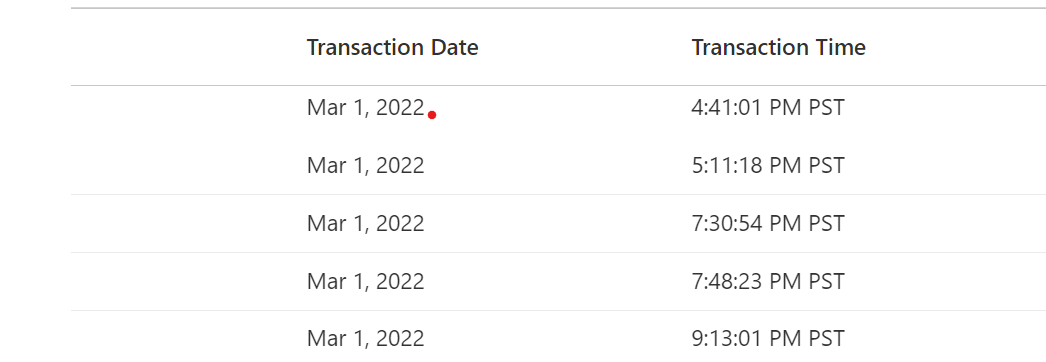

I am exploring different date formats and trying to convert date formats to others. Currently, I m stuck in a scenario where I have input dates and times as below:

I was able to convert it to a date timestamp using concatenation

concat_ws(' ',new_df.transaction_date,new_df.Transaction_Time)

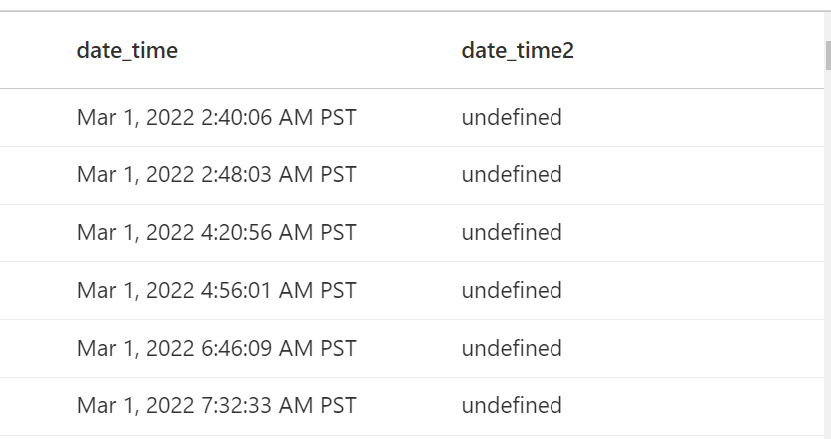

While I m trying to use

withColumn("date_time2", F.to_date(col('date_time'), "MMM d yyyy hh:mmaa")) with ('spark.sql.legacy.timeParserPolicy','LEGACY')

It is displayed as 'undefined'

I am looking for pointers/code snippets to extract YYYY-MM-DD HH:MM:SS in CET (input is in PST) as below

| input_date_time | output (in CET) |

|---|---|

| Mar 1, 2022 01:00:00 PM PST | 2022-03-01 22:00:00 |

CodePudding user response:

Parse PST string to timestamp with timezone in UTC. Then convert to "CET" time:

import pyspark.sql.functions as F

df = spark.createDataFrame(data=[["Mar 1, 2022 01:00:00 PM PST"]], schema=["input_date_time_pst"])

df = df.withColumn("input_date_time_pst", F.to_timestamp("input_date_time_pst", format="MMM d, yyyy hh:mm:ss a z"))

df = df.withColumn("output_cet", F.from_utc_timestamp("input_date_time_pst", "CET"))

[Out]:

------------------- -------------------

|input_date_time_pst|output_cet |

------------------- -------------------

|2022-03-01 21:00:00|2022-03-01 22:00:00|

------------------- -------------------

Note - The 2022-03-01 21:00:00 above is Mar 1, 2022 01:00:00 PM PST displayed in UTC.