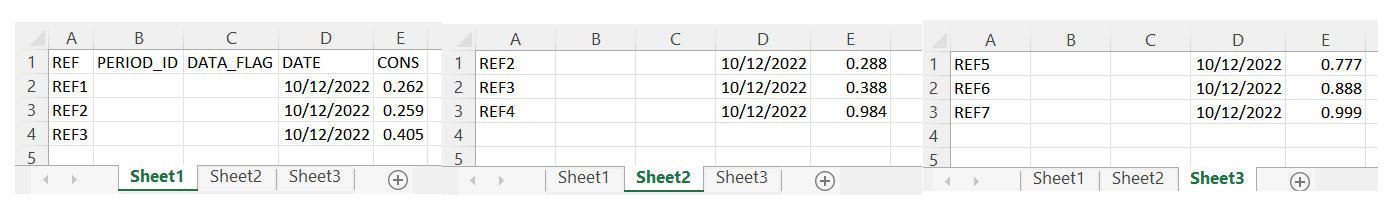

I have imported a .xlsx file with multiple tabs (around 3 million rows) where column headers exist on first tab only, just data with no headers on remaining tabs. I now have a pandas datafreamne with some information that won't be required removed;

import pandas as pd

#load in .xlsx with multiple tabs as a dataframe

filepath = "Consumption_20221111_testdata.xlsx"

df_dict = pd.read_excel(filepath, sheet_name=None, header=None)

df_all = pd.concat(df_dict.values(), ignore_index=True)

df_all = df_all.drop(columns=df_all.columns[2]) #remove column "PERIOD_ID"

df_all = df_all.drop(columns=df_all.columns[2]) #remove column "DATA_FLAG"

df_all = df_all.drop(df_all.index[-1]) #remove the last row (details of query run on SQL database that produced .xlsx which is the last tab of the .xlsx)

df_all = df_all.dropna(how='all') #remove columns that are all 'NaN'

df_all[1] = pd.to_datetime(df_all[1][1]) #convert column from object to datetime

print(df_all)

print(df_all.dtypes)

My dataframe has an index noted for column and rows, but the date column now has a date instead of a header?

| 0 | 1 | 4 | |

|---|---|---|---|

| 0 | REF | 2022-10-12 | CONS |

| 1 | REF1 | 2022-10-12 | 0.262 |

| 2 | REF2 | 2022-10-12 | 0.259 |

| 3 | REF2 | 2022-10-12 | 0.405 |

I need to group the data on reference and date, aggregating the consumption to compare month to month but first I need to have a dataframe I can reference!

df_all["Month"] = df_all[1].dt.month

df_all = df_all.groupby(by=[1, "Month"], as_index=False).agg({"CONS":sum})

df_all

resulted in;

KeyError: "Column(s) ['CONS'] do not exist"

CodePudding user response:

Here is a proposition using method chaining :

df_all = (

pd.concat(pd.read_excel("Consumption_20221111_testdata.xlsx",

header=None, sheet_name=None), ignore_index=True)

.T.set_index(0).T

.rename_axis(None, axis=1)

.loc[:, ["REF", "DATE", "CONS"]]

.assign(MONTH= lambda x: x["DATE"].dt.month)

.groupby(by=["DATE", "MONTH"], as_index=False).agg({"CONS":sum})

)

# Output :

print(df_all)

DATE MONTH CONS

0 2022-10-12 10 5.25

NB: The input Excel was made with some of the rows you shared plus some random values.