I have a dataframe column which is of type string and has dates in it. I want to cast the column from string to date but the coolumn contains two types of date formats.

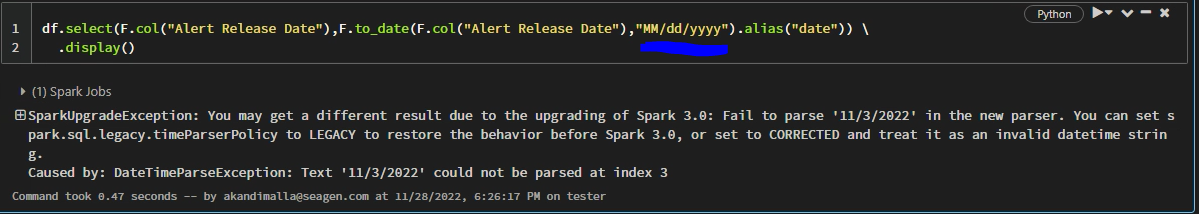

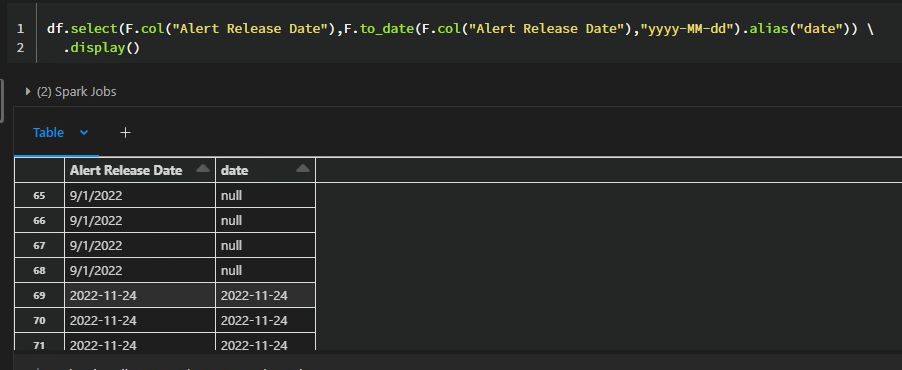

I tried using the to_date fuction but it is not working as expected and giving null values after applying function.

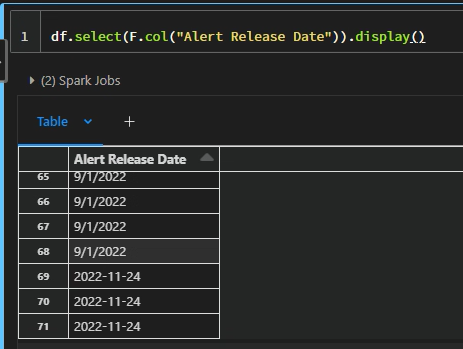

Below are the two date formats I am getting in the df col(datatype - string)

I tried applying the to_date function and below are the results

Please let me know how we can solve this issue and get the date column in only one format

Thanks in advance

CodePudding user response:

You can use pyspark.sql.functions.coalesce to return the first non-null result in a list of columns. So the trick here is to parse using multiple formats and take the first non-null one:

from pyspark.sql import functions as F

df = spark.createDataFrame([

("9/1/2022",),

("2022-11-24",),

], ["Alert Release Date"])

x = F.col("Alert Release Date")

df.withColumn("date", F.coalesce(F.to_date(x, "M/d/yyyy"), F.to_date(x, "yyyy-MM-dd"))).show()

------------------ ----------

|Alert Release Date| date|

------------------ ----------

| 9/1/2022|2022-09-01|

| 2022-11-24|2022-11-24|

------------------ ----------