I am building a job in AWS Glue and my table has a column named as booking with string data type. It includes some null values along with date information. While converting string to date using **pySpark **data frame, these null values are causing issue.

| booking | name |

|---|---|

| 11.01.2022 | John |

| null | max |

I tried multiple work around.

use fillna() & fill() to replace null value with dummy date as 01.01.1900. Unfortunately glue job throws an error stating that ImportError: cannot import name 'fill' from 'pyspark.sql.functions' My idea was to replace null values with dummy date and then convert it using to_date function.

writing when condition:

df_spark = df_spark.withColumn( "booking", when(df_spark.booking.isNull(), "01.01.1900") .otherwise(df_spark.booking) )

df_spark = df_spark.withColumn( "buchungs_datum", to_date(df_spark["buchungs_datum"],"dd.MM.yyyy") )

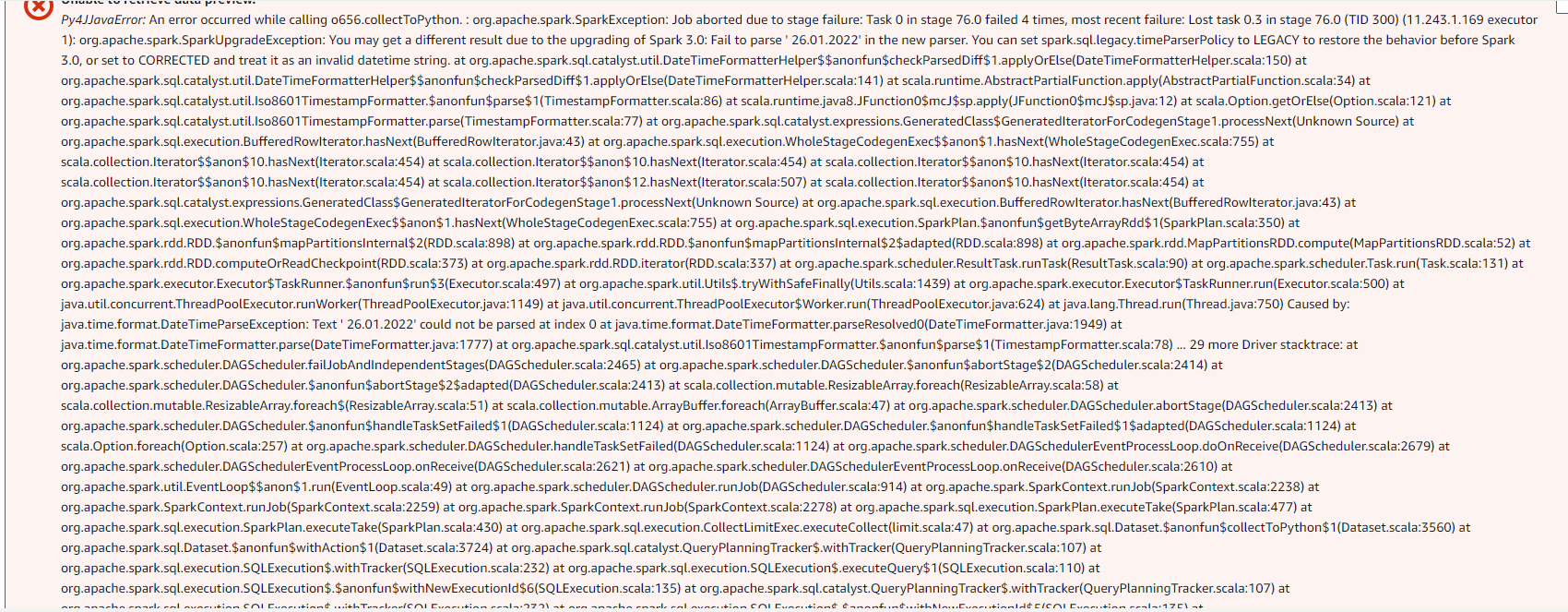

This also throws super weird error message (attached with the screenshot)

- Below statement also does not work.

df_spark = df_spark.withColumn( "booking", to_date(df_spark["booking"], None) )

Could you please help me with the correct method here?

Many thanks in advance.

CodePudding user response:

Use coalesce function in ‘WithColumn’. This will replace null to value you provide.

CodePudding user response:

Unfortunately glue job throws an error stating that ImportError: cannot import name 'fill' from 'pyspark.sql.functions'

The fill is a method that you call on a specific DataFrame so you don't have import it. It is part of PySpark natively, so if rest of PySpark code runs fine, then there shouldn't a problem with it. You can refer to docs on how to use it here - https://spark.apache.org/docs/latest/api/python/reference/pyspark.sql/api/pyspark.sql.DataFrameNaFunctions.fill.html#pyspark-sql-dataframenafunctions-fill