I would like to change the repeated comments with word "same" but keep the original ones and change the ID like below. However, some comments are not matched exactly such as the last three.

df = {'Key': ['111', '111','111', '222*1','222*2', '333*1','333*2', '333*3','444','444', '444'],

'id' : ['', '','', '1','2', '1','2', '3','', '','',],

'comment': ['wrong sentence', 'wrong sentence','wrong sentence', 'M','M', 'F','F', 'F','wrong sentence used in the topic', 'wrong sentence used','wrong sentence use']}

# Create DataFrame

df = pd.DataFrame(df)

print(df)

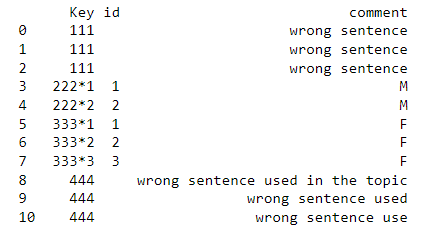

the input :

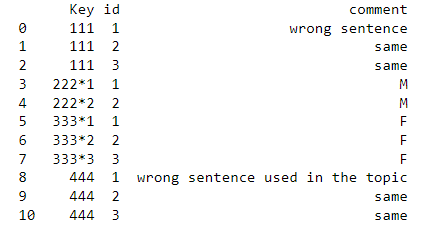

Desired output :

CodePudding user response:

ind = df['comment'].str.contains('wrong sentence')

def my_func(x):

if len(x['comment'].values[0]) > 1 and len(x) > 1 and ind[x.index[0]]:

df.loc[x.index[1:], 'comment'] = 'same'

df.loc[x.index, 'id'] = range(1, len(x) 1)

df.groupby('Key').apply(my_func)

print(df)

Output

Key id comment

0 111 1 wrong sentence

1 111 2 same

2 111 3 same

3 222*1 1 M

4 222*2 2 M

5 333*1 1 F

6 333*2 2 F

7 333*3 3 F

8 444 1 wrong sentence used in the topic

9 444 2 same

10 444 3 same

Here, contains is used to match 'wrong sentence'. The result is a boolean mask.

Groupby is applied on the 'Key' column, the grouping result is passed to the user-defined function: my_func. Where the conditions are checked string is greater than 1, strings greater than 1 and matches the word 'wrong sentence'.

loc is used to reset values.

CodePudding user response:

Use:

#test substrings to mask

m = df['comment'].str.contains('wrong sentence')

#create consecutive groups only for matched mask and create counter

counter = df.groupby((~m).cumsum().where(m)).cumcount().add(1)

#assign counter only for matched rows

df.loc[m, 'id'] = counter[m]

#assign same for duplicates - it means if counter values greater like 1

df.loc[counter.gt(1) & m, 'comment'] = 'same'

print (df)

Key id comment

0 111 1 wrong sentence

1 111 2 same

2 111 3 same

3 222*1 1 M

4 222*2 2 M

5 333*1 1 F

6 333*2 2 F

7 333*3 3 F

8 444 1 wrong sentence used in the topic

9 444 2 same

10 444 3 same

If need also test duplicates per Key groups:

m = df['comment'].str.contains('wrong sentence')

counter = df.groupby(['Key',(~m).cumsum().where(m)]).cumcount().add(1)

df.loc[m, 'id'] = counter[m]

df.loc[counter.gt(1) & m, 'comment'] = 'same'

print (df)

Key id comment

0 111 1 wrong sentence

1 111 2 same

2 111 3 same

3 222*1 1 M

4 222*2 2 M

5 333*1 1 F

6 333*2 2 F

7 333*3 3 F

8 444 1 wrong sentence used in the topic

9 444 2 same

10 444 3 same