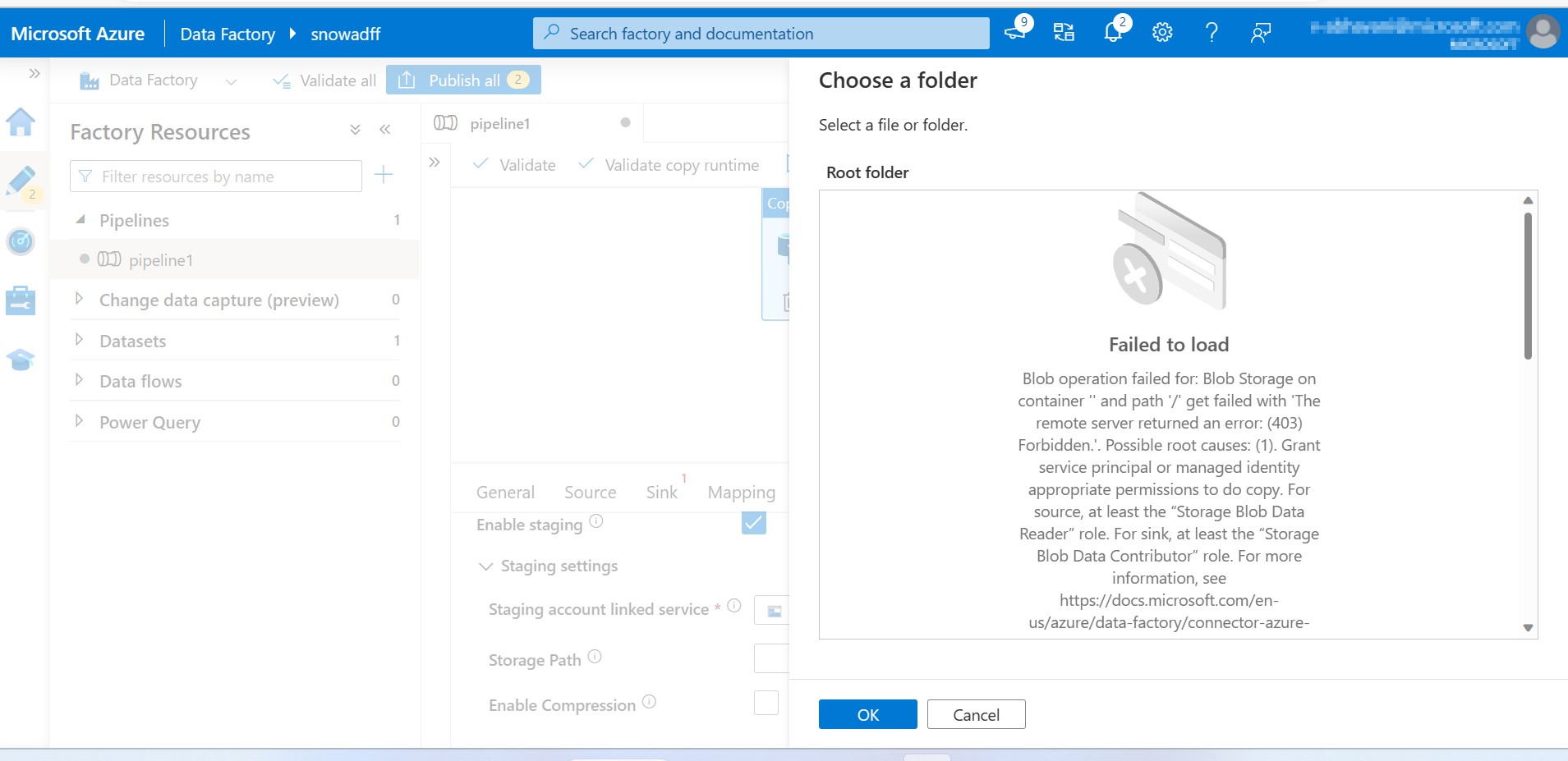

I was able to test the Blob Storage connection successfully, but when I try to browse for the storage path, it shows the error in the screenshot.

Full error:

Failed to load Blob operation failed for: Blob Storage on container '' and path '/' get failed with 'The remote server returned an error: (403) Forbidden.'. Possible root causes: (1). Grant service principal or managed identity appropriate permissions to do copy. For source, at least the “Storage Blob Data Reader” role. For sink, at least the “Storage Blob Data Contributor” role. For more information, see https://docs.microsoft.com/en-us/azure/data-factory/connector-azure-blob-storage?tabs=data-factory#service-principal-authentication. (2). It's possible because some IP address ranges of Azure Data Factory are not allowed by your Azure Storage firewall settings. Azure Data Factory IP ranges please refer https://docs.microsoft.com/en-us/azure/data-factory/azure-integration-runtime-ip-addresses. If you allow trusted Microsoft services to access this storage account option in firewall, you must use https://docs.microsoft.com/en-us/azure/data-factory/connector-azure-blob-storage?tabs=data-factory#managed-identity. For more information on Azure Storage firewalls settings, see https://docs.microsoft.com/en-us/azure/storage/common/storage-network-security?tabs=azure-portal.. The remote server returned an error: (403) Forbidden.StorageExtendedMessage=Server failed to authenticate the request. Make sure the value of Authorization header is formed correctly including the signature.

Context: I'm trying to copy data from a SQL database to Snowflake using Azure Data Factory. Since the pipeline doesn't publish otherwise, I enabled staged copy and connected Blob Storage.

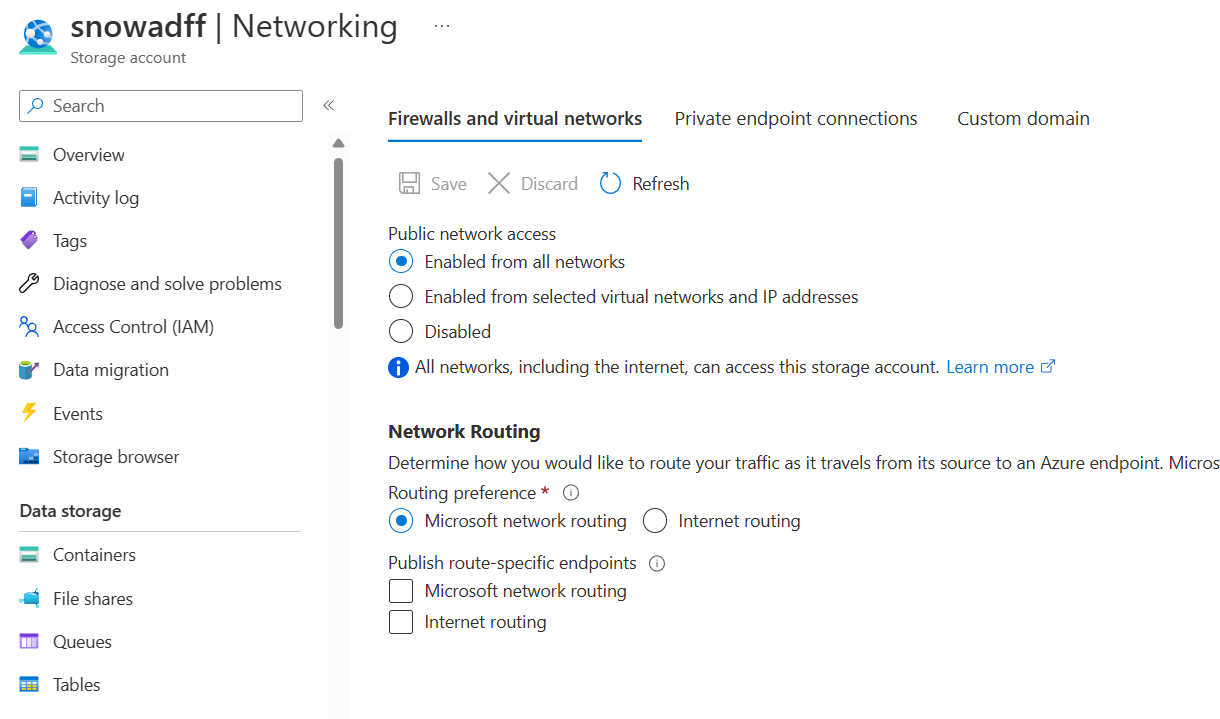

I already checked the networking settings, and the storage account is set to allow access from all networks. I'm not sure what I'm missing, because I found a YouTube video where this works, but it doesn't show any issue similar to this one: https://www.youtube.com/watch?v=5rLbBpu1f6E

I also tried leaving the storage path empty, but the triggered run of the copy data pipeline fails too.

Full error from trigger:

Operation on target Copy Contacts failed: Failure happened on 'Sink' side. ErrorCode=FileForbidden,'Type=Microsoft.DataTransfer.Common.Shared.HybridDeliveryException,Message=Error occurred when trying to upload a blob, detailed message: dbo.vw_Contacts.txt,Source=Microsoft.DataTransfer.ClientLibrary,''Type=Microsoft.WindowsAzure.Storage.StorageException,Message=The remote server returned an error: (403) Forbidden.,Source=Microsoft.WindowsAzure.Storage,StorageExtendedMessage=Server failed to authenticate the request. Make sure the value of Authorization header is formed correctly including the signature.

CodePudding user response:

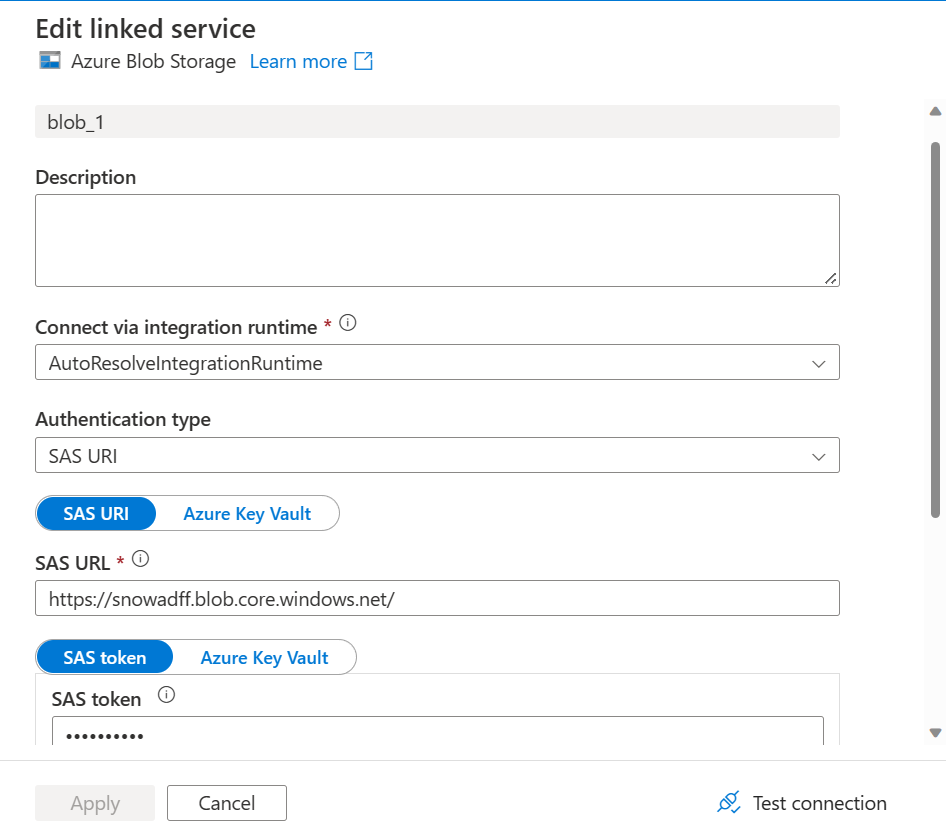

I created a Blob Storage account and generated a SAS token for it. I then created a Blob Storage linked service using the SAS URI, and it was created successfully. Image for reference:

When I tried to retrieve the path, I got the error below.

I changed the storage account's networking settings to "Enabled from all networks". Image for reference:

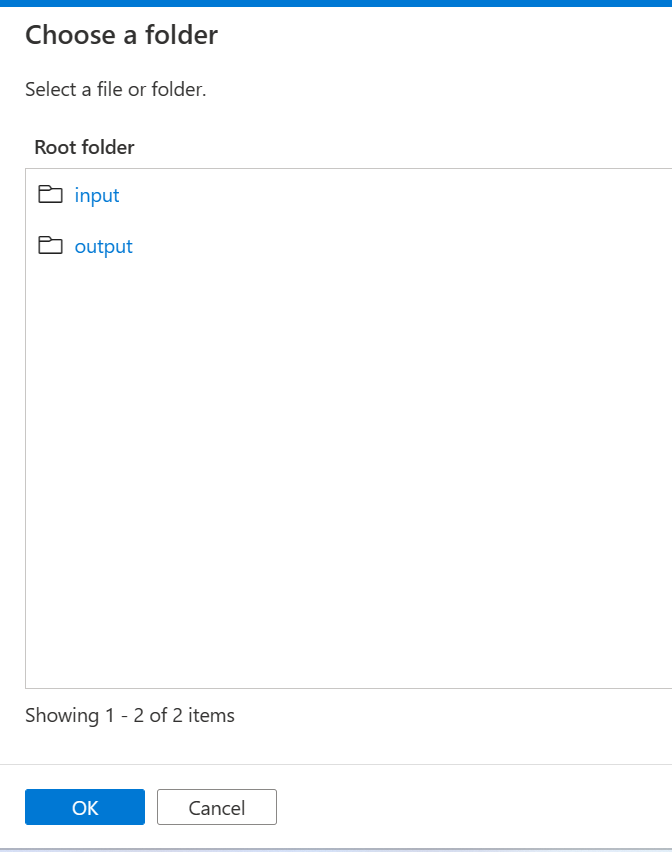

I tried to retrieve the path again in Data Factory, and this time it worked. Image for reference:

Alternatively, you can resolve this issue by whitelisting the Azure Data Factory IP addresses in the storage account firewall.
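If you go the whitelisting route, it can help to check which Data Factory ranges your firewall rules do not yet cover. The following is only a minimal sketch: the helper name `uncovered_ranges` is hypothetical, and the CIDR values are placeholders from documentation-reserved address space, not real Azure Data Factory IPs. Take the actual ranges for your region from the IP address page linked in the error message.

```python
import ipaddress


def uncovered_ranges(required, allowed):
    """Return the required CIDR ranges that no allowed range fully contains."""
    allowed_nets = [ipaddress.ip_network(a) for a in allowed]
    missing = []
    for cidr in required:
        net = ipaddress.ip_network(cidr)
        # subnet_of() raises TypeError on mixed IPv4/IPv6, so compare versions first.
        covered = any(
            net.version == a.version and net.subnet_of(a) for a in allowed_nets
        )
        if not covered:
            missing.append(cidr)
    return missing


# Placeholder values (assumptions), not real ADF or firewall ranges.
adf_ranges = ["203.0.113.0/28", "198.51.100.16/29"]
firewall_rules = ["203.0.113.0/24"]

print(uncovered_ranges(adf_ranges, firewall_rules))
```

Any range the function returns would still need a matching firewall rule on the storage account.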

CodePudding user response:

From the error message:

'The remote server returned an error: (403) Forbidden.'

It's likely that the authentication method you're using doesn't have sufficient permissions on the Blob Storage account to list the paths. I would recommend using the Data Factory's managed identity for this data transfer.

- Note the name of the Data Factory.

- Assign the Storage Blob Data Contributor role, scoped to the container or to the storage account, to the ADF managed identity (the name from step 1).

- On your Blob Storage linked service in Data Factory, choose the managed identity authentication method.
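The steps above can be sketched in Python as follows. This is an illustration under assumptions, not a definitive setup: the helper names and all IDs and resource names are hypothetical placeholders. The script only builds the Azure CLI role assignment command and a linked service definition that uses `serviceEndpoint` (the managed identity form); it does not call Azure.

```python
import json


def storage_scope(subscription_id, resource_group, account, container=None):
    """Build the resource ID used as the role assignment scope."""
    scope = (
        f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
        f"/providers/Microsoft.Storage/storageAccounts/{account}"
    )
    if container:
        # Narrow the scope to a single container instead of the whole account.
        scope += f"/blobServices/default/containers/{container}"
    return scope


def role_assignment_command(principal_id, scope):
    """Return the az CLI command that grants Storage Blob Data Contributor."""
    return (
        "az role assignment create "
        f"--assignee {principal_id} "
        '--role "Storage Blob Data Contributor" '
        f'--scope "{scope}"'
    )


def managed_identity_linked_service(name, account):
    """Linked service definition with no key or SAS, only the endpoint."""
    return {
        "name": name,
        "properties": {
            "type": "AzureBlobStorage",
            "typeProperties": {
                "serviceEndpoint": f"https://{account}.blob.core.windows.net/"
            },
        },
    }


# All values below are placeholders (assumptions), not taken from the question.
scope = storage_scope("<subscription-id>", "my-rg", "mystagingaccount", "staging")
print(role_assignment_command("<adf-managed-identity-object-id>", scope))
print(json.dumps(managed_identity_linked_service("StagingBlob", "mystagingaccount"), indent=2))
```

Role assignments can take a few minutes to propagate, so retry browsing the path shortly after running the printed command.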

Also, if you stage your data transfer in Blob Storage, make sure the user can write to the blob storage and also has bulk permissions on SQL Server.