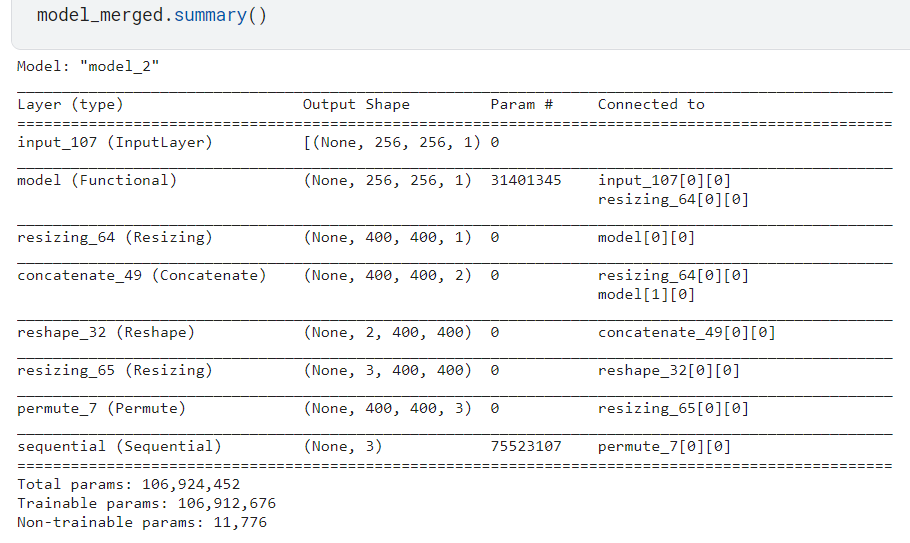

I have the following model:

I tried to fit the model using `ImageDataGenerator` with `flow_from_directory` and `fit_generator`, but I get the following error:

```
ValueError: Input 0 is incompatible with layer model: expected shape=(None, 256, 256, 1), found shape=(None, 400, 400, 1)
```

I used the correct target_size, so I don't know why the error appears. My code is the following:

```python
from tensorflow.keras.preprocessing.image import ImageDataGenerator

model_merged.compile(loss='categorical_crossentropy',
                     optimizer="adam",
                     metrics=['acc'])

train_datagen = ImageDataGenerator(rescale=1./255, validation_split=0.25)

# Training data
train_generator = train_datagen.flow_from_directory(
    '/kaggle/working/images/',  # Source directory
    target_size=(256, 256),     # Resizes images
    batch_size=batch_size,
    class_mode='categorical',
    subset='training')

epochs = epochs

# Validation data
validation_generator = train_datagen.flow_from_directory(
    '/kaggle/working/images/',
    target_size=(256, 256),
    batch_size=batch_size,
    class_mode='categorical',
    subset='validation')  # set as validation data

# Model fitting for a number of epochs
history = model_merged.fit_generator(
    train_generator,
    steps_per_epoch=steps_train,
    epochs=epochs,
    validation_data=validation_generator,
    validation_steps=steps_val,
    verbose=1)
```
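Note that `target_size` only sets the height and width of each image. The channel count comes from `color_mode`, which defaults to `"rgb"` in both `flow_from_directory` and `image_dataset_from_directory`, so batches arrive as `(batch_size, 256, 256, 3)`; passing `color_mode="grayscale"` gives one channel instead. The NumPy sketch below is only an illustration of that shape change, not Keras code: it collapses the channel axis with the ITU-R BT.601 luma weights (the values `tf.image.rgb_to_grayscale` uses) on a made-up random batch.

```python
import numpy as np

# ITU-R BT.601 luma weights; tf.image.rgb_to_grayscale uses the same values.
RGB_WEIGHTS = np.array([0.2989, 0.5870, 0.1140], dtype=np.float32)


def rgb_batch_to_grayscale(batch: np.ndarray) -> np.ndarray:
    """Convert an (N, H, W, 3) RGB batch into an (N, H, W, 1) grayscale batch."""
    if batch.ndim != 4 or batch.shape[-1] != 3:
        raise ValueError(f"expected shape (N, H, W, 3), got {batch.shape}")
    # Weighted sum over the channel axis; keepdims leaves a size-1 channel axis.
    return np.sum(batch * RGB_WEIGHTS, axis=-1, keepdims=True)


# Made-up batch shaped like the default "rgb" output with target_size=(256, 256).
rgb = np.random.default_rng(0).random((4, 256, 256, 3), dtype=np.float32)
gray = rgb_batch_to_grayscale(rgb)
print(rgb.shape, "->", gray.shape)  # (4, 256, 256, 3) -> (4, 256, 256, 1)
```

In the real pipeline the loader option is simpler than converting afterwards; the sketch just makes explicit which axis `target_size` does not touch.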

Update

```python
import tensorflow as tf
from tensorflow.keras.layers import Permute, Reshape, Resizing

batch_size = 32
epochs = 32
steps_train = 18
steps_val = 3
img_height = 256
img_width = 256
data_dir = '/kaggle/working/images/'

model_merged.compile(loss='categorical_crossentropy',
                     optimizer="adam",
                     metrics=['acc'])

train_ds = tf.keras.utils.image_dataset_from_directory(
    data_dir,
    validation_split=0.2,
    subset="training",
    seed=123,
    image_size=(img_height, img_width),
    batch_size=batch_size)

val_ds = tf.keras.utils.image_dataset_from_directory(
    data_dir,
    validation_split=0.2,
    subset="validation",
    seed=123,
    image_size=(img_height, img_width),
    batch_size=batch_size)

# scale pixel value between 0 and 1
normalization_layer = tf.keras.layers.Rescaling(1./255)
reshape_layer = Reshape((-1, 256, 256))
resize_layer = Resizing(1, 256)
permute_layer = Permute((2, 3, 1))

train_ds = train_ds.map(lambda x, y: (normalization_layer(x), y))
val_ds = val_ds.map(lambda x, y: (normalization_layer(x), y))
train_ds = train_ds.map(lambda x, y: (reshape_layer(x), y))
val_ds = val_ds.map(lambda x, y: (reshape_layer(x), y))
train_ds = train_ds.map(lambda x, y: (resize_layer(x), y))
val_ds = val_ds.map(lambda x, y: (resize_layer(x), y))
train_ds = train_ds.map(lambda x, y: (permute_layer(x), y))
val_ds = val_ds.map(lambda x, y: (permute_layer(x), y))

AUTOTUNE = tf.data.AUTOTUNE
train_ds = train_ds.cache().prefetch(buffer_size=AUTOTUNE)
val_ds = val_ds.cache().prefetch(buffer_size=AUTOTUNE)

history = model_merged.fit(
    train_ds,
    steps_per_epoch=steps_train,
    epochs=epochs,
    validation_data=val_ds,
    validation_steps=steps_val,
    verbose=1)
```
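`steps_train = 18` and `steps_val = 3` are hard-coded. When `fit` receives a `tf.data.Dataset`, `steps_per_epoch` and `validation_steps` can be left out, and each epoch then runs until the dataset is exhausted. If they are kept, deriving them from the actual image counts avoids asking for more batches than the datasets contain. A small sketch, assuming the validation subset gets `int(validation_split * num_images)` images and training gets the rest (treat that split rule as an assumption to check against the Keras version in use), with a made-up image count:

```python
import math
from typing import Tuple


def split_counts(num_images: int, validation_split: float) -> Tuple[int, int]:
    """(train, validation) image counts, ASSUMING the validation subset gets
    int(validation_split * num_images) images and training gets the rest."""
    num_val = int(validation_split * num_images)
    return num_images - num_val, num_val


def steps_for(num_samples: int, batch_size: int) -> int:
    """Batches needed to see every sample once; the last batch may be partial."""
    return math.ceil(num_samples / batch_size)


# Made-up total of 1000 images with validation_split=0.2 and batch_size=32.
n_train, n_val = split_counts(1000, 0.2)
print(n_train, n_val, steps_for(n_train, 32), steps_for(n_val, 32))  # 800 200 25 7
```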

The error is the same as the one above. I added some new layers to change the input shape from `(None, 256, 256, 3)` to `(None, 256, 256, 1)`, since that mismatch was the initial error, but it still doesn't work. I am not sure where the error comes from, because the shapes of the training and validation datasets are correct now.
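The extra layers produce the right shape but not a grayscale image. `Reshape((-1, 256, 256))` only reinterprets the flat buffer of each `(256, 256, 3)` image as `(3, 256, 256)`; unlike a transpose, it does not move whole channels to the front, so each of the three "planes" mixes interleaved R, G and B values. `Resizing(1, 256)` then treats that axis of size 3 as the image height and shrinks it to 1, and `Permute((2, 3, 1))` moves it to the end, giving `(256, 256, 1)` whose values no longer line up with pixel positions. The NumPy sketch below uses a tiny made-up 2×2 RGB image to contrast the reshape with a real channels-first transpose. Keras `Permute` dims are 1-indexed and skip the batch axis, so `Permute((2, 3, 1))` corresponds to `np.transpose(x, (1, 2, 0))` on a single image.

```python
import numpy as np

# Tiny made-up 2x2 RGB image in channels-last (H, W, C) layout.
# Red plane is [[0, 1], [2, 3]]; green adds 100, blue adds 200.
red = np.array([[0, 1], [2, 3]])
img = np.stack([red, red + 100, red + 200], axis=-1)  # shape (2, 2, 3)

# What Reshape((-1, H, W)) does to one image: same flat buffer, new shape.
reshaped = img.reshape(-1, 2, 2)               # shape (3, 2, 2)
# What moving channels to the front actually requires: a transpose.
channels_first = np.transpose(img, (2, 0, 1))  # shape (3, 2, 2)

print(reshaped[0].tolist())        # [[0, 100], [200, 1]]  R, G and B mixed
print(channels_first[0].tolist())  # [[0, 1], [2, 3]]      the real red plane

# Permute((2, 3, 1)) on one image is np.transpose(x, (1, 2, 0)):
# it undoes the transpose, but it cannot undo the reshape.
print(np.array_equal(np.transpose(channels_first, (1, 2, 0)), img))  # True
print(np.array_equal(np.transpose(reshaped, (1, 2, 0)), img))        # False
```

For a one-channel input, loading with `color_mode="grayscale"` avoids this reshaping chain altogether.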

Update 2

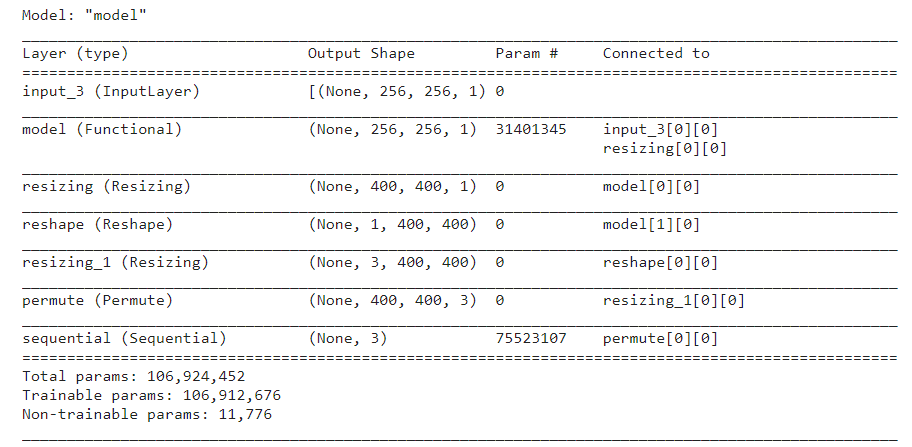

I removed the concatenation layer from the merged model, since I want the output of model A to be passed to the input of model B. However, even with the new merged model, the error still appears.
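Because the data pipelines above already yield 256×256 images, the 400×400 in `found shape=(None, 400, 400, 1)` suggests the mismatch is inside `model_merged`: the sub-model named `model` is probably receiving another sub-model's output rather than the input batch. Calling `summary()` on the merged model and on models A and B lists each layer's output shape and should reveal which one produces 400×400. The pure-Python sketch below mirrors the compatibility rule behind the message in simplified form (same rank; a `None` dimension matches anything, other dimensions must be equal) and walks a chain of stages to report the first mismatch. The stage names and model A's output shape are hypothetical, chosen only to reproduce the reported error.

```python
from typing import Optional, Sequence, Tuple

Shape = Tuple[Optional[int], ...]


def is_compatible(expected: Shape, found: Shape) -> bool:
    """Simplified shape check: same rank, and dims must match unless one is None."""
    if len(expected) != len(found):
        return False
    return all(e is None or f is None or e == f for e, f in zip(expected, found))


def check_chain(data_shape: Shape,
                stages: Sequence[Tuple[str, Shape, Shape]]) -> Optional[str]:
    """Feed data_shape through (name, expected_input, output) stages in order.

    Returns a message for the first stage whose input does not fit, or None.
    """
    current, source = data_shape, "input data"
    for name, expected_in, output in stages:
        if not is_compatible(expected_in, current):
            return (f"{name}: expected shape={expected_in}, "
                    f"found shape={current} (from {source})")
        current, source = output, name
    return None


# Hypothetical shapes: model A outputs 400x400, model B expects 256x256.
stages = [
    ("model_A", (None, 256, 256, 1), (None, 400, 400, 1)),
    ("model_B", (None, 256, 256, 1), (None, 10)),
]
print(check_chain((None, 256, 256, 1), stages))
# model_B: expected shape=(None, 256, 256, 1), found shape=(None, 400, 400, 1) (from model_A)
```

If that turns out to be the cause, one option is to change model A so it outputs 256×256×1; another is to resize A's output before it reaches B, for example with a `tf.keras.layers.Resizing(256, 256)` layer.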


Answer:

Are you open to using the more modern `tf.keras.utils.image_dataset_from_directory` (https://www.tensorflow.org/api_docs/python/tf/keras/utils/image_dataset_from_directory)?

If so, here is a working example close to your code:

```python
import tensorflow as tf
from tensorflow.keras.layers import Permute, Reshape, Resizing

batch_size = 32  # same value as in the question's update
epochs = 32
steps_train = 18
steps_val = 3
img_height = 256
img_width = 256
data_dir = '/kaggle/working/images/'

model_merged.compile(loss='categorical_crossentropy',
                     optimizer="adam",
                     metrics=['acc'])

train_ds = tf.keras.utils.image_dataset_from_directory(
    data_dir,
    validation_split=0.25,
    subset="training",
    seed=123,
    image_size=(img_height, img_width),
    label_mode="categorical",
    batch_size=batch_size)

val_ds = tf.keras.utils.image_dataset_from_directory(
    data_dir,
    validation_split=0.25,
    subset="validation",
    seed=123,
    image_size=(img_height, img_width),
    label_mode="categorical",
    batch_size=batch_size)

# scale pixel value between 0 and 1
normalization_layer = tf.keras.layers.Rescaling(1./255)
reshape_layer = Reshape((-1, 256, 256))
resize_layer = Resizing(1, 256)
permute_layer = Permute((2, 3, 1))

train_ds = train_ds.map(lambda x, y: (normalization_layer(x), y))
val_ds = val_ds.map(lambda x, y: (normalization_layer(x), y))
train_ds = train_ds.map(lambda x, y: (reshape_layer(x), y))
val_ds = val_ds.map(lambda x, y: (reshape_layer(x), y))
train_ds = train_ds.map(lambda x, y: (resize_layer(x), y))
val_ds = val_ds.map(lambda x, y: (resize_layer(x), y))
train_ds = train_ds.map(lambda x, y: (permute_layer(x), y))
val_ds = val_ds.map(lambda x, y: (permute_layer(x), y))

AUTOTUNE = tf.data.AUTOTUNE
train_ds = train_ds.cache().prefetch(buffer_size=AUTOTUNE)
val_ds = val_ds.cache().prefetch(buffer_size=AUTOTUNE)

history = model_merged.fit(
    train_ds,
    steps_per_epoch=steps_train,
    epochs=epochs,
    validation_data=val_ds,
    validation_steps=steps_val,
    verbose=1)
```
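Compared with the code in the question's update, this version changes `validation_split` to 0.25 and adds `label_mode="categorical"`. `image_dataset_from_directory` defaults to `label_mode="int"`, which yields one integer class index per image, whereas `categorical_crossentropy` expects one-hot vectors; the alternative is to keep integer labels and compile with `loss='sparse_categorical_crossentropy'`. The NumPy sketch below is a simplified illustration (no clipping of predictions as Keras does, made-up labels and probabilities) showing that the two losses agree once integer labels are one-hot encoded.

```python
import numpy as np


def one_hot(labels: np.ndarray, num_classes: int) -> np.ndarray:
    """Integer class indices -> one-hot rows (the label_mode="categorical" format)."""
    return np.eye(num_classes, dtype=np.float64)[labels]


def categorical_crossentropy(y_true: np.ndarray, y_pred: np.ndarray) -> float:
    """Batch mean of -sum(y_true * log(y_pred)); expects one-hot y_true.
    Simplified: y_pred is not clipped the way Keras clips it."""
    return float(np.mean(-np.sum(y_true * np.log(y_pred), axis=-1)))


def sparse_categorical_crossentropy(y_true: np.ndarray, y_pred: np.ndarray) -> float:
    """Same loss for integer y_true: take the log-probability of the true class."""
    return float(np.mean(-np.log(y_pred[np.arange(len(y_true)), y_true])))


labels = np.array([0, 2, 1])          # made-up "int" labels for 3 images
probs = np.array([[0.7, 0.2, 0.1],    # made-up softmax outputs, 3 classes
                  [0.1, 0.3, 0.6],
                  [0.2, 0.5, 0.3]])

print(one_hot(labels, 3).tolist())  # [[1.0, 0.0, 0.0], [0.0, 0.0, 1.0], [0.0, 1.0, 0.0]]
print(np.isclose(categorical_crossentropy(one_hot(labels, 3), probs),
                 sparse_categorical_crossentropy(labels, probs)))  # True
```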