All of the Google links on this are already pink (visited)... I've read almost every answer on here and consulted several colleagues, and none of them can work out what is wrong with my config.

My WebSocket server

// Additional Fastify websocket set-up omitted. Key point: it's running on port 5000.
const start = async () => {
  try {
    await fastify.listen({ port: 5000 });
    console.log(`Market data websocket listening on ws://localhost:5000`);
  } catch (err) {
    fastify.log.error(err);
    process.exit(1);
  }
};

start();

Confirmation that this server works locally

I tested using wscat and it connects perfectly fine:

wscat -c "ws://localhost:5000"

Deployment file

apiVersion: apps/v1
kind: Deployment
metadata:
  name: test
spec:
  replicas: 1
  selector:
    matchLabels:
      app: test
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: market-data-ws
    spec:
      containers:
        - name: market-data-ws
          image: eu.gcr.io/test-repo/market-data-ws
          envFrom:
            - secretRef:
                name: market-data-ws-env
          resources:
            limits:
              memory: '0.5'
              cpu: '0.25'
          ports:
            - containerPort: 5000
          livenessProbe:
            httpGet:
              path: /healthz
              port: 8080
            initialDelaySeconds: 5

Service

apiVersion: v1
kind: Service
metadata:
  name: market-data-ws
spec:
  type: ClusterIP
  selector:
    app: market-data-ws
  ports:
    - port: 80
      targetPort: 5000

My issue

I'm trying to connect to this service from another pod in the same cluster. It does not need to be exposed via ingress.

Note: the pod fails its liveness probe, even though the liveness endpoint works when I exec into the pod and check it myself.

The liveness endpoint works from inside the pod

kubectl exec -it $(kubectl get pods -o name | grep market-data) /bin/sh

curl http://localhost:5000/healthz

{"hello":"im alive"}/

❌ Accessing the pod by curling the hostname from inside another pod = Connection refused instantly

> kubectl exec -it $(kubectl get pods -o name | grep ui) /bin/sh

# curl the health check

> curl http://market-data-ws/healthz

> curl: (7) Failed to connect to market-data-ws port 80 after 8 ms: Connection refused

❌ Curl the IP instead of hostname = Connection refused instantly

curl 10.104.1.61:80/healthz

curl: (7) Failed to connect to 10.104.1.61 port 80 after 3 ms: Connection refused

❓ Curl the IP (but the wrong exposed port) = Connection Timeout after a few minutes

Because we get a time out here instead, this suggests to me that we were actually reaching our running container previously.

curl 10.104.1.61:5000/healthz

curl: (28) Failed to connect to 10.104.1.61 port 5000 after 129952 ms: Operation timed out

❓ When I port-forward my local port to this running service port it works

kub port-forward service/market-data-ws 5000:5000

Forwarding from 127.0.0.1:5000 -> 5000

Forwarding from [::1]:5000 -> 5000

wscat -c http://localhost:5000

> Connected

Other things I've tried

- Exec'ing into the market-data-ws pod and using wscat -c ws://localhost:5000 to check if it's actually running okay - it is.

- Double- and triple-checked my containerPort / targetPort, and colleagues have confirmed they look okay.

- Tried NodePort and ClusterIP

- Tried running my websocket server on port 80 and exposing that

Other info

- I'm using GCP GKE

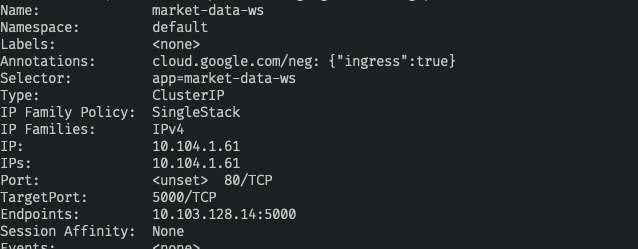

- Output of kubectl describe service market-data-ws

CodePudding user response:

Your service is accessible with kubectl port-forward, so the service is working.

You say your pod is not accessible from another pod. Verify that the other pod is running in the same namespace.

The correct way of addressing the service is:

servicename.namespace.svc.cluster.local

Inside the container from which you can't connect, try:

nc -zv servicename.namespace.svc.cluster.local port

It should respond: open

If nc is not available, try curl or install nc.

Note that in one of your attempts you are using the ClusterIP address. This won't work from outside the cluster.
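
If the container has Node but neither nc nor curl, roughly the same reachability check can be sketched with Node's built-in net module. This is only a sketch and not part of the original answer: canConnect is a hypothetical helper name, and the "default" namespace in the usage comment is an assumption, so substitute your own.

```javascript
// Rough equivalent of `nc -zv host port` using only Node's standard library.
const net = require('node:net');

// Hypothetical helper: resolves to true if a TCP connection to host:port
// succeeds, and to false on an error (e.g. ECONNREFUSED) or on timeout.
function canConnect(host, port, timeoutMs = 3000) {
  return new Promise((resolve) => {
    const socket = net.connect({ host, port });
    const finish = (ok) => {
      socket.destroy(); // release the socket whatever the outcome
      resolve(ok);
    };
    socket.setTimeout(timeoutMs, () => finish(false));
    socket.once('connect', () => finish(true));
    socket.once('error', () => finish(false));
  });
}

// Usage (namespace "default" is an assumption; 80 is the Service port from the question):
// canConnect('market-data-ws.default.svc.cluster.local', 80).then(console.log);
```

A result of false that comes back immediately usually means the connection was refused, while one that only arrives after the timeout suggests the packets are being dropped somewhere.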

CodePudding user response:

Okay, I have finally found my issue. Wow, that was the hardest problem I've had in a while.

Thanks to this answer I was able to fix my issue.

Fastify by default only listens on localhost.

I'm honestly not sure why this is not sufficient for use with Docker and Kubernetes, but I'm just glad it's working now.

If someone is able to explain this in greater detail, I would be very grateful.
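
For anyone landing here, a likely explanation is that localhost is the loopback interface inside the pod's own network namespace. Traffic routed through a Service arrives on the pod's IP rather than on loopback, so a server bound only to localhost refuses it, whereas kubectl port-forward and kubectl exec both connect from inside the pod and therefore work. The sketch below illustrates the difference with Node's built-in http module instead of Fastify so it runs without dependencies. listenOn is a hypothetical helper, and the Fastify call in the comment assumes the same setup as in the question.

```javascript
// Shows which address a server actually binds to for a given `host` option.
// With Fastify, the corresponding fix would be (assuming the question's setup):
//   await fastify.listen({ port: 5000, host: '0.0.0.0' });
const http = require('node:http');

// Hypothetical helper: start a server bound to `host` on an OS-assigned port,
// report the address it bound to, then shut it down again.
function listenOn(host) {
  return new Promise((resolve, reject) => {
    const server = http.createServer((req, res) => res.end('ok'));
    server.once('error', reject);
    server.listen({ port: 0, host }, () => {
      const { address } = server.address();
      server.close(() => resolve(address));
    });
  });
}

async function main() {
  // Loopback only: reachable from inside the pod (exec, port-forward), not via the Service.
  console.log(await listenOn('127.0.0.1'));
  // All IPv4 interfaces: also reachable on the pod IP that the Service forwards to.
  console.log(await listenOn('0.0.0.0'));
}

main();
```

Binding to 0.0.0.0 makes the server accept connections on every IPv4 interface of the pod, which is what Service traffic needs.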