I'm trying to find a method to rotate and translate a scanned 2D image so that it matches its near-identical, but lower-quality, digital template. After running the code, I want the images to align very closely when overlaid, so that post-processing effects can be applied to the scan based on knowledge of the template layout.

I've tried a number of approaches based on identifying features in the scanned image that could be consistently mapped to the template (without much luck using a findContours-based approach). Ultimately, I concluded that the most effective approach is to compute a homography with OpenCV and then apply the transform (using either perspectiveTransform or warpPerspective).

The matching I'm getting is phenomenally good. Even when I make the ratio-test threshold (dist_thresh) extremely restrictive, I still get dozens of point matches. I've varied both that threshold and the findHomography RANSAC reprojection threshold a fair bit. Ultimately, though, the transform I get from findHomography isn't good enough for my needs. I'm not sure whether there are knobs I'm not adequately exploring, or whether the disparity in image quality is just large enough that this isn't doable.
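One way to tell whether the homography itself is the weak point, or how it is being applied, is to measure how well `M` maps the matched points. The sketch below is a hypothetical helper (not part of the original code); it assumes `M`, `src_pts`, `dst_pts` and `mask` as produced in `feature_match`, with the points in OpenCV's `(N, 1, 2)` layout. If the inlier errors are around a pixel or less, the estimate fits the matches well and any remaining misalignment probably comes from elsewhere.

```python
import numpy as np


def reprojection_errors(M, src_pts, dst_pts):
    """Per-match distance (in template pixels) between M applied to src and dst.

    src_pts/dst_pts may be (N, 2) or OpenCV-style (N, 1, 2) arrays.
    """
    src = np.asarray(src_pts, dtype=np.float64).reshape(-1, 2)
    dst = np.asarray(dst_pts, dtype=np.float64).reshape(-1, 2)
    # Lift to homogeneous coordinates, apply M, then divide by the w component.
    homog = np.hstack([src, np.ones((len(src), 1))]) @ np.asarray(M, dtype=np.float64).T
    projected = homog[:, :2] / homog[:, 2:3]
    return np.linalg.norm(projected - dst, axis=1)
```

For example, restricted to the RANSAC inliers: `inliers = mask.ravel() == 1`, then `errs = reprojection_errors(M, src_pts[inliers], dst_pts[inliers])` and inspect `errs.mean()` and `errs.max()`.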

Here's the code I'm using:

```python
from matplotlib import pyplot as plt
import numpy as np
import cv2 as cv


def feature_match(scanned_image, template_image, MIN_MATCH_COUNT=10, dist_thresh=0.2, RANSAC=10.0):
    # Initiate SIFT detector
    sift = cv.SIFT_create()

    # Find the keypoints and descriptors with SIFT
    kp1, des1 = sift.detectAndCompute(scanned_image, None)
    kp2, des2 = sift.detectAndCompute(template_image, None)

    FLANN_INDEX_KDTREE = 1
    index_params = dict(algorithm=FLANN_INDEX_KDTREE, trees=5)
    search_params = dict(checks=50)
    flann = cv.FlannBasedMatcher(index_params, search_params)
    matches = flann.knnMatch(des1, des2, k=2)

    # Store all the good matches as per Lowe's ratio test
    # (skipping any case where knnMatch returns fewer than 2 neighbors).
    good = []
    for pair in matches:
        if len(pair) == 2 and pair[0].distance < dist_thresh * pair[1].distance:
            good.append(pair[0])

    M = None
    # Do we have enough?
    if len(good) > MIN_MATCH_COUNT:
        print("%s good matches using distance threshold of %s" % (len(good), dist_thresh))
        src_pts = np.float32([kp1[m.queryIdx].pt for m in good]).reshape(-1, 1, 2)
        dst_pts = np.float32([kp2[m.trainIdx].pt for m in good]).reshape(-1, 1, 2)
        M, mask = cv.findHomography(src_pts, dst_pts, cv.RANSAC, RANSAC)
        matchesMask = mask.ravel().tolist()

        # Apply warp perspective based on homography matrix
        warped_image = cv.warpPerspective(scanned_image, M, (scanned_image.shape[1], scanned_image.shape[0]))
        plt.imshow(warped_image, 'gray'), plt.show()
        # Only save the warped image when one was actually computed
        cv.imwrite("warped_image.png", cv.cvtColor(warped_image, cv.COLOR_GRAY2RGB))
    else:
        print("Not enough matches are found - {}/{}".format(len(good), MIN_MATCH_COUNT))
        matchesMask = None

    # Show quality of matches
    draw_params = dict(matchColor=(0, 255, 0),  # draw matches in green color
                       singlePointColor=None,
                       matchesMask=matchesMask,  # draw only inliers
                       flags=2)
    match_quality = cv.drawMatches(scanned_image, kp1, template_image, kp2, good, None, **draw_params)
    plt.imshow(match_quality, 'gray'), plt.show()

    # Save the function's own inputs rather than the globals img1/img2
    cv.imwrite("img1.png", cv.cvtColor(scanned_image, cv.COLOR_GRAY2RGB))
    cv.imwrite("img2.png", cv.cvtColor(template_image, cv.COLOR_GRAY2RGB))
    return M


# Load images
img1_path = r"scanned_image.png"
img2_path = r"template_image.png"

img1 = cv.imread(img1_path)
img1 = cv.cvtColor(img1, cv.COLOR_BGR2GRAY)
img2 = cv.imread(img2_path)
img2 = cv.cvtColor(img2, cv.COLOR_BGR2GRAY)

# Upscaling img2 to the final scale I'm ultimately after; saves an upscale.
# The third positional argument of cv.resize is dst, so the interpolation flag
# must be passed by keyword (cv.IMREAD_UNCHANGED is an imread flag, not one of these).
img2 = cv.resize(img2, (img2.shape[1] * 2, img2.shape[0] * 2), interpolation=cv.INTER_LINEAR)

M = feature_match(scanned_image=img1, template_image=img2, MIN_MATCH_COUNT=10, dist_thresh=0.2)
```

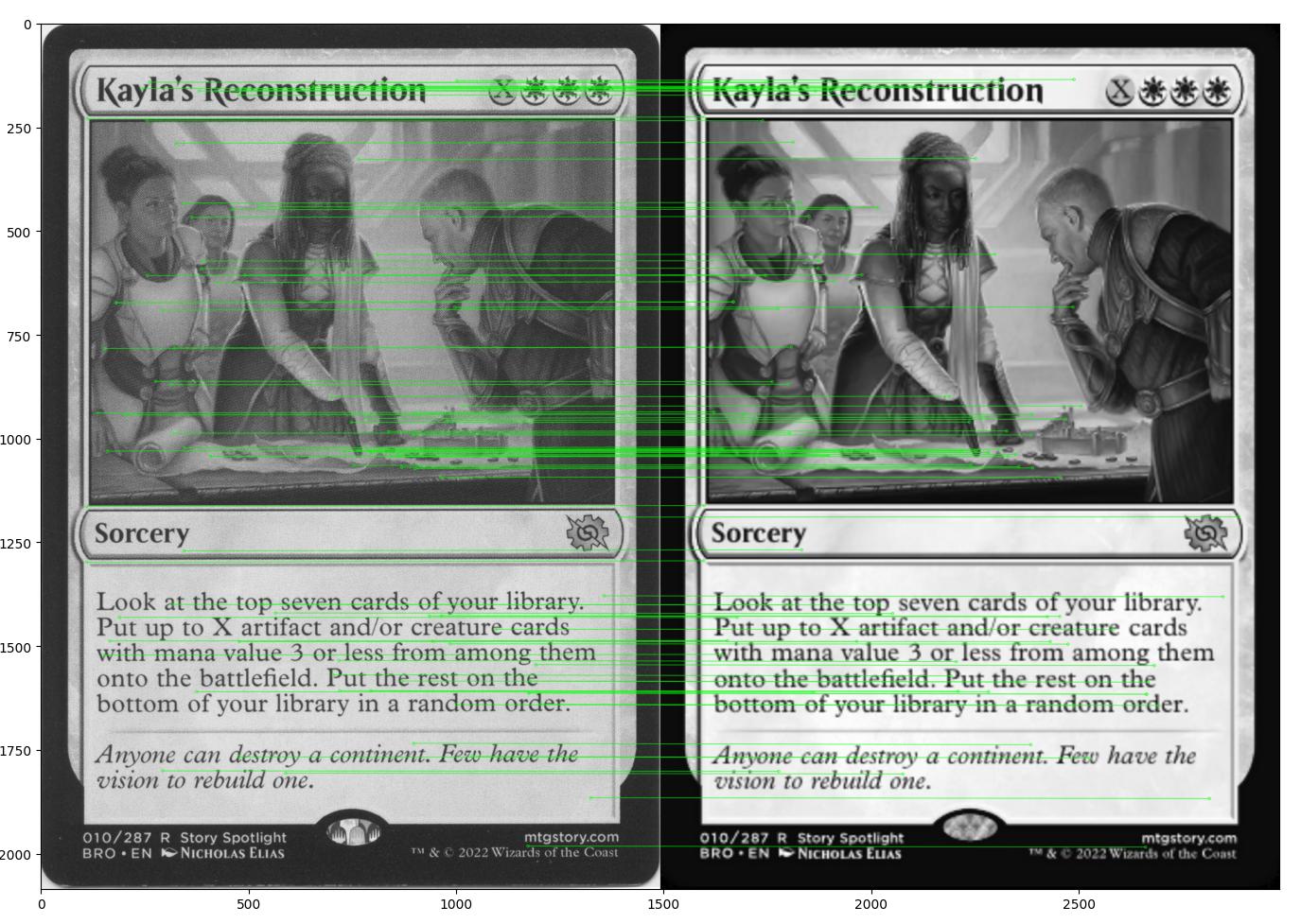

Here's the imagery I'm using:

Note: the template is both lower quality and initially lower resolution than the scan. There are minor differences between the two images, but not enough that they should degrade the match (I think?).

Matches between the scanned and template images

Using a distance threshold of 0.2, I'm getting 100 matches.

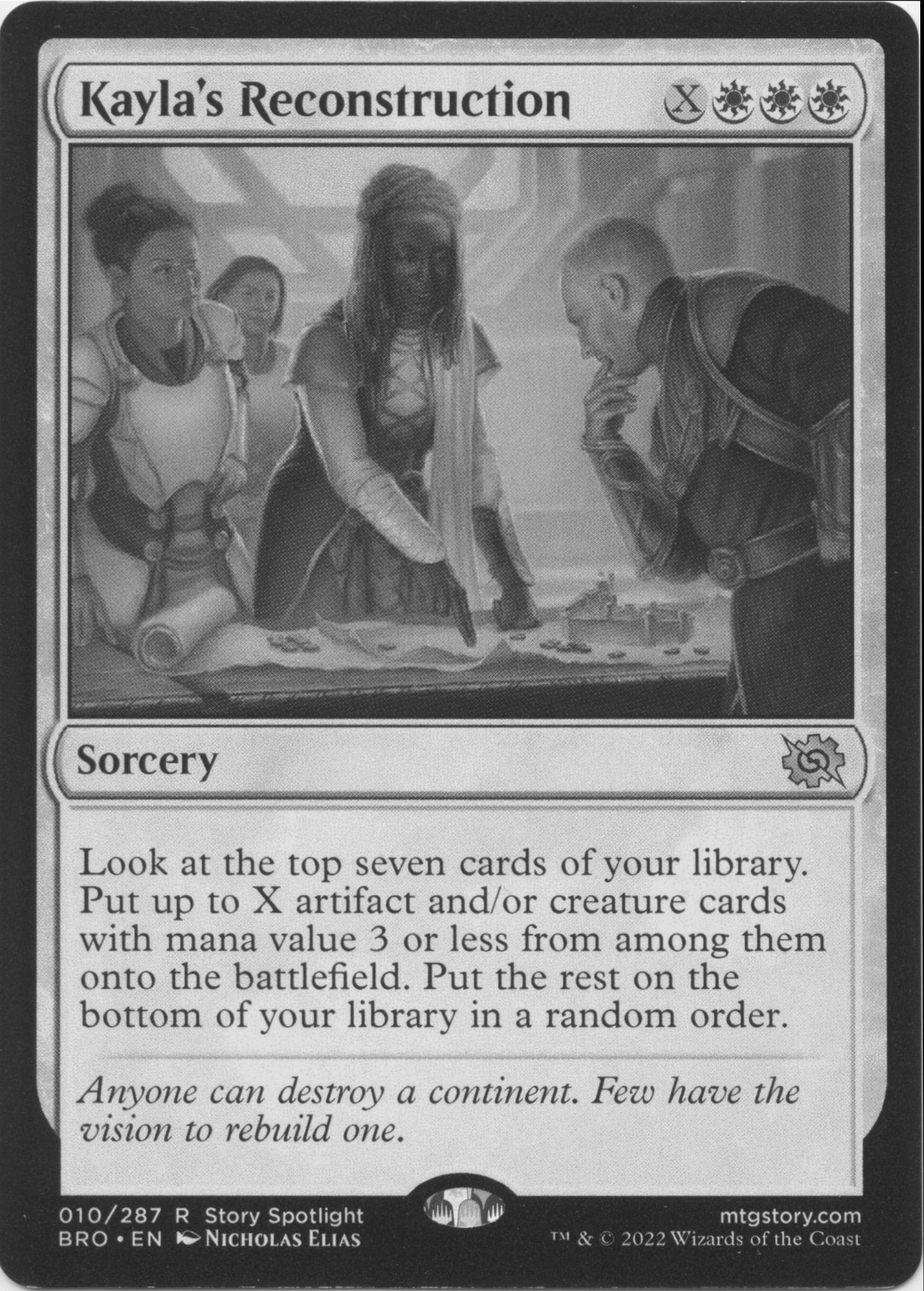

The warped scan overlaid on top of the template

I was expecting a better outcome than this, given the number of matched points. The transformed image looks good at first glance (and is certainly better aligned than the original scan), but it isn't aligned precisely enough to use knowledge of the template layout to modify the scan.
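When judging the overlay, a two-color composite can make residual misalignment easier to see than a semi-transparent blend. The sketch below is a hypothetical helper (not from the original post) that assumes two same-size grayscale `uint8` images, such as `template_image` and the warped scan: pixels where the images agree come out grey, dark strokes present only in the template come out green, and dark strokes present only in the scan come out magenta. It deliberately refuses to combine images of different sizes rather than resizing one of them.

```python
import numpy as np


def alignment_composite(template_image, warped_image):
    """Stack two same-size grayscale images into a 3-channel check image."""
    template = np.asarray(template_image)
    warped = np.asarray(warped_image)
    if template.shape != warped.shape:
        raise ValueError(f"size mismatch: template {template.shape} vs warped {warped.shape}")
    # First and third channels from the template, middle channel from the scan:
    # agreeing pixels are grey, template-only dark strokes are green,
    # scan-only dark strokes are magenta.
    return np.dstack([template, warped, template])
```

Because the first and third channels both come from the template, the result looks the same whether it is shown with `plt.imshow` (RGB order) or saved with `cv.imwrite` (BGR order).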

Is there an alternative approach I should take here? Parameters I should be using instead? Or is this just what's achievable given the quality of the template, or the methodology I'm using?

Answer:

To answer my own question, in case someone has a similar issue in future: when you apply your homography matrix, make sure the destination size you pass to warpPerspective matches the template you're aligning to, if you want an "exact" match with that template.

In my original code I had:

```python
warped_image = cv.warpPerspective(scanned_image, M, (scanned_image.shape[1], scanned_image.shape[0]))
```

It should have been this:

```python
warped_image = cv.warpPerspective(scanned_image, M, (template_image.shape[1], template_image.shape[0]))
```

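There are minor size differences between scanned_image and template_image. M maps scan pixels into the template's coordinate frame, so the output canvas (dsize, given as (width, height)) has to be the template's size for the warped scan to line up pixel-for-pixel with the template. The two images are close in size, but those minuscule differences are enough that, yes, projecting into the wrong size skews the alignment when comparing them directly.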

This updated version isn't perfect, but it's probably close enough for my needs. I suspect the ECC (enhanced correlation coefficient) matching approach and the second-stage refinement methods @fmw42 describes would make a good second pass. I'll look into them and edit this answer if they turn out to be worth exploring, for anyone dealing with this kind of problem in future.