I have the following function that uses nested loops, and honestly I'm not sure how to make the code run more efficiently. It runs fine for 100 sims in my opinion, but when I ran it for 2000 sims it took almost 12 seconds.

This code generates any number n of Brownian motion simulations and works well. The issue is that once the number of simulations is increased to, say, 500, it starts to bog down, and at 2,000 it is pretty slow, i.e. about 12 seconds.

Here is the function:

library(dplyr) # provides %>%; rlang and forcats are called with ::

ts_brownian_motion <- function(.time = 100, .num_sims = 10, .delta_time = 1,
                               .initial_value = 0) {

  # TidyEval ----
  T <- as.numeric(.time)
  N <- as.numeric(.num_sims)
  delta_t <- as.numeric(.delta_time)
  initial_value <- as.numeric(.initial_value)

  # Checks ----
  if (!is.numeric(T) | !is.numeric(N) | !is.numeric(delta_t) | !is.numeric(initial_value)){
    rlang::abort(
      message = "All parameters must be numeric values.",
      use_cli_format = TRUE
    )
  }

  # Initialize empty data.frame to store the simulations
  sim_data <- data.frame()

  # Generate N simulations
  for (i in 1:N) {
    # Initialize the current simulation with the starting value
    sim <- c(initial_value)

    # Generate the brownian motion values for each time step
    for (t in 1:(T / delta_t)) {
      sim <- c(sim, sim[t] + rnorm(1, mean = 0, sd = sqrt(delta_t)))
    }

    # Bind the time steps, simulation values, and simulation number together in a data.frame and add it to the result
    sim_data <- rbind(
      sim_data,
      data.frame(
        t = seq(0, T, delta_t),
        y = sim,
        sim_number = i
      )
    )
  }

  # Clean up
  sim_data <- sim_data %>%
    dplyr::as_tibble() %>%
    dplyr::mutate(sim_number = forcats::as_factor(sim_number)) %>%
    dplyr::select(sim_number, t, y)

  # Return ----
  attr(sim_data, ".time") <- .time
  attr(sim_data, ".num_sims") <- .num_sims
  attr(sim_data, ".delta_time") <- .delta_time
  attr(sim_data, ".initial_value") <- .initial_value

  return(sim_data)
}

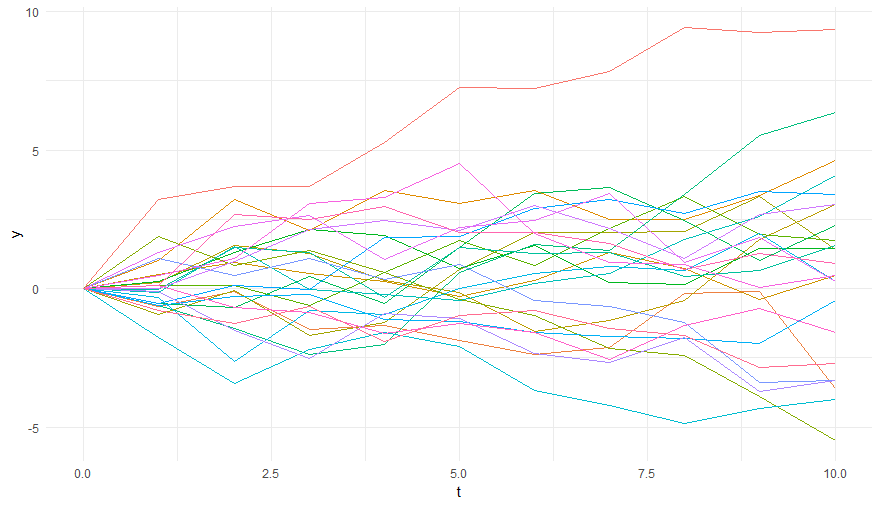

Here is some output of the function:

> ts_brownian_motion(.time = 10, .num_sims = 25)
# A tibble: 275 × 3
   sim_number     t       y
   <fct>      <dbl>   <dbl>
 1 1              0  0
 2 1              1 -2.13
 3 1              2 -1.08
 4 1              3  0.0728
 5 1              4  0.562
 6 1              5  0.255
 7 1              6 -1.28
 8 1              7 -1.76
 9 1              8 -0.770
10 1              9 -0.536
# … with 265 more rows
# ℹ Use `print(n = ...)` to see more rows

CodePudding user response:

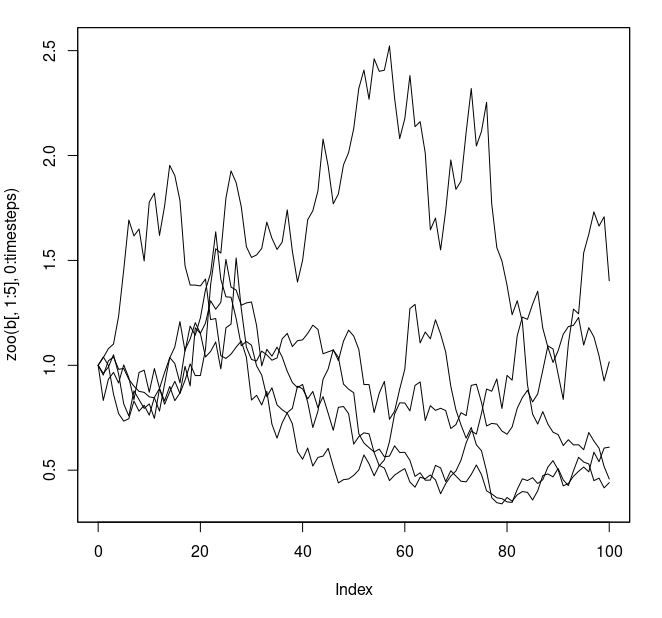
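The function in the question grows `sim` with `c()` inside the inner loop and grows `sim_data` with `rbind()` inside the outer loop, so the objects are copied again on every iteration. Below is a minimal sketch of a vectorised alternative, assuming arithmetic Brownian motion is what is wanted. The name `brownian_motion_sketch` and its argument names are hypothetical, and it returns a plain base R data.frame rather than the tibble with attributes from the question.

```r
# Hypothetical helper; base R only, arithmetic Brownian motion assumed.
brownian_motion_sketch <- function(time = 100, num_sims = 10, delta_time = 1,
                                   initial_value = 0) {
  n_steps <- as.integer(round(time / delta_time))

  # Draw every increment in one call: one column per simulation.
  increments <- matrix(
    rnorm(n_steps * num_sims, mean = 0, sd = sqrt(delta_time)),
    nrow = n_steps, ncol = num_sims
  )

  # Each path is the running sum of its increments, with a leading zero row,
  # shifted by the starting value.
  paths <- rbind(0, apply(increments, 2, cumsum)) + initial_value

  # Build the long-format result once instead of calling rbind() per simulation.
  # as.vector() unrolls the matrix column by column, i.e. simulation by simulation.
  data.frame(
    sim_number = factor(rep(seq_len(num_sims), each = n_steps + 1)),
    t = rep(seq(0, by = delta_time, length.out = n_steps + 1), times = num_sims),
    y = as.vector(paths)
  )
}

out <- brownian_motion_sketch(time = 10, num_sims = 25)
```

If a tibble is needed, the result can be passed through `dplyr::as_tibble()` and the attributes set afterwards, as in the original function.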

As suggested in the comments, if you want speed, you should use cumsum. You need to be clear what type of Brownian Motion you want (arithmetic, geometric). For geometric Brownian motion, you'll need to correct the approximation error by adjusting the mean. As an example, the