A project I've been working on for the past few months calculates the top-surface area of objects captured from a top view with a 3D depth camera.

Workflow of my project:

- Capture an image (RGB and depth data) of a group of objects from a top view.

- Run instance segmentation on the RGB image.

- Calculate the real area of each segmented mask using the depth data.
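The last workflow step can be sketched as follows. This is a minimal illustration, not the poster's actual code: it assumes a pinhole camera with focal lengths `fx` and `fy` in pixels (hypothetical names), depth values in metres, and surfaces that face the camera head-on. Under those assumptions a pixel at depth `Z` covers roughly `(Z / fx) * (Z / fy)` square metres.

```python
def mask_area_fronto_parallel(depth, mask, fx, fy):
    """Sum per-pixel footprints (Z/fx) * (Z/fy) over the masked pixels.

    depth: rows of depth values in metres (assumed unit).
    mask:  rows of booleans, True where the pixel belongs to the object.
    fx, fy: focal lengths in pixels (assumed to come from calibration).
    """
    area = 0.0
    for depth_row, mask_row in zip(depth, mask):
        for z, inside in zip(depth_row, mask_row):
            if inside and z > 0:  # z <= 0 is treated as a missing reading
                area += (z / fx) * (z / fy)
    return area


depth = [[1.0, 1.0], [1.0, 0.0]]
mask = [[True, True], [True, True]]
area = mask_area_fronto_parallel(depth, mask, fx=100.0, fy=100.0)
```

Because every masked pixel is counted as if it faced the camera, side pixels near the image edge add to the total, which is the overestimate described in this question.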

Some problems with the project:

- The objects all have different shapes.

- As an object moves toward the edge of the image, its side, not just its top, becomes visible.

- Because of this, the segmented mask area gradually increases.

- As a result, the computed area of an object near the edge of the image is larger than that of an object at the center.

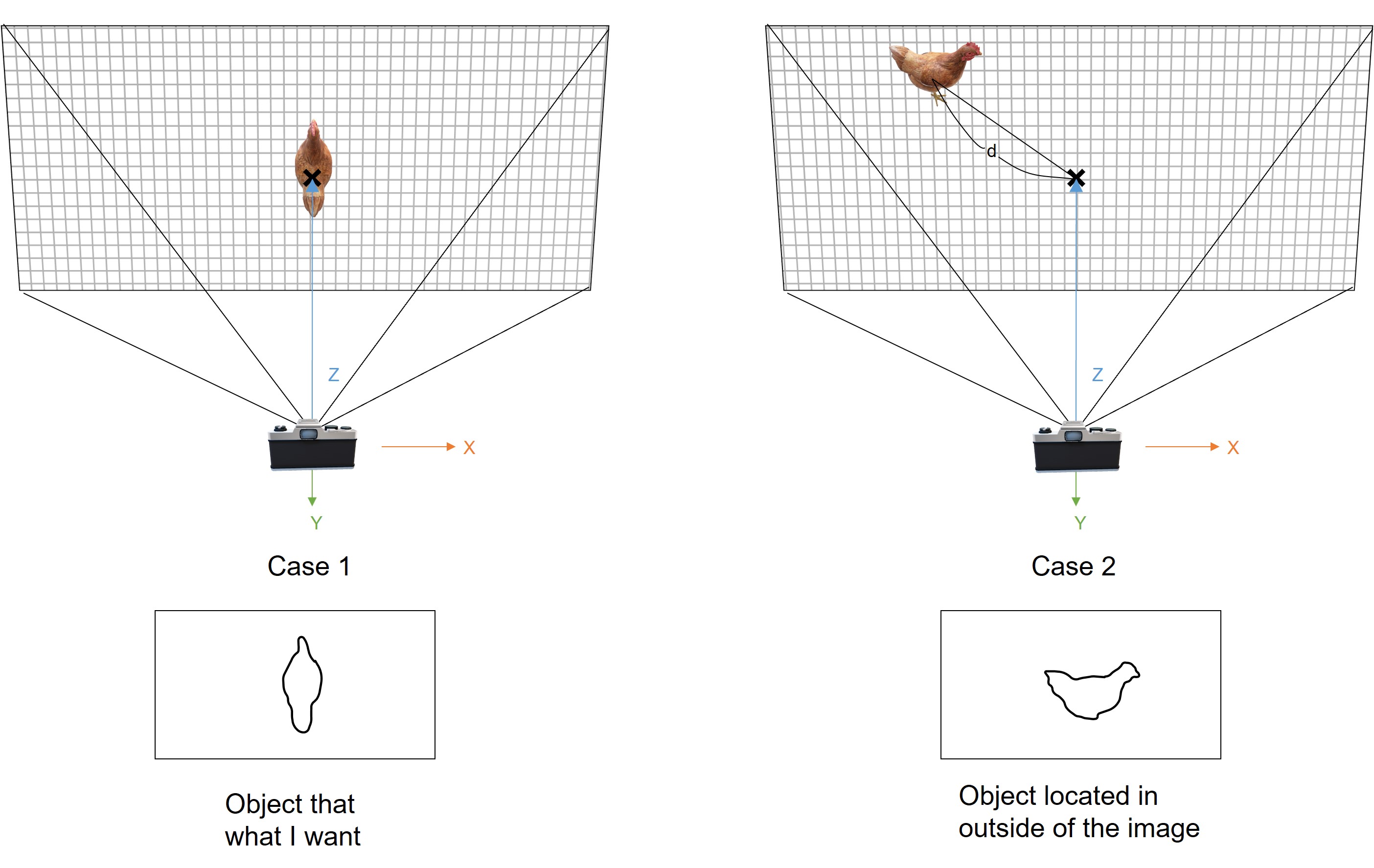

Example image of the problem:

In the example image, object 1 is in the middle of the field of view, so only its top is visible, but object 2 is near the edge of the field of view, so part of its top is lost and its side is visible.

Because of this, the segmented mask area is larger for objects on the periphery than for objects in the center.

I only want to find the area of the top of an object.

Example image of what I want:

Is there a way to geometrically correct the area of an object located near the edge of the image?

I tried to calibrate by multiplying the calculated area by a specific value that depends on the angle between Vector 1, which connects the center of the camera lens to the center point of the floor, and Vector 2, which connects the center of the lens to the center of gravity of the target object. However, I gave up because I couldn't logically explain how much correction was needed.
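For reference, the angle between the two vectors can be computed as in the sketch below. The coordinates are made-up values in the camera frame, and the function only returns the angle. It does not say how much correction to apply, which is the open question here.

```python
import math


def angle_between(v1, v2):
    """Return the angle in radians between two 3D vectors."""
    dot = sum(a * b for a, b in zip(v1, v2))
    norm1 = math.sqrt(sum(a * a for a in v1))
    norm2 = math.sqrt(sum(b * b for b in v2))
    # Clamp to [-1, 1] so rounding error cannot push acos out of its domain.
    cos_theta = max(-1.0, min(1.0, dot / (norm1 * norm2)))
    return math.acos(cos_theta)


# Hypothetical example: camera 1 m above the floor, looking straight down.
vector_1 = (0.0, 0.0, 1.0)  # lens center -> floor center
vector_2 = (1.0, 0.0, 1.0)  # lens center -> object centroid (made-up position)
theta = angle_between(vector_1, vector_2)
```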

CodePudding user response:

What I would do is convert your RGB and depth images to a 3D mesh (a surface with bumps) using your camera settings (FOVs, focal length), something like this:

and then project it onto the ground plane (perpendicular to the camera view direction at the middle of the screen).

That should eliminate the problem of sides being visible at the edges of the FOV. However, you should also take into account that when a side is showing, part of the top is hidden. To remedy that, you might find the axis of symmetry, use only the half of the top that is not partially hidden, and multiply the measured half area by 2.
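A rough sketch of this idea, using a point cloud instead of a full mesh, might look like the following. Several things here are assumptions rather than part of the answer: a pinhole camera with intrinsics `fx`, `fy`, `cx`, `cy` (hypothetical names), a camera looking straight down so that dropping Z is the projection onto the ground plane, a grid-cell count as the area estimate, and a symmetry axis that is already known.

```python
import math


def backproject(depth, mask, fx, fy, cx, cy):
    """Turn masked depth pixels into camera-frame (X, Y, Z) points."""
    points = []
    for v, (depth_row, mask_row) in enumerate(zip(depth, mask)):
        for u, (z, inside) in enumerate(zip(depth_row, mask_row)):
            if inside and z > 0:  # skip missing depth readings
                points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points


def footprint_area(points, cell):
    """Drop Z (orthographic projection) and count occupied grid cells."""
    occupied = {(math.floor(x / cell), math.floor(y / cell)) for x, y, _ in points}
    return len(occupied) * cell * cell


def mirrored_area(points, cell, axis_point, axis_dir):
    """Keep the points on one side of a symmetry axis and double their area.

    axis_point, axis_dir: a 2D point on the axis and its direction, both
    assumed to be supplied by the caller (finding the axis is not shown).
    """
    ax, ay = axis_point
    dx, dy = axis_dir
    kept = [p for p in points if (p[0] - ax) * dy - (p[1] - ay) * dx >= 0]
    return 2.0 * footprint_area(kept, cell)


# Toy 4x4 depth patch at 1 m, principal point at the patch center.
depth = [[1.0] * 4 for _ in range(4)]
mask = [[True] * 4 for _ in range(4)]
points = backproject(depth, mask, fx=100.0, fy=100.0, cx=1.5, cy=1.5)
full = footprint_area(points, cell=0.01)
half_doubled = mirrored_area(points, 0.01, axis_point=(0.0, 0.0), axis_dir=(0.0, 1.0))
```

Points on a near-vertical side land on almost the same (X, Y) cells as the edge of the top, so they add little to the footprint. The cell size should be close to the point spacing (about `Z / fx`); a much smaller cell leaves holes and undercounts.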

CodePudding user response:

Accurate computation is virtually hopeless, because you don't see all sides.

Assuming your depth information is available as a range image, you can take the points inside the segmentation mask of a single chicken, estimate the vertical direction at that point, then rotate and project the points to obtain the silhouette.

But since part of the surface is occluded, you may have to reconstruct it using symmetry.
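The rotate-and-project step could be sketched as below. It assumes the vertical direction has already been estimated (for example from the floor plane) and that the 3D points of one mask are available. The silhouette area is approximated by the convex hull of the projected points, which is a simplification that overestimates concave shapes. All function names are illustrative.

```python
import math


def _dot3(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _cross3(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _unit(v):
    n = math.sqrt(_dot3(v, v))
    return (v[0] / n, v[1] / n, v[2] / n)


def project_along(points, vertical):
    """Express points in a 2D basis perpendicular to the vertical direction."""
    n = _unit(vertical)
    # Pick a helper axis that is not nearly parallel to the vertical.
    helper = (1.0, 0.0, 0.0) if abs(n[0]) < 0.9 else (0.0, 1.0, 0.0)
    e1 = _unit(_cross3(n, helper))
    e2 = _cross3(n, e1)
    return [(_dot3(p, e1), _dot3(p, e2)) for p in points]


def convex_hull(pts):
    """Andrew's monotone chain; returns hull vertices in order."""
    pts = sorted(set(pts))
    if len(pts) < 3:
        return pts

    def turn(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and turn(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and turn(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def polygon_area(poly):
    """Shoelace formula for a simple polygon."""
    total = 0.0
    for (x1, y1), (x2, y2) in zip(poly, poly[1:] + poly[:1]):
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


def silhouette_area(points, vertical):
    return polygon_area(convex_hull(project_along(points, vertical)))


# Made-up points: a unit square footprint with varying heights.
pts = [(0, 0, 1), (1, 0, 1), (1, 1, 2), (0, 1, 2), (0.5, 0.5, 1.5)]
area = silhouette_area(pts, vertical=(0.0, 0.0, 1.0))
```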