I made linear regression using scikit learn

when I see my mean squared error on the test data then it's very low (0.09)

when I see my r2_score on my test data then it's also very less (0.05)

as per i know when mean squared error is low that present model is good but r2_score is very less that tells us model is not good

I don't understand that my regression model is good or not

Can a good model has a low R square value or can a bad model has a low mean square error value?

CodePudding user response:

R^2 is measure of, how good your fit is representing the data.

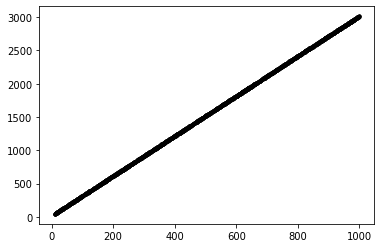

Let's say your data has a linear trend and some noise on it. We can construct the data and see how the R^2 is changing:

Data

I'm going to create some data using numpy:

xs = np.random.randint(10, 1000, 2000)

ys = (3 * xs 8) np.random.randint(5, 10, 2000)

Fit

Now we can create a fit object usinh scikit

reg = LinearRegression().fit(xs.reshape(-1, 1), ys.reshape(-1, 1))

And we can get the score from this fit.

reg.score(xs.reshape(-1, 1), ys.reshape(-1, 1))

My R^2 was: 0.9999971914416896

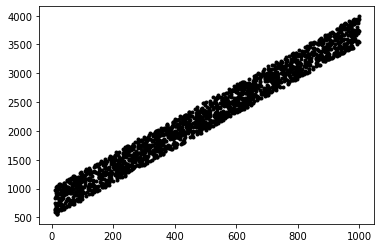

Bad data

Let's say we have a set of more scattered data (have more noise on it).

ys2 = (3 * xs 8) np.random.randint(500, 1000, 2000)

Now we can calculate the score of the ys2 to understand how good our fit represent the xs, ys2 data:

reg.score(xs.reshape(-1, 1), ys2.reshape(-1, 1))

My R^2 was: 0.2377175028951054

The score is low. we know the trend of the data did not change. It still is 3x 8 (noise). But ys2 are further away from the fit.

So, R^2 is an inductor of how good your fit is representing the data. But the condition of the data itself is important. Maybe even with low score the best possible fit is what you get. Since the data is scattered due to noise.