In the code below, when I change the declaration of `isuit` from `char` to `int`, the result differs.

I thought `int` and `char` are essentially the same, so I cannot figure out why.

```cpp
#include <iostream>
#include <cstdio>

using namespace std;

int main()
{
    int n, irank;
    int cards[4][13] = {};  // cards[suit][rank - 1] becomes nonzero once that card is read
    char isuit;             // changing this to int changes the output

    cin >> n;
    for (int i = 0; i < n; i++) {
        cin >> isuit >> irank;
        // Mark the card that was just read.
        switch (isuit)
        {
        case 'S':
            cards[0][irank - 1]++;
            break;
        case 'H':
            cards[1][irank - 1]++;
            break;
        case 'C':
            cards[2][irank - 1]++;
            break;
        case 'D':
            cards[3][irank - 1]++;
            break;
        }
    }

    // Print every card that was never marked, i.e. the missing cards.
    for (int i = 0; i < 4; i++) {
        for (int j = 0; j < 13; j++) {
            if (!cards[i][j]) {
                switch (i)
                {
                case 0:
                    cout << "S" << " " << j + 1 << endl;
                    break;
                case 1:
                    cout << "H" << " " << j + 1 << endl;
                    break;
                case 2:
                    cout << "C" << " " << j + 1 << endl;
                    break;
                case 3:
                    cout << "D" << " " << j + 1 << endl;
                    break;
                }
            }
        }
    }

    return 0;
}
```

Example input:

47 S 10 S 11 S 12 S 13 H 1 H 2 S 6 S 7 S 8 S 9 H 6 H 8 H 9 H 10 H 11 H 4 H 5 S 2 S 3 S 4 S 5 H 12 H 13 C 1 C 2 D 1 D 2 D 3 D 4 D 5 D 6 D 7 C 3 C 4 C 5 C 6 C 7 C 8 C 9 C 10 C 11 C 13 D 9 D 10 D 11 D 12 D 13

Output when `isuit` is `char`:

S 1 H 3 H 7 C 12 D 8

Output when `isuit` is `int`:

S 1 S 2 S 3 S 4 S 5 S 6 S 7 S 8 S 9 S 10 S 11 S 12 S 13 H 1 H 2 H 3 H 4 H 5 H 6 H 7 H 8 H 9 H 10 H 11 H 12 H 13 C 1 C 2 C 3 C 4 C 5 C 6 C 7 C 8 C 9 C 10 C 11 C 12 C 13 D 1 D 2 D 3 D 4 D 5 D 6 D 7 D 8 D 9 D 10 D 11 D 12 D 13

Answer:

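Think of data types like ice cream, where you can choose a size and a flavor.

For flavors, you have two choices: signed and unsigned.

For sizes, you have a range from 1 byte to 8 bytes. The fixed-width integer types spell this out: `uint8_t`, `uint16_t`, `uint32_t`, `uint64_t` (and the signed `int8_t` to `int64_t`).

So the difference between `int` and `char` is their size and their signedness: `char` is always 1 byte, while `int` is typically 4 bytes; and `int` is always signed, while plain `char` may be signed or unsigned depending on the platform. Google "C++ data types" for more info on these two differences.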

Note that there is even more complexity in how each data type is interpreted when it comes to byte encoding and endianness, but let's ignore that for now.

Here are some resources discussing the difference between `char` and `int`:

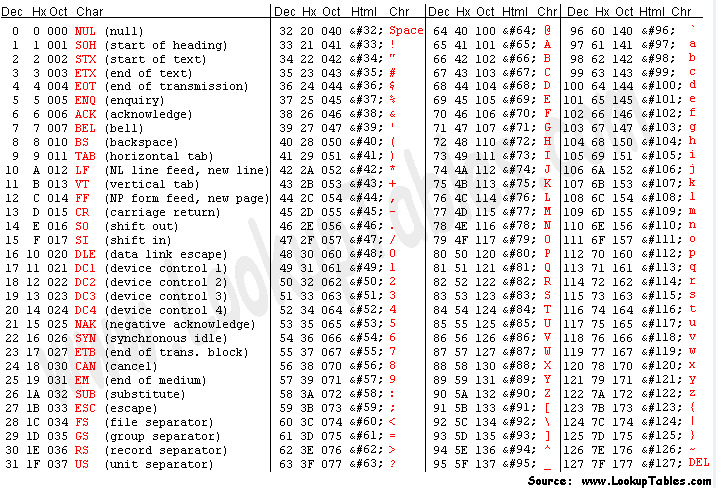

The character `'1'` is encoded as, and interpreted by the computer as, the integer value 49. So really everything is interpreted as a number, even characters! It may help to think of it as: "Everything is a number, because everything is a series of bits, and bits are just binary numbers." ==> Everything is a number!

Now back to your problem. By changing the type, you are telling the computer to interpret the value read by `std::cin` very differently. Remember that `1 != '1'`:

- `int` ==> a number (`1`)

- `char` ==> the ASCII table interpretation (`'1'`)

Answer:

In the line `cin >> isuit >> irank;`, `isuit` can only read text input if it is a `char`. If it is an `int`, it expects digits (in your case, you would have to type the ASCII codes of the letters, e.g. `83` instead of `S`).

Answer:

PMF gives you a little info, but it's not sufficient. It has to do with the input method.

`char ch; cin >> ch;` and `int a; cin >> a;` do NOT do the same thing. When you read into a `char`, `cin` grabs a single character (after skipping whitespace) and copies it into your variable directly. When you read into an `int`, `cin` grabs a sequence of digits and then converts those characters into an `int`. In effect, it's much like grabbing a string and then calling `std::stoi` on those characters.

So if you do `char ch; cin >> ch;` and your input has a `1`, then you get the ASCII representation of the character `'1'` in your variable. This is NOT the same as the integer `1`.

That is, you get 49, but when printed as a character, it prints '1'.