I am working with a nested JSON file that contains arrays on several levels that I need to explode. I have noticed that the values in a column I created changed after I exploded an array that is on the same level as the value I wanted to store in that column. Here is an example to illustrate:

jsonDF = jsonDF.withColumn("values_level1", explode("data.values"))

.withColumn("name_level1", col("values_level1.name"))

Up to here everything is fine: column "name_level1" contains certain values that I want to filter for at a later stage. The problem begins when I continue with

.withColumn("values_level2", explode("values_level1.values"))

because now the values I had in column "name_level1" have changed and I no longer find the values I want to filter for later.

Is this expected behavior that I just haven't understood conceptually yet (I am rather new to Spark...)? Can I somehow "conserve" the original values in "name_level1"?

Thanks a lot in advance!

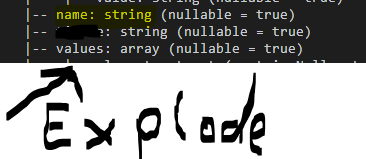

edit: I am adding a picture that might help to understand my problem:

Why does "name" change when I explode "values"?

CodePudding user response:

I talked to a colleague and he provided a solution that worked. Using

.withColumn("values_level2", explode_outer("values_level1.values"))

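keeps the values I need in column "name_level1". The likely reason: explode drops every row whose array is null or empty, so the "name_level1" values on those rows disappear from the result, whereas explode_outer keeps such rows and puts null in the new column.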
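
To make the difference concrete, here is a small plain-Python model of how the two functions treat rows. It is only a sketch of the row semantics, not Spark code: the helpers `explode_rows` and `explode_outer_rows` and the sample rows are made up for illustration, and the assumption is that some "values_level1" entries have a null or empty "values" array.

```python
# Plain-Python model of the row semantics (hypothetical helpers, not the Spark API).

def explode_rows(rows, array_key, out_key):
    """Model of explode: one output row per array element.
    Rows whose array is None or empty produce no output rows."""
    result = []
    for row in rows:
        for element in row.get(array_key) or []:
            result.append({**row, out_key: element})
    return result


def explode_outer_rows(rows, array_key, out_key):
    """Model of explode_outer: same as above, but a row whose array is
    None or empty is kept once, with None in the new column."""
    result = []
    for row in rows:
        for element in row.get(array_key) or [None]:
            result.append({**row, out_key: element})
    return result


# Made-up sample data: "b" has an empty array, "c" has a null array.
rows = [
    {"name_level1": "a", "values": [1, 2]},
    {"name_level1": "b", "values": []},
    {"name_level1": "c", "values": None},
]

inner_names = sorted({r["name_level1"] for r in explode_rows(rows, "values", "values_level2")})
outer_names = sorted({r["name_level1"] for r in explode_outer_rows(rows, "values", "values_level2")})

print(inner_names)  # only names whose array had at least one element
print(outer_names)  # every name survives
```

In this model the names "b" and "c" vanish after the plain explode, which matches the symptom in the question: the values in "name_level1" are not rewritten, the rows carrying them are removed.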