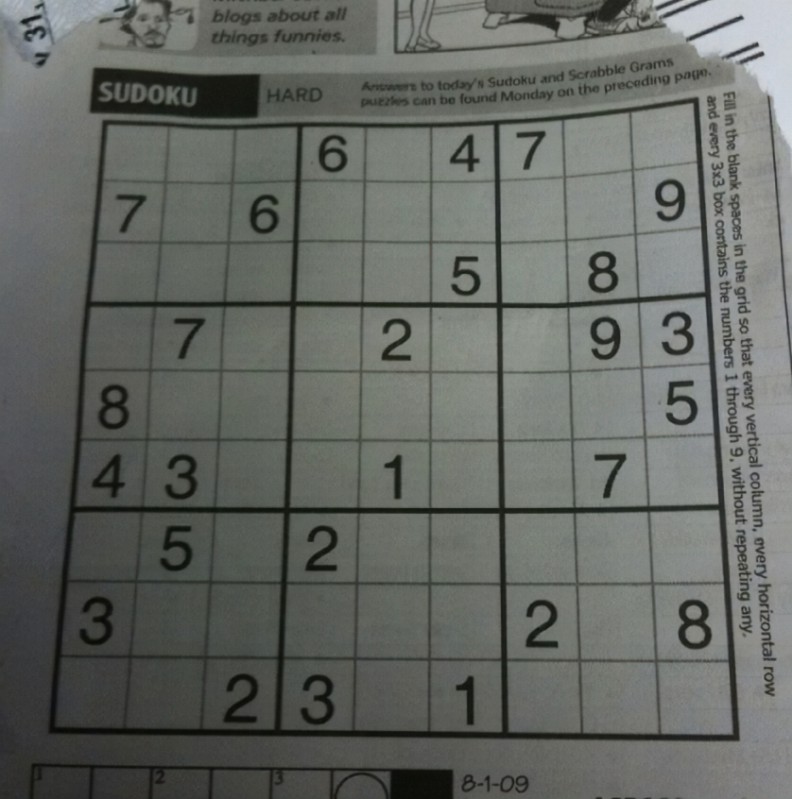

I am working on a project that recognizes a sudoku puzzle in an image and then solves it. I am working on the image recognition part right now. It was working fine until I realized that the whole output was flipped on the y axis, so I replaced

dimensions = np.array([[0, 0], [width, 0], [width, height], [0, height]], dtype = "float32")

with

dimensions = np.array([[width, 0], [0, 0], [0, height], [width, height]], dtype = "float32")

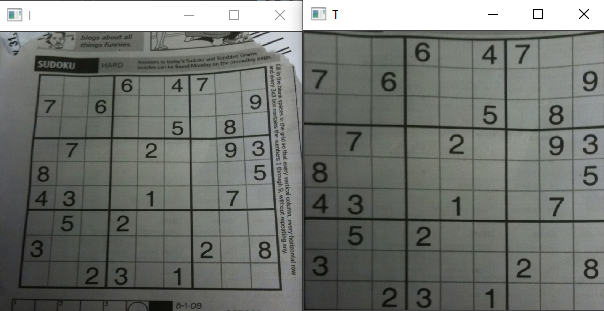

This seemed to change everything: now when I run it, I just get a grey image. Here is my code. Please note that I am fairly new to OpenCV. Also, I draw grid lines in my code, and when I run it the lines still appear. Only the actual image doesn't appear.

#Imports
import cv2 as cv
import numpy as np
import math

#Load image
img = cv.imread('sudoku_test_image.jpeg')

#Transforms perspective
def perspectiveTransform(img, corners):

    def orderCornerPoints(corners):
        #Corners separated into their own points
        #Index 0 = top-right
        #      1 = top-left
        #      2 = bottom-left
        #      3 = bottom-right

        #Corners to points
        corners = [(corner[0][0], corner[0][1]) for corner in corners]

        add = np.sum(corners)
        top_l = corners[np.argmin(add)]
        bottom_r = corners[np.argmax(add)]

        diff = np.diff(corners, 1)
        top_r = corners[np.argmin(diff)]
        bottom_l = corners[np.argmax(diff)]

        return (top_r, top_l, bottom_l, bottom_r)

    ordered_corners = orderCornerPoints(corners)
    top_r, top_l, bottom_l, bottom_r = ordered_corners

    #Find width of new image (Using distance formula)
    width_A = np.sqrt(((bottom_r[0] - bottom_l[0]) ** 2) + ((bottom_r[1] - bottom_l[1]) ** 2))
    width_B = np.sqrt(((top_r[0] - top_l[0]) ** 2) + ((top_r[1] - top_l[1]) ** 2))
    width = max(int(width_A), int(width_B))

    #Find height of new image (Using distance formula)
    height_A = np.sqrt(((top_r[0] - bottom_r[0]) ** 2) + ((top_r[1] - bottom_r[1]) ** 2))
    height_B = np.sqrt(((top_l[0] - bottom_l[0]) ** 2) + ((top_l[1] - bottom_l[1]) ** 2))
    height = max(int(height_A), int(height_B))

    #Make top down view
    #Order: top-right, top-left, bottom-left, bottom-right
    dimensions = np.array([[width, 0], [0, 0], [0, height], [width, height]], dtype = "float32")

    #Make ordered_corners var numpy format
    ordered_corners = np.array(ordered_corners, dtype = 'float32')

    #Transform the perspective
    m = cv.getPerspectiveTransform(ordered_corners, dimensions)
    return cv.warpPerspective(img, m, (width, height))

#Processes image (Grayscale, median blur, adaptive threshold)
def processImage(img):
    gray = cv.cvtColor(img, cv.COLOR_BGR2GRAY)
    blur = cv.medianBlur(gray, 3)
    thresh = cv.adaptiveThreshold(blur, 255, cv.ADAPTIVE_THRESH_GAUSSIAN_C, cv.THRESH_BINARY_INV, 11, 3)
    return thresh

#Find and sort contours
img_processed = processImage(img)
cnts = cv.findContours(img_processed, cv.RETR_EXTERNAL, cv.CHAIN_APPROX_SIMPLE)
cnts = cnts[0] if len(cnts) == 2 else cnts[1]
cnts = sorted(cnts, key=cv.contourArea, reverse=True)

#Perform perspective transform
peri = cv.arcLength(cnts[0], True)
approx = cv.approxPolyDP(cnts[0], 0.01 * peri, True)
transformed = perspectiveTransform(img, approx)

#Draw lines
height = transformed.shape[0]
width = transformed.shape[1]

#for vertical lines
line_x = 0
x_increment_val = round((1/9) * width)

#for horizontal lines
line_y = 0
y_increment_val = round((1/9) * height)

#vertical lines
for i in range(10):
    cv.line(transformed, (line_x, 0), (line_x, height), (0, 0, 255), 1)
    line_x += x_increment_val

#horizontal lines
for i in range(10):
    cv.line(transformed, (0, line_y), (width, line_y), (0, 0, 255), 1)
    line_y += y_increment_val

#Show image
cv.imshow('Sudoku', transformed)
cv.waitKey(0)
cv.destroyAllWindows()

CodePudding user response:

It seems that the input corners are wrongly calculated. Within your perspectiveTransform function, you have the following snippet that apparently calculates the four corners of the Sudoku puzzle:

# Corners to points
corners = [(corner[0][0], corner[0][1]) for corner in corners]

add = np.sum(corners)
top_l = corners[np.argmin(add)]
bottom_r = corners[np.argmax(add)]

diff = np.diff(corners, 1)
top_r = corners[np.argmin(diff)]
bottom_l = corners[np.argmax(diff)]

return (top_r, top_l, bottom_l, bottom_r)

Check the (top_r, top_l, bottom_l, bottom_r) tuple: those coordinates are wrong. I don't know what you intend after computing corners, but the top_l, bottom_r, top_r and bottom_l calculations definitely have issues. If you hard-code the tuple like this:

ordered_corners = [(697, 99), (108, 121), (52, 730), (735, 730)] # orderCornerPoints(corners)

so that it holds the actual corners of the puzzle (starting at the top right, going anti-clockwise), then your transformation is correct.
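A likely cause, offered as an assumption since the answer does not pinpoint it: `np.sum(corners)` called without an `axis` argument collapses all eight coordinates into a single scalar, so `np.argmin(add)` and `np.argmax(add)` both return 0, and `top_l` and `bottom_r` end up as the same point. Below is a minimal sketch of the ordering with per-point sums and differences. The name `order_corner_points` is illustrative, the test data are the hard-coded corners from above, and the sketch assumes the contour approximation yields exactly four points.

```python
import numpy as np

def order_corner_points(corners):
    """Return [top_r, top_l, bottom_l, bottom_r] from four (x, y) points.

    Accepts either a list of (x, y) pairs or an array shaped (4, 1, 2),
    which is the shape cv.approxPolyDP produces for a quadrilateral.
    """
    pts = np.array(corners, dtype="float32").reshape(4, 2)

    # x + y per point: smallest at top-left, largest at bottom-right.
    add = pts.sum(axis=1)
    top_l = pts[np.argmin(add)]
    bottom_r = pts[np.argmax(add)]

    # y - x per point: smallest at top-right, largest at bottom-left.
    diff = np.diff(pts, axis=1).ravel()
    top_r = pts[np.argmin(diff)]
    bottom_l = pts[np.argmax(diff)]

    return np.array([top_r, top_l, bottom_l, bottom_r], dtype="float32")

# The same four corners as the hard-coded tuple, deliberately shuffled.
shuffled = [(52, 730), (697, 99), (735, 730), (108, 121)]
ordered = order_corner_points(shuffled)
print(ordered.tolist())
```

The returned array is already `float32`, so it could be passed straight to `cv.getPerspectiveTransform` together with `dimensions`, provided both lists use the same top-right, top-left, bottom-left, bottom-right order.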

Advice: when you hit a bug, step through the program in a debugger and check the actual values of the variables and the intermediate calculations.
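In the same spirit, a small sanity check placed just before the call to `cv.getPerspectiveTransform` would flag a degenerate corner set early. This is only a sketch: `check_corners` is a hypothetical helper, not part of OpenCV, and it only verifies that there are four distinct points.

```python
def check_corners(ordered_corners):
    """Raise ValueError unless the input is four distinct (x, y) points."""
    points = [tuple(float(v) for v in p) for p in ordered_corners]
    if len(points) != 4:
        raise ValueError(f"expected 4 corners, got {len(points)}")
    if len(set(points)) != 4:
        raise ValueError(f"corners are not distinct: {points}")
    return points

# The hard-coded corners pass the check.
good = check_corners([(697, 99), (108, 121), (52, 730), (735, 730)])

# A degenerate result (two identical corners) is rejected.
try:
    check_corners([(697, 99), (108, 121), (52, 730), (108, 121)])
    degenerate_rejected = False
except ValueError:
    degenerate_rejected = True

print(good, degenerate_rejected)
```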