I am working on a DICOM viewer project that combines PET and CT.

Progress so far:

Resize the smaller of the two DICOM images to match the larger one.

Change the color of one of the DICOM images.

Merge the two DICOM images.

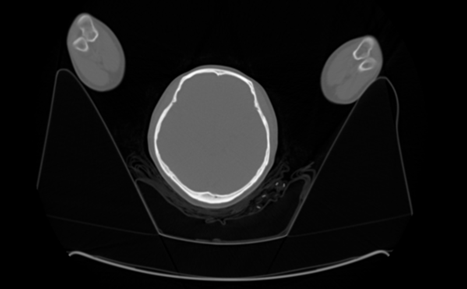

Example DICOM image (bitmap1)

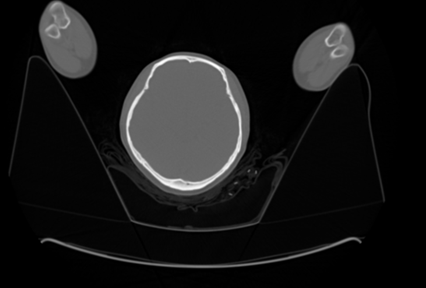

bitmap2 and newBitmap (bitmap2 with its color changed to red)

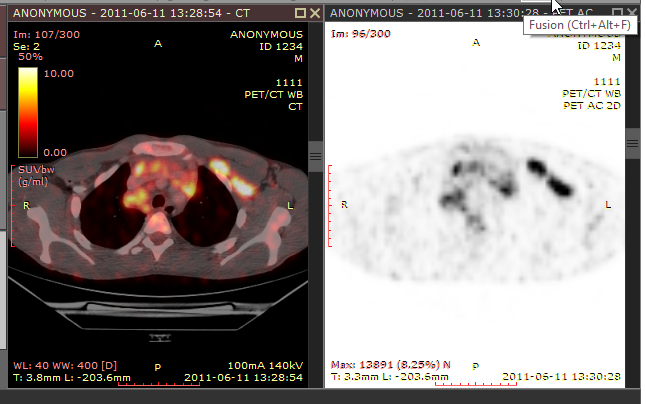

The result image I am aiming for

```csharp
private void button1_Click(object sender, EventArgs e)
{
    string ima_path = Environment.GetFolderPath(Environment.SpecialFolder.MyDocuments);
    var bitmap1 = dicomImageViewControls[0].DicomElements[0].Bitmap;
    var bitmap2 = dicomImageViewControls[1].DicomElements[0].Bitmap;

    if (bitmap1.Width < bitmap2.Width)
    {
        bitmap1 = resizeImage(bitmap1, bitmap2.Width, bitmap2.Height);
        bitmap1.Save(ima_path + "\\res.png", ImageFormat.Png);
    }
    else
    {
        bitmap2 = resizeImage(bitmap2, bitmap1.Width, bitmap1.Height);
        bitmap2.Save(ima_path + "\\res.png", ImageFormat.Png);
    }

    Color actualColor;
    Bitmap newBitmap = new Bitmap(bitmap2.Width, bitmap2.Height);
    for (int i = 0; i < bitmap2.Width; i++)
    {
        for (int j = 0; j < bitmap2.Height; j++)
        {
            actualColor = bitmap2.GetPixel(i, j);
            float a = actualColor.A;
            float r = actualColor.R;
            newBitmap.SetPixel(i, j, Color.FromArgb((int)a, (int)r, 0, 0));
        }
    }

    var target = new Bitmap(newBitmap.Width, newBitmap.Height, PixelFormat.Format32bppArgb);
    var graphics = Graphics.FromImage(target);
    graphics.CompositingMode = CompositingMode.SourceOver; // this is the default, but just to be clear
    graphics.DrawImage(newBitmap, 0, 0);
    graphics.DrawImage(bitmap1, 0, 0);
    newBitmap.Save(ima_path + "\\Image2.png", ImageFormat.Png);
}

public static Bitmap resizeImage(Bitmap image, int width, int height)
{
    var destinationRect = new Rectangle(0, 0, width, height);
    var destinationImage = new Bitmap(width, height);
    destinationImage.SetResolution(image.HorizontalResolution, image.VerticalResolution);

    using (var graphics = Graphics.FromImage(destinationImage))
    {
        graphics.CompositingMode = CompositingMode.SourceCopy;
        graphics.CompositingQuality = CompositingQuality.HighQuality;
        using (var wrapMode = new ImageAttributes())
        {
            wrapMode.SetWrapMode(WrapMode.TileFlipXY);
            graphics.DrawImage(image, destinationRect, 0, 0, image.Width, image.Height, GraphicsUnit.Pixel, wrapMode);
        }
    }

    return destinationImage;
}
```

The resulting target bitmap looks just like the first picture.

=======================================

I found another algorithm in ClearCanvas:

```csharp
baseImageGraphicProvider.ImageGraphic.PixelData.ForEachPixel(
    (n, x, y, i) =>
    {
        // and this is why the base and overlay slices must be unsigned precisely coincident
        var patientLocation = baseImageSopProvider.Frame.ImagePlaneHelper.ConvertToPatient(new PointF(x, y));
        var overlayCoordinate = overlayImageSopProvider.Frame.ImagePlaneHelper.ConvertToImagePlane(patientLocation);
        var baseValue = (ushort) baseImageGraphicProvider.ImageGraphic.PixelData.GetPixel(i);
        var overlayValue = overlayImageGraphicProvider.ImageGraphic.PixelData.GetPixel((int) overlayCoordinate.X, (int) overlayCoordinate.Y);

        // the fusion operator: output = underlyingGrey*(1-alpha) + overlayingColour*(alpha) (see DICOM 2009 PS 3.4 N.2.4.3)
        var compositeColor = ToRgbVectorFromGrey(baseValue)*(1 - opacity) + ToRgbVector(colorMap[overlayValue])*opacity;
        pixelData[3*n] = (byte) compositeColor.X;
        pixelData[3*n + 1] = (byte) compositeColor.Y;
        pixelData[3*n + 2] = (byte) compositeColor.Z;
    });
```

I think the key line of this algorithm is:

`var compositeColor = ToRgbVectorFromGrey(baseValue)*(1 - opacity) + ToRgbVector(colorMap[overlayValue])*opacity;`

For each pixel, it converts the base grey value to RGB and scales it by (1 - opacity), adds the overlay color looked up in the color palette (a red palette in my case) scaled by opacity, and returns the result.
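To check that reading of the fusion operator, here is a minimal sketch on plain byte arrays. It is not the ClearCanvas implementation: `FusionSketch` and `FuseWithRedPalette` are hypothetical names, both inputs are assumed to be 8-bit and already on the same pixel grid, and the "red palette" is simplified to value -> (value, 0, 0).

```csharp
using System;

public static class FusionSketch
{
    // Hypothetical helper: output = grey * (1 - opacity) + paletteColour * opacity,
    // written into a packed RGB buffer (3 bytes per pixel).
    public static byte[] FuseWithRedPalette(byte[] baseGrey, byte[] overlay, float opacity)
    {
        if (baseGrey.Length != overlay.Length)
            throw new ArgumentException("Base and overlay must have the same number of pixels.");

        float alpha = Math.Max(0f, Math.Min(1f, opacity)); // keep opacity in [0, 1]
        var rgb = new byte[3 * baseGrey.Length];

        for (int n = 0; n < baseGrey.Length; n++)
        {
            // The grey contribution is identical in all three channels.
            float grey = baseGrey[n] * (1f - alpha);

            rgb[3 * n]     = (byte)(grey + overlay[n] * alpha); // red: grey part plus palette red
            rgb[3 * n + 1] = (byte)grey;                        // green: palette contributes 0
            rgb[3 * n + 2] = (byte)grey;                        // blue: palette contributes 0
        }

        return rgb;
    }
}
```

With opacity 0 the output is the plain grey base image, and with opacity 1 it is the red-mapped overlay alone.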

Answer:

It is likely that bitmap1 lacks an alpha channel, so when it is drawn last it overwrites everything beneath it. Consider making newBitmap completely red and mapping the intensity to the alpha channel instead, i.e. newBitmap.SetPixel(i, j, Color.FromArgb((int)r, 255, 0, 0));, and switch the rendering order so that newBitmap is drawn last.

Also, don't forget to dispose all the created bitmaps and graphics objects.
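A minimal sketch of that suggestion, assuming both bitmaps have already been resized to the same dimensions and that the overlay source is a grey image (so its R channel carries the intensity). `OverlaySketch` and `DrawRedOverlay` are hypothetical names. The overlay uses 255 for the red component so it is fully red, with the intensity stored as alpha, and it is drawn after the base image.

```csharp
using System;
using System.Drawing;
using System.Drawing.Drawing2D;
using System.Drawing.Imaging;

public static class OverlaySketch
{
    // Hypothetical helper: returns a new bitmap with overlaySource drawn in red on top of baseImage.
    // The caller owns (and should dispose) the returned bitmap.
    public static Bitmap DrawRedOverlay(Bitmap baseImage, Bitmap overlaySource)
    {
        if (baseImage.Size != overlaySource.Size)
            throw new ArgumentException("Resize the images to the same size first.");

        var target = new Bitmap(baseImage.Width, baseImage.Height, PixelFormat.Format32bppArgb);

        // 'using' disposes the temporary overlay and the Graphics object when done.
        using (var overlay = new Bitmap(overlaySource.Width, overlaySource.Height, PixelFormat.Format32bppArgb))
        using (var graphics = Graphics.FromImage(target))
        {
            for (int x = 0; x < overlaySource.Width; x++)
            {
                for (int y = 0; y < overlaySource.Height; y++)
                {
                    // Assumption: grey source image, so R holds the intensity.
                    int intensity = overlaySource.GetPixel(x, y).R;
                    // Fully red pixel whose opacity follows the intensity.
                    overlay.SetPixel(x, y, Color.FromArgb(intensity, 255, 0, 0));
                }
            }

            graphics.CompositingMode = CompositingMode.SourceOver;
            graphics.DrawImage(baseImage, 0, 0, target.Width, target.Height); // opaque base first
            graphics.DrawImage(overlay, 0, 0, target.Width, target.Height);   // translucent overlay last
        }

        return target;
    }
}
```

Passing explicit widths and heights to `DrawImage` avoids unintended scaling when the two bitmaps report different resolutions.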

You might also consider writing a custom function for fusing the pixel data, i.e. take the 16-bit values from the two images and use your own function to produce a color value, since this allows far greater flexibility in how to visualize the data. For this to work you need access to the raw 16-bit pixel data, and you will probably have to write your own resize function for that 16-bit data. It would typically also involve some kind of windowing function to map the 16-bit data to 8-bit values.
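As a rough illustration of those two building blocks, the sketch below resizes raw 16-bit data with nearest-neighbour sampling and maps it to 8 bits with a simplified linear window. All names (`RawPixelSketch`, `ResizeNearest`, `ApplyWindow`) are hypothetical, the data is assumed to be a row-major `ushort[]`, and rescale slope/intercept and the exact DICOM window formula are deliberately left out.

```csharp
using System;

public static class RawPixelSketch
{
    // Nearest-neighbour resize of row-major 16-bit pixel data.
    public static ushort[] ResizeNearest(ushort[] source, int srcWidth, int srcHeight, int dstWidth, int dstHeight)
    {
        var result = new ushort[dstWidth * dstHeight];
        for (int y = 0; y < dstHeight; y++)
        {
            int srcY = y * srcHeight / dstHeight; // closest source row (integer division)
            for (int x = 0; x < dstWidth; x++)
            {
                int srcX = x * srcWidth / dstWidth; // closest source column
                result[y * dstWidth + x] = source[srcY * srcWidth + srcX];
            }
        }
        return result;
    }

    // Simplified linear window: values below the window map to 0, values above to 255.
    public static byte[] ApplyWindow(ushort[] raw, double center, double width)
    {
        if (width <= 0) throw new ArgumentOutOfRangeException(nameof(width));

        double low = center - width / 2.0;
        var output = new byte[raw.Length];
        for (int i = 0; i < raw.Length; i++)
        {
            double normalized = (raw[i] - low) / width;            // 0..1 inside the window
            normalized = Math.Max(0.0, Math.Min(1.0, normalized)); // clamp values outside it
            output[i] = (byte)Math.Round(normalized * 255.0);
        }
        return output;
    }
}
```

The windowed 8-bit values from both modalities could then be fed into whatever fusion function you choose.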

You might also need some kind of registration function to accurately combine each dataset, since there is usually no guarantee they will align correctly from just resizing.

You might also want to look at "Fast work with bitmaps" to get acceptable rendering speed, since per-pixel GetPixel and SetPixel calls are slow.
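One common way to speed this up is `Bitmap.LockBits`, which gives direct access to the pixel buffer. The sketch below is only an illustration under stated assumptions: `FastBitmapSketch` and `MapIntensityToRedAlpha` are hypothetical names, the bitmap is assumed to be `Format32bppArgb` with a positive stride (top-down layout), and the source is assumed to be grey so the R channel holds the intensity.

```csharp
using System.Drawing;
using System.Drawing.Imaging;
using System.Runtime.InteropServices;

public static class FastBitmapSketch
{
    // Rewrites every pixel in place as a fully red pixel whose alpha is the old intensity.
    public static void MapIntensityToRedAlpha(Bitmap bitmap)
    {
        var rect = new Rectangle(0, 0, bitmap.Width, bitmap.Height);
        BitmapData data = bitmap.LockBits(rect, ImageLockMode.ReadWrite, PixelFormat.Format32bppArgb);
        try
        {
            // Copy the whole pixel buffer into managed memory in one call.
            var buffer = new byte[data.Stride * data.Height];
            Marshal.Copy(data.Scan0, buffer, 0, buffer.Length);

            // Format32bppArgb stores each pixel as B, G, R, A with no row padding.
            for (int i = 0; i < buffer.Length; i += 4)
            {
                byte intensity = buffer[i + 2]; // old red channel
                buffer[i] = 0;                  // blue
                buffer[i + 1] = 0;              // green
                buffer[i + 2] = 255;            // red
                buffer[i + 3] = intensity;      // alpha
            }

            // Write the modified pixels back to the bitmap.
            Marshal.Copy(buffer, 0, data.Scan0, buffer.Length);
        }
        finally
        {
            bitmap.UnlockBits(data); // always release the lock
        }
    }
}
```

This replaces the nested GetPixel/SetPixel loop with two bulk copies and a single pass over a byte array.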