I have 100(ish) PDFs that I've copied into 88 different folders. I'm now left with one PDF, and I think I may have accidentally copied one PDF into two folders.

I tried writing a script that runs through all of the folders and checks whether a file already exists in another folder. However, I think I may have gone down a rabbit hole with the code:

$ParentFolder = Get-ChildItem -Path "\\pc90555\c$\Users\mmdu\OneDrive - COWI\Documents\kort2021"
$childItem = @()

Foreach ($subFolders in $ParentFolder){
    $childItem = Get-ChildItem -Path "\\pc90555\c$\Users\mmdu\OneDrive - COWI\Documents\kort2021\$subFolders"
    foreach ($item in $childItem)
    {
        $itemPath = Get-ChildItem ($item.DirectoryName "\" $item)
        foreach ($items in $itemPath)
        {
            if(Test-Path -Path $childItem)
            {
                $exists = $items.DirectoryName
                write-host "$items exist in $exists"
            }
        }
    }
}

In the end, I want to take one single file from each of the folders, check it against all the folders, and have the script tell me whether the file is already in another folder.

I'd appreciate any help in this regard!

CodePudding user response:

Here's a simple way to do this, broken into steps:

- Recursively scan through the directory tree for .pdf files
- Check the results of that to see if it contains your file where it shouldn't be

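$myFileName = "ProposalDraft-Final.FINALv2.pdf"
$directoryToSearch = "\\pc90555\c$\Users\mmdu\OneDrive - COWI\"
# -Recurse walks every subfolder; -Include only takes effect with -Recurse or a wildcard path
$pdfFiles = Get-ChildItem -Path $directoryToSearch -Recurse -Include *.pdf -File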

This leaves you with an array in $pdfFiles which has all the PDFs. This is useful because it will have the full FileInfo, including the path, size, extension and other useful info.

Now to see if you put your file in too many places!

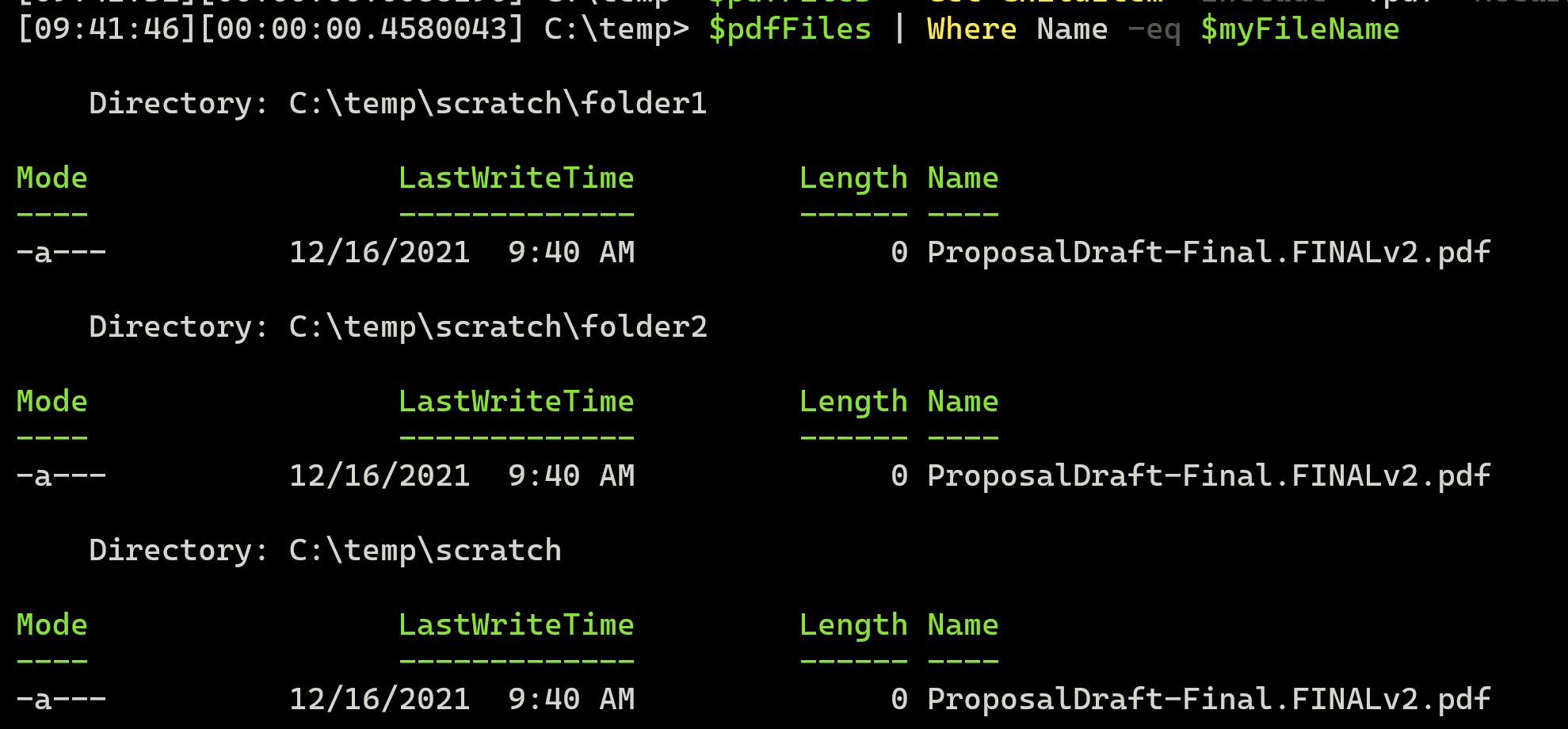

$pdfFiles | Where Name -eq $myFileName

The output will be an easy-to-read list of every location where a file with that name was found.

In this example screenshot, I made a few directories and placed files with your desired name in each. You can see how the results are sorted by which directory they appear in, making it easy for you to find if there are duplicates.
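The filter above requires knowing the file name in advance, which the question suggests is not the case. As a minimal sketch of the same idea, the files can be grouped by name so that any name appearing in more than one folder is reported. The function name `Get-DuplicateFileName` and its parameters are hypothetical, and the sketch assumes the duplicated copy kept its original file name.

```powershell
# Hypothetical helper: reports every file name that occurs in more than one folder.
function Get-DuplicateFileName {
    param(
        [Parameter(Mandatory)] [string] $Path,   # root folder to scan
        [string] $Filter = '*.pdf'               # which files to consider
    )

    Get-ChildItem -LiteralPath $Path -Filter $Filter -File -Recurse |
        Group-Object -Property Name |            # one group per distinct file name
        Where-Object Count -gt 1 |               # keep names found more than once
        ForEach-Object {
            [pscustomobject]@{
                Name    = $_.Name
                Folders = $_.Group.DirectoryName # every folder holding that name
            }
        }
}

# Example call, using the folder from the question:
# Get-DuplicateFileName -Path '\\pc90555\c$\Users\mmdu\OneDrive - COWI\Documents\kort2021'
```

Each returned object carries the file name and the list of folders that contain it, so an empty result would mean no name is repeated under the root folder.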

CodePudding user response:

If you want something robust (but time consuming) that should be able to find duplicates from your start position (even if the file name or extension has changed):

# Define the starting point
$ParentFolder = "\\pc90555\c$\Users\mmdu\OneDrive - COWI\Documents\kort2021"

$duplicates = Get-ChildItem $ParentFolder -Recurse -File |
    Get-FileHash -Algorithm MD5 | Group-Object Hash |
    Where-Object Count -GT 1

Then, assuming it found a duplicate, you can inspect the $duplicates variable to get the paths of the matching files:

PS /home/user/Documents> $duplicates[1]

Count Name                      Group
----- ----                      -----
    2 DA312CD92656EDCD5F7CF8DF… {Microsoft.PowerShell.Comman....

PS /home/user/Documents> $duplicates[1].Group

Algorithm Hash                             Path
--------- ----                             ----
MD5       DA312CD92656EDCD5F7CF8DFB07CF0DC /home/user/Documents/test.txt
MD5       DA312CD92656EDCD5F7CF8DFB07CF0DC /home/user/Documents/test2.ext
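Since hashing every file is the time-consuming part, one possible refinement (not part of the answer above) is to group files by size first and hash only those that share a size with at least one other file, because files of different sizes cannot have identical content. The sketch below assumes that approach; the function name `Get-DuplicateFile` is hypothetical.

```powershell
# Hypothetical helper: finds files with identical content, hashing only size collisions.
function Get-DuplicateFile {
    param(
        [Parameter(Mandatory)] [string] $Path    # root folder to scan
    )

    Get-ChildItem -LiteralPath $Path -Recurse -File |
        Group-Object -Property Length |          # equal size is a precondition for equal content
        Where-Object Count -gt 1 |
        ForEach-Object { $_.Group } |            # unwrap back to the file objects
        Get-FileHash -Algorithm MD5 |            # hash only the remaining candidates
        Group-Object -Property Hash |
        Where-Object Count -gt 1 |
        ForEach-Object {
            [pscustomobject]@{
                Hash  = $_.Name
                Paths = $_.Group.Path            # all files sharing this hash
            }
        }
}

# Example call, using the folder from the question:
# Get-DuplicateFile -Path '\\pc90555\c$\Users\mmdu\OneDrive - COWI\Documents\kort2021'
```

Each result lists one hash together with every path that produced it, so the paths can be read directly without indexing into the grouped output.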