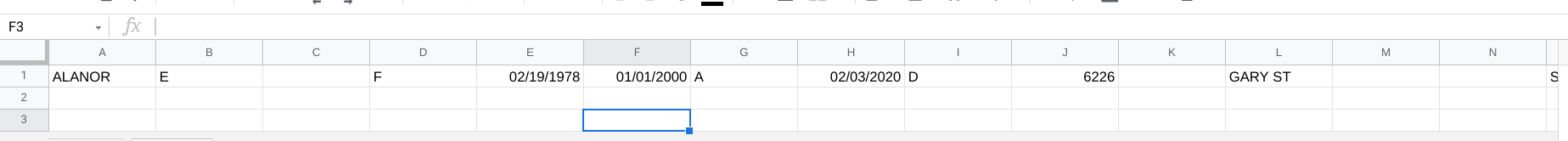

I have a ~1 GB text file with 153 separate fields. I uploaded the file to GCS and then created a new table in BQ with file format as "CSV". For table type, I selected "native table". For schema, I elected to auto-detect. For the field delimiter, I selected "tab". Upon running the job, I received the following error:

Could not parse '15229-1910' as INT64 for field int64_field_19 (position 19) starting at location 318092352 with message 'Unable to parse'

The error originates from a "zip code plus four" field. Is there a way to prevent BigQuery from parsing this value as an integer, or to skip these parse errors altogether so that the job can complete? GCP's documentation advises: "If BigQuery doesn't recognize the format, it loads the column as a string data type. In that case, you might need to preprocess the source data before loading it". The "zip code plus four" field in my file is already a string field, so I'm not sure where to go from here. Since I selected "tab" as the delimiter, does the error indicate that the "zip code plus four" value contains a tab character?

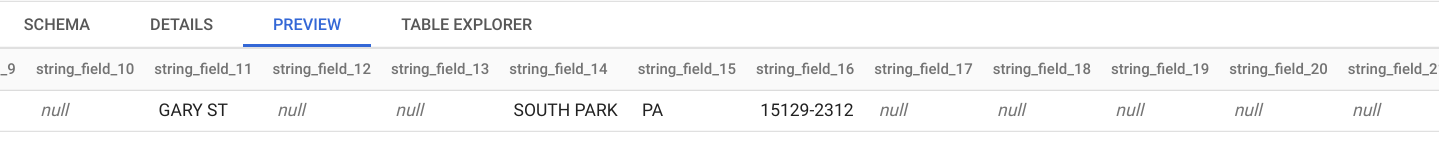

CodePudding user response:

- Save the file with a .tsv extension.

- Load the file into BigQuery with schema auto-detect enabled and tab set as the field delimiter. In my test, all field types were detected without any error, as the resulting BigQuery table in the screenshot shows.

BigQuery Table
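If auto-detect still infers INT64 for the ZIP+4 column on your file (it infers types from a sample of rows, so a column whose sampled values are all five-digit ZIP codes can look numeric), one alternative is to skip auto-detect and supply an explicit schema. Below is a minimal sketch, not a definitive recipe: it generates a BigQuery JSON schema that declares every column as STRING, so nothing is parsed as a number at load time and you can cast columns in SQL afterwards. The column names `field_0` ... `field_152` are hypothetical placeholders, as is the default count of 153 taken from the question; replace them with your real column names.

```python
import json


def build_string_schema(field_count, prefix="field_"):
    """Build a BigQuery JSON schema where every column is a nullable STRING.

    The generated column names (prefix + index) are placeholders,
    not names BigQuery would assign by itself.
    """
    return [
        {"name": f"{prefix}{index}", "type": "STRING", "mode": "NULLABLE"}
        for index in range(field_count)
    ]


if __name__ == "__main__":
    # 153 is the number of fields mentioned in the question.
    print(json.dumps(build_string_schema(153), indent=2))
```

Assuming the script is saved as `make_schema.py` (a made-up file name), you could run `python make_schema.py > schema.json` and pass `schema.json` as the schema argument of `bq load --source_format=CSV --field_delimiter=tab`, or paste its contents into the "Edit as text" schema box in the console with auto-detect turned off.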