I would like to run the code below, which loads a CSV file into a Spark DataFrame, in IntelliJ.

    import org.apache.spark.sql.SQLContext
    import org.apache.spark.{SparkConf, SparkContext}

    object ReadCSVFile {

      case class Employee(empno: String, ename: String, designation: String, manager: String,
                          hire_date: String, sal: String, deptno: String)

      def main(args: Array[String]): Unit = {
        val conf = new SparkConf().setAppName("Read CSV File").setMaster("local[*]")
        val sc = new SparkContext(conf)
        val sqlContext = new SQLContext(sc)
        import sqlContext.implicits._

        val textRDD = sc.textFile("src\\main\\resources\\emp_data.csv")
        //textRDD.foreach(println)

        // Split each line on commas and map the 7 columns onto the case class
        val empRdd = textRDD.map { line =>
          val col = line.split(",")
          Employee(col(0), col(1), col(2), col(3), col(4), col(5), col(6))
        }

        val empDF = empRdd.toDF()
        empDF.show()
      }
    }
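One detail in the mapping step is worth isolating: `line.split(",")` drops trailing empty fields, so a row whose last column is empty produces fewer than 7 elements and `col(6)` throws. The sketch below pulls the parsing out into a plain function that can be tested without Spark. `EmployeeParsing` and `parseEmployee` are hypothetical names, and it assumes a 7-column file with no quoted fields that contain commas.

```scala
// Hypothetical helper: the line-parsing step from ReadCSVFile, without Spark.
// Assumption: exactly 7 comma-separated columns, no quoted fields containing commas.
object EmployeeParsing {

  case class Employee(empno: String, ename: String, designation: String, manager: String,
                      hire_date: String, sal: String, deptno: String)

  // Returns None for lines that do not have exactly 7 fields (blank or malformed lines).
  def parseEmployee(line: String): Option[Employee] = {
    // limit = -1 keeps trailing empty fields; plain split(",") would drop them
    val col = line.split(",", -1).map(_.trim)
    if (col.length == 7) Some(Employee(col(0), col(1), col(2), col(3), col(4), col(5), col(6)))
    else None
  }
}
```

In the Spark job, the `map` could then become `textRDD.flatMap(line => EmployeeParsing.parseEmployee(line).toList)`, which silently skips malformed lines instead of failing the whole job. If the file has a header row, it would still need to be filtered out separately.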

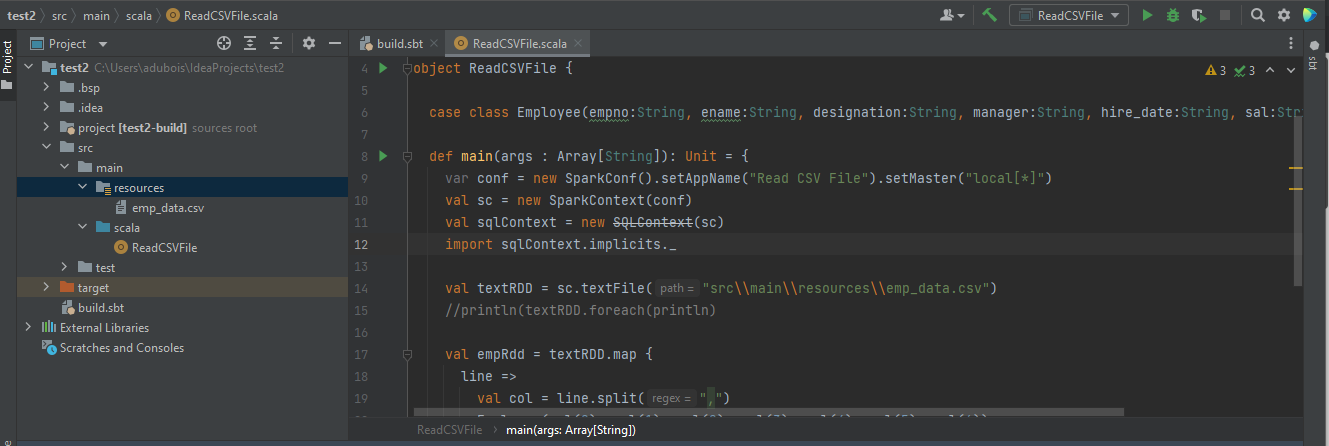

Here is also a screen of Intellij so you get how my project structure is :

Finally, here is my build.sbt file:

    ThisBuild / version := "0.1.0-SNAPSHOT"

    ThisBuild / scalaVersion := "2.12.15"

    // %% appends the Scala binary suffix (_2.12) to the artifact name
    libraryDependencies ++= Seq(
      "org.apache.spark" %% "spark-core" % "2.4.5",
      "org.apache.spark" %% "spark-sql" % "2.4.5"
    )

I am using Oracle OpenJDK 17.0.2 as the project SDK.

When I run the code, I get the following errors:

    Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties
    22/02/28 13:49:17 INFO SparkContext: Running Spark version 2.4.5
    22/02/28 13:49:17 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
    22/02/28 13:49:17 ERROR Shell: Failed to locate the winutils binary in the hadoop binary path
    java.io.IOException: Could not locate executable null\bin\winutils.exe in the Hadoop binaries.
        at org.apache.hadoop.util.Shell.getQualifiedBinPath(Shell.java:378)
        at org.apache.hadoop.util.Shell.getWinUtilsPath(Shell.java:393)
        at org.apache.hadoop.util.Shell.<clinit>(Shell.java:386)
        at org.apache.hadoop.util.StringUtils.<clinit>(StringUtils.java:79)
        at org.apache.hadoop.security.Groups.parseStaticMapping(Groups.java:116)
        at org.apache.hadoop.security.Groups.<init>(Groups.java:93)
        at org.apache.hadoop.security.Groups.<init>(Groups.java:73)
        at org.apache.hadoop.security.Groups.getUserToGroupsMappingService(Groups.java:293)
        at org.apache.hadoop.security.UserGroupInformation.initialize(UserGroupInformation.java:283)
        at org.apache.hadoop.security.UserGroupInformation.ensureInitialized(UserGroupInformation.java:260)
        at org.apache.hadoop.security.UserGroupInformation.loginUserFromSubject(UserGroupInformation.java:789)
        at org.apache.hadoop.security.UserGroupInformation.getLoginUser(UserGroupInformation.java:774)
        at org.apache.hadoop.security.UserGroupInformation.getCurrentUser(UserGroupInformation.java:647)
        at org.apache.spark.util.Utils$.$anonfun$getCurrentUserName$1(Utils.scala:2422)
        at scala.Option.getOrElse(Option.scala:189)
        at org.apache.spark.util.Utils$.getCurrentUserName(Utils.scala:2422)
        at org.apache.spark.SparkContext.<init>(SparkContext.scala:293)
        at ReadCSVFile$.main(ReadCSVFile.scala:10)
        at ReadCSVFile.main(ReadCSVFile.scala)
    22/02/28 13:49:17 INFO SparkContext: Submitted application: Read CSV File
    22/02/28 13:49:18 INFO SecurityManager: Changing view acls to: adamuser
    22/02/28 13:49:18 INFO SecurityManager: Changing modify acls to: adamuser
    22/02/28 13:49:18 INFO SecurityManager: Changing view acls groups to:
    22/02/28 13:49:18 INFO SecurityManager: Changing modify acls groups to:
    22/02/28 13:49:18 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(adamuser); groups with view permissions: Set(); users with modify permissions: Set(adamuser); groups with modify permissions: Set()
    22/02/28 13:49:18 INFO Utils: Successfully started service 'sparkDriver' on port 52614.
    22/02/28 13:49:18 INFO SparkEnv: Registering MapOutputTracker
    22/02/28 13:49:18 INFO SparkEnv: Registering BlockManagerMaster
    22/02/28 13:49:18 INFO BlockManagerMasterEndpoint: Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information
    22/02/28 13:49:18 INFO BlockManagerMasterEndpoint: BlockManagerMasterEndpoint up
    22/02/28 13:49:18 INFO DiskBlockManager: Created local directory at C:\Users\adamuser\AppData\Local\Temp\blockmgr-c2b9fbdc-cd1a-4a49-8e60-10d3a0f21f3a
    22/02/28 13:49:18 INFO MemoryStore: MemoryStore started with capacity 1032.0 MB
    22/02/28 13:49:18 INFO SparkEnv: Registering OutputCommitCoordinator
    22/02/28 13:49:19 INFO Utils: Successfully started service 'SparkUI' on port 4040.
    22/02/28 13:49:19 INFO SparkUI: Bound SparkUI to 0.0.0.0, and started at http://SH-P101.s-h.local:4040
    22/02/28 13:49:19 INFO Executor: Starting executor ID driver on host localhost
    22/02/28 13:49:19 INFO Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 52615.
    22/02/28 13:49:19 INFO NettyBlockTransferService: Server created on SH-P101.s-h.local:52615
    22/02/28 13:49:19 INFO BlockManager: Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy
    22/02/28 13:49:19 INFO BlockManagerMaster: Registering BlockManager BlockManagerId(driver, SH-P101.s-h.local, 52615, None)
    22/02/28 13:49:19 INFO BlockManagerMasterEndpoint: Registering block manager SH-P101.s-h.local:52615 with 1032.0 MB RAM, BlockManagerId(driver, SH-P101.s-h.local, 52615, None)
    22/02/28 13:49:19 INFO BlockManagerMaster: Registered BlockManager BlockManagerId(driver, SH-P101.s-h.local, 52615, None)
    22/02/28 13:49:19 INFO BlockManager: Initialized BlockManager: BlockManagerId(driver, SH-P101.s-h.local, 52615, None)
    22/02/28 13:49:20 WARN BlockManager: Putting block broadcast_0 failed due to exception java.lang.reflect.InaccessibleObjectException: Unable to make field transient java.lang.Object[] java.util.ArrayList.elementData accessible: module java.base does not "opens java.util" to unnamed module @15c43bd9.
    22/02/28 13:49:20 WARN BlockManager: Block broadcast_0 could not be removed as it was not found on disk or in memory
    Exception in thread "main" java.lang.reflect.InaccessibleObjectException: Unable to make field transient java.lang.Object[] java.util.ArrayList.elementData accessible: module java.base does not "opens java.util" to unnamed module @15c43bd9
        at java.base/java.lang.reflect.AccessibleObject.checkCanSetAccessible(AccessibleObject.java:354)
        at java.base/java.lang.reflect.AccessibleObject.checkCanSetAccessible(AccessibleObject.java:297)
        at java.base/java.lang.reflect.Field.checkCanSetAccessible(Field.java:178)
        at java.base/java.lang.reflect.Field.setAccessible(Field.java:172)
        at org.apache.spark.util.SizeEstimator$.$anonfun$getClassInfo$2(SizeEstimator.scala:336)
        at org.apache.spark.util.SizeEstimator$.$anonfun$getClassInfo$2$adapted(SizeEstimator.scala:330)
        at scala.collection.IndexedSeqOptimized.foreach(IndexedSeqOptimized.scala:36)
        at scala.collection.IndexedSeqOptimized.foreach$(IndexedSeqOptimized.scala:33)
        at scala.collection.mutable.ArrayOps$ofRef.foreach(ArrayOps.scala:198)
        at org.apache.spark.util.SizeEstimator$.getClassInfo(SizeEstimator.scala:330)
        at org.apache.spark.util.SizeEstimator$.visitSingleObject(SizeEstimator.scala:222)
        at org.apache.spark.util.SizeEstimator$.estimate(SizeEstimator.scala:201)
        at org.apache.spark.util.SizeEstimator$.estimate(SizeEstimator.scala:69)
        at org.apache.spark.util.collection.SizeTracker.takeSample(SizeTracker.scala:78)
        at org.apache.spark.util.collection.SizeTracker.afterUpdate(SizeTracker.scala:70)
        at org.apache.spark.util.collection.SizeTracker.afterUpdate$(SizeTracker.scala:67)
        at org.apache.spark.util.collection.SizeTrackingVector.$plus$eq(SizeTrackingVector.scala:31)
        at org.apache.spark.storage.memory.DeserializedValuesHolder.storeValue(MemoryStore.scala:665)
        at org.apache.spark.storage.memory.MemoryStore.putIterator(MemoryStore.scala:222)
        at org.apache.spark.storage.memory.MemoryStore.putIteratorAsValues(MemoryStore.scala:299)
        at org.apache.spark.storage.BlockManager.$anonfun$doPutIterator$1(BlockManager.scala:1165)
        at org.apache.spark.storage.BlockManager.doPut(BlockManager.scala:1091)
        at org.apache.spark.storage.BlockManager.doPutIterator(BlockManager.scala:1156)
        at org.apache.spark.storage.BlockManager.putIterator(BlockManager.scala:914)
        at org.apache.spark.storage.BlockManager.putSingle(BlockManager.scala:1481)
        at org.apache.spark.broadcast.TorrentBroadcast.writeBlocks(TorrentBroadcast.scala:123)
        at org.apache.spark.broadcast.TorrentBroadcast.<init>(TorrentBroadcast.scala:88)
        at org.apache.spark.broadcast.TorrentBroadcastFactory.newBroadcast(TorrentBroadcastFactory.scala:34)
        at org.apache.spark.broadcast.BroadcastManager.newBroadcast(BroadcastManager.scala:62)
        at org.apache.spark.SparkContext.broadcast(SparkContext.scala:1489)
        at org.apache.spark.SparkContext.$anonfun$hadoopFile$1(SparkContext.scala:1035)
        at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)
        at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:112)
        at org.apache.spark.SparkContext.withScope(SparkContext.scala:699)
        at org.apache.spark.SparkContext.hadoopFile(SparkContext.scala:1027)
        at org.apache.spark.SparkContext.$anonfun$textFile$1(SparkContext.scala:831)
        at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)
        at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:112)
        at org.apache.spark.SparkContext.withScope(SparkContext.scala:699)
        at org.apache.spark.SparkContext.textFile(SparkContext.scala:828)
        at ReadCSVFile$.main(ReadCSVFile.scala:14)
        at ReadCSVFile.main(ReadCSVFile.scala)
    22/02/28 13:49:20 INFO SparkContext: Invoking stop() from shutdown hook
    22/02/28 13:49:20 INFO SparkUI: Stopped Spark web UI at http://SH-P101.s-h.local:4040
    22/02/28 13:49:20 INFO MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!
    22/02/28 13:49:20 INFO MemoryStore: MemoryStore cleared
    22/02/28 13:49:20 INFO BlockManager: BlockManager stopped
    22/02/28 13:49:20 INFO BlockManagerMaster: BlockManagerMaster stopped
    22/02/28 13:49:20 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!
    22/02/28 13:49:20 INFO SparkContext: Successfully stopped SparkContext
    22/02/28 13:49:20 INFO ShutdownHookManager: Shutdown hook called
    22/02/28 13:49:20 INFO ShutdownHookManager: Deleting directory C:\Users\adamuser\AppData\Local\Temp\spark-67d11686-a6b8-4744-ab04-2ac1623db9dd

    Process finished with exit code 1

I tried to resolve the first error: "java.io.IOException: Could not locate executable null\bin\winutils.exe in the Hadoop binaries."

I downloaded winutils.exe, put it in C:\hadoop\bin, and set the HADOOP_HOME environment variable to C:\hadoop\bin, but that didn't change anything. I also tried adding this to my code:

    System.setProperty("hadoop.home.dir", "c/hadoop/bin")

but it didn't work either.

I couldn't find anything about the second error, but I suspect my resource file can't be found (maybe the path isn't defined properly?).
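The second stack trace actually fails inside `SparkContext.broadcast` while `textFile` is still setting up, before any file is opened. With a wrong path, Spark typically fails later, when an action such as `show()` runs, with an "Input path does not exist" error. To rule the path out anyway, a quick check like the sketch below resolves the path against the JVM working directory. `InputCheck` and `resourcePath` are hypothetical names, and it assumes IntelliJ runs the program with the project root as its working directory (its usual default, but check the run configuration).

```scala
import java.nio.file.{Files, Path, Paths}

// Hypothetical helper: resolve the CSV path and check it exists before handing it to Spark.
// Assumption: the working directory ("user.dir") is the project root.
object InputCheck {

  // Paths.get joins the parts with the platform separator instead of a hard-coded "\\"
  def resourcePath(baseDir: String): Path =
    Paths.get(baseDir, "src", "main", "resources", "emp_data.csv").toAbsolutePath.normalize()

  def main(args: Array[String]): Unit = {
    val csv = resourcePath(System.getProperty("user.dir"))
    if (Files.exists(csv)) println(s"Found input: $csv")
    else println(s"Input file not found: $csv")
  }
}
```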

Can someone suggest what is wrong and what needs to be changed?


Answer (both issues were resolved in the comments):

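For the first error (winutils.exe): set the HADOOP_HOME environment variable to C:\hadoop, without "bin", because Hadoop appends \bin\winutils.exe to it itself. This is also why the message shows null\bin\winutils.exe when no Hadoop home is found. Then append C:\hadoop\bin to the PATH environment variable.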
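The same setting can be made from code through the `hadoop.home.dir` system property, which Hadoop checks before falling back to HADOOP_HOME. The sketch below shows the corrected value (C:\hadoop, not C:\hadoop\bin) and verifies that winutils.exe sits where Hadoop will look. `HadoopHomeSetup`, `winutilsPath` and `configure` are hypothetical names, and it assumes winutils.exe was copied to C:\hadoop\bin as described in the question. Environment variables are read when a process starts, so after changing HADOOP_HOME or PATH, IntelliJ usually has to be restarted before the run picks them up.

```scala
import java.nio.file.{Files, Path, Paths}

// Hypothetical helper: point Hadoop at its home directory from code and check for winutils.exe.
// Assumption: winutils.exe lives in <hadoopHome>\bin, e.g. C:\hadoop\bin\winutils.exe.
object HadoopHomeSetup {

  // Hadoop itself appends bin\winutils.exe, so the home directory must NOT end in "bin"
  def winutilsPath(hadoopHome: String): Path = Paths.get(hadoopHome, "bin", "winutils.exe")

  def configure(hadoopHome: String): Boolean = {
    // Must run before new SparkContext(...): Hadoop's Shell class resolves the
    // winutils path once, in its static initializer (Shell.<clinit> in the trace)
    System.setProperty("hadoop.home.dir", hadoopHome)
    Files.isRegularFile(winutilsPath(hadoopHome))
  }

  def main(args: Array[String]): Unit = {
    val found = configure("C:\\hadoop")
    println(s"winutils.exe found: $found")
  }
}
```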

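For the second error (InaccessibleObjectException): use JDK 8. Spark 2.4.5 supports only Java 8 (Java 17 support was added in Spark 3.3). On Java 17 the module system blocks the reflective access that Spark's SizeEstimator needs ("module java.base does not "opens java.util""), so this error is not caused by the CSV path.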
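To avoid hitting this again with the wrong SDK selected, the program could check the JVM version at startup and stop with a clear message. This is a minimal sketch; `JavaVersionCheck`, `majorVersion` and `requireJava8` are hypothetical names. It relies on the standard `java.specification.version` property, which is "1.8" on Java 8 and "9", "11", "17", and so on for later releases.

```scala
// Hypothetical helper: fail fast on a JDK that Spark 2.4.x does not support,
// instead of the InaccessibleObjectException from the log.
object JavaVersionCheck {

  // Maps "1.8" to 8 and "17" to 17
  def majorVersion(spec: String): Int =
    if (spec.startsWith("1.")) spec.drop(2).takeWhile(_.isDigit).toInt
    else spec.takeWhile(_.isDigit).toInt

  def requireJava8(): Unit = {
    val major = majorVersion(System.getProperty("java.specification.version"))
    require(major == 8, s"Spark 2.4.x needs Java 8, but this JVM is Java $major")
  }

  def main(args: Array[String]): Unit = {
    // In ReadCSVFile, this call would go before new SparkContext(conf)
    requireJava8()
    println("Running on Java 8")
  }
}
```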