I have metrics-server running on my cluster, along with a horizontal pod autoscaler that uses it.

This works perfectly fine until I inject linkerd-proxies into the deployments of the namespace where my application is running. After that, running `kubectl top pod` in that namespace fails with `error: Metrics not available for pod <name>`. However, nothing appears in the metrics-server pod's logs.

The metrics-server itself clearly works, because `kubectl top` works in every namespace except the meshed one.

At first I thought it could be because the proxies' resource requests/limits weren't set, but after re-running the injection with them set (`kubectl get -n <namespace> deploy -o yaml | linkerd inject - --proxy-cpu-request "10m" --proxy-cpu-limit "1" --proxy-memory-request "64Mi" --proxy-memory-limit "256Mi" | kubectl apply -f -`), the issue stays the same.

Is this a known problem? Are there any possible solutions?

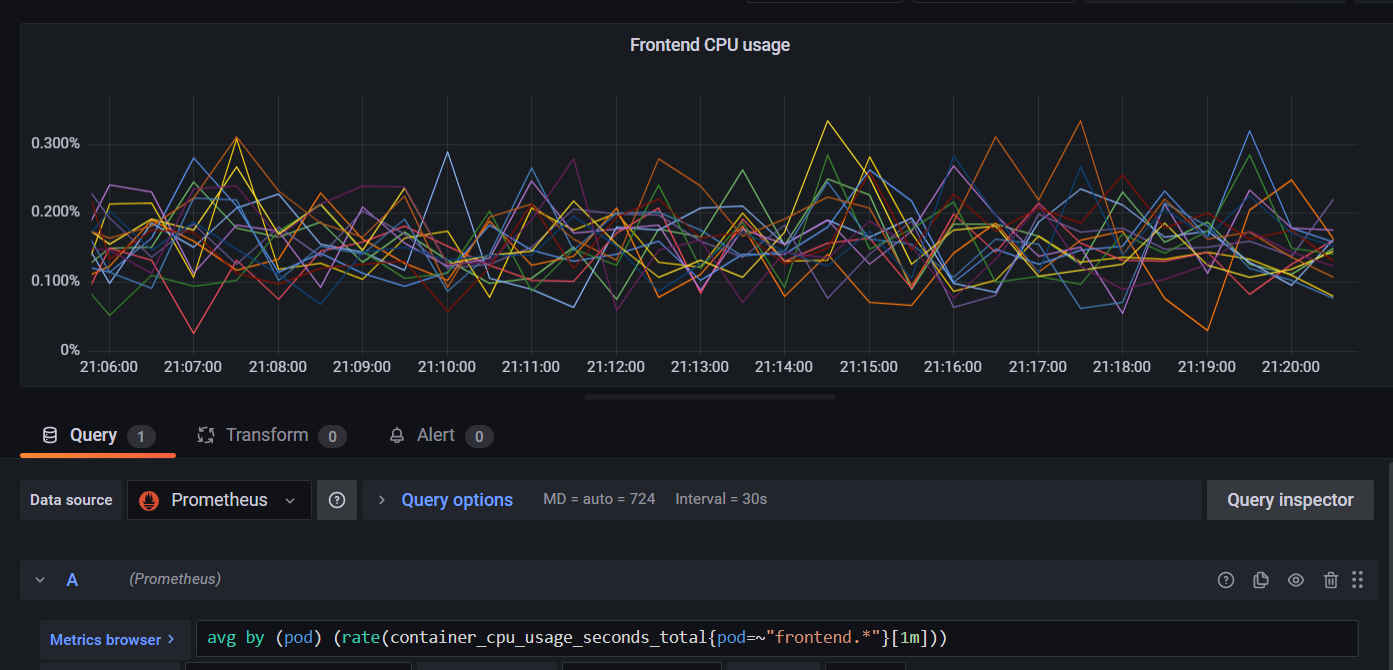

PS: I have kube-prometheus-stack running in a different namespace, and it seems to scrape the pod metrics from the meshed pods just fine.

Answer:

I'm able to use kubectl top on pods that have linkerd injected:

```
:; kubectl top pod -n linkerd --containers
POD                                       NAME             CPU(cores)   MEMORY(bytes)
linkerd-destination-5cfbd7468-7l22t       destination      2m           41Mi
linkerd-destination-5cfbd7468-7l22t       linkerd-proxy    1m           13Mi
linkerd-destination-5cfbd7468-7l22t       policy           1m           81Mi
linkerd-destination-5cfbd7468-7l22t       sp-validator     1m           34Mi
linkerd-identity-fc9bb697-s6dxw           identity         1m           33Mi
linkerd-identity-fc9bb697-s6dxw           linkerd-proxy    1m           12Mi
linkerd-proxy-injector-668455b959-rlvkj   linkerd-proxy    1m           13Mi
linkerd-proxy-injector-668455b959-rlvkj   proxy-injector   1m           40Mi
```

So I don't think there's anything fundamentally incompatible between Linkerd and the Kubernetes metrics server.
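To see exactly which pods in a namespace the metrics-server is leaving out, you could compare the pod list with the pods the Metrics API reports. This is a minimal sketch, assuming a POSIX shell and `kubectl` pointed at the cluster; `missing_pod_metrics` is a hypothetical helper name, and it relies on metrics-server serving the `pods.metrics.k8s.io` resource.

```shell
#!/bin/sh
# Sketch: print pods in a namespace that have no PodMetrics object in the
# Metrics API (served by metrics-server). Assumes kubectl access to the cluster.
# `missing_pod_metrics` is a hypothetical helper, not a kubectl command.
missing_pod_metrics() {
  ns="$1"
  # All pod names in the namespace, one per line.
  all=$(kubectl get pods -n "$ns" \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\n"}{end}')
  # Pod names the metrics-server currently reports for the namespace.
  have=$(kubectl get pods.metrics.k8s.io -n "$ns" \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\n"}{end}')
  # Print every pod that is missing from the metrics list (exact whole-line match).
  printf '%s\n' "$all" | while IFS= read -r pod; do
    [ -n "$pod" ] || continue
    if ! printf '%s\n' "$have" | grep -qxF -- "$pod"; then
      printf '%s\n' "$pod"
    fi
  done
}
```

Running `missing_pod_metrics <namespace>` on the meshed namespace should list every pod that `kubectl top` complains about; on a healthy namespace it prints nothing.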

I have noticed that I sometimes see errors for the first minute or so after a pod starts, before the metrics server has collected its initial stats for the pod; but these error messages look a little different from the one you mention:

```
:; kubectl rollout restart -n linkerd deployment linkerd-destination
deployment.apps/linkerd-destination restarted
:; while ! kubectl top pod -n linkerd --containers linkerd-destination-6d974dd4c7-vw7nw ; do sleep 10 ; done
Error from server (NotFound): podmetrics.metrics.k8s.io "linkerd/linkerd-destination-6d974dd4c7-vw7nw" not found
Error from server (NotFound): podmetrics.metrics.k8s.io "linkerd/linkerd-destination-6d974dd4c7-vw7nw" not found
Error from server (NotFound): podmetrics.metrics.k8s.io "linkerd/linkerd-destination-6d974dd4c7-vw7nw" not found
Error from server (NotFound): podmetrics.metrics.k8s.io "linkerd/linkerd-destination-6d974dd4c7-vw7nw" not found
POD                                    NAME            CPU(cores)   MEMORY(bytes)
linkerd-destination-6d974dd4c7-vw7nw   destination     1m           25Mi
linkerd-destination-6d974dd4c7-vw7nw   linkerd-proxy   1m           13Mi
linkerd-destination-6d974dd4c7-vw7nw   policy          1m           18Mi
linkerd-destination-6d974dd4c7-vw7nw   sp-validator    1m           19Mi

:; kubectl version --short
Client Version: v1.23.3
Server Version: v1.21.7+k3s1
```
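The `while` loop in that session retries forever, which is fine interactively but not in a script. A bounded variant might look like the sketch below; `wait_for_pod_metrics` and its default of 12 attempts 10 seconds apart are hypothetical choices, not anything Linkerd or metrics-server prescribe.

```shell
#!/bin/sh
# Sketch: a bounded version of the `while ! kubectl top pod ...` retry loop.
# Polls `kubectl top pod` until the pod's metrics appear (and prints them),
# or gives up after MAX attempts.
# Arguments: namespace, pod, max attempts (default 12), seconds between tries (default 10).
wait_for_pod_metrics() {
  ns="$1"
  pod="$2"
  max="${3:-12}"
  interval="${4:-10}"
  i=1
  while [ "$i" -le "$max" ]; do
    # Keep stdout; discard the transient NotFound errors printed on stderr.
    if out=$(kubectl top pod -n "$ns" --containers "$pod" 2>/dev/null); then
      printf '%s\n' "$out"
      return 0
    fi
    # Don't sleep after the final attempt.
    if [ "$i" -lt "$max" ]; then
      sleep "$interval"
    fi
    i=$((i + 1))
  done
  echo "no metrics for $ns/$pod after $max attempts" >&2
  return 1
}
```

With the defaults, `wait_for_pod_metrics linkerd linkerd-destination-6d974dd4c7-vw7nw` gives up after roughly two minutes, which comfortably covers the start-up delay; a pod that still has no metrics after that is more likely hitting a persistent problem than the initial warm-up.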

Answer:

The problem was apparently a bug in the kubelet's cAdvisor stats provider when used with a CRI runtime: the linkerd-init containers are still reported, with zero values, after they've terminated, which shouldn't happen. The metrics-server ignores stats for any pod that contains a container reporting zero values (to avoid reporting invalid metrics, e.g. while a container is restarting or before its metrics have been collected), so every meshed pod gets dropped. You can follow up on the issue here. Solutions seem to be switching to another runtime, or enabling the `PodAndContainerStatsFromCRI` feature gate, which makes the kubelet's CRI stats provider responsible instead of the cAdvisor one.
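If this is the cause, the pods `kubectl top` fails for should be exactly the ones that declare a `linkerd-init` init container. A sketch for listing them, assuming a POSIX shell with `awk` and kubectl access; `pods_with_init_container` is a hypothetical helper name, and the container name is a parameter that only defaults to `linkerd-init`.

```shell
#!/bin/sh
# Sketch: list pods in a namespace that declare a given init container
# (default: linkerd-init). `pods_with_init_container` is a hypothetical helper.
pods_with_init_container() {
  ns="$1"
  want="${2:-linkerd-init}"
  # One line per pod: "<pod name><TAB><space-separated init container names>".
  kubectl get pods -n "$ns" \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.initContainers[*].name}{"\n"}{end}' |
    awk -F '\t' -v want="$want" '{
      # Split the init container names and print the pod if one matches exactly.
      n = split($2, names, " ")
      for (i = 1; i <= n; i++) {
        if (names[i] == want) { print $1; break }
      }
    }'
}
```

If the pods from `pods_with_init_container <namespace>` match the ones reporting `Metrics not available`, that is consistent with the zero-valued init container explanation.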