I'm going insane trying to get this to work.

I'm trying to duplicate the following Java function.

I thought this might be standard hex decoding, so I used the decoding function I usually use:

```swift
import Foundation

extension String {
    /// A data representation of the hexadecimal bytes in this string.
    var hexDecodedData: Data {
        // Get the UTF8 characters of this string
        let chars = Array(utf8)
        // Keep the bytes in a UInt8 array and later convert it to Data
        var bytes = [UInt8]()
        bytes.reserveCapacity(count / 2)
        // It is a lot faster to use a lookup map instead of strtoul
        let map: [UInt8] = [
            0x00, 0x01, 0x02, 0x03, 0x04, 0x05, 0x06, 0x07, // 01234567
            0x08, 0x09, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, // 89:;<=>?
            0x00, 0x0a, 0x0b, 0x0c, 0x0d, 0x0e, 0x0f, 0x00, // @ABCDEFG
            0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00, 0x00  // HIJKLMNO
        ]
        // Grab two characters at a time, map them and turn them into a byte
        // (assumes an even-length string of ASCII hex digits)
        for i in stride(from: 0, to: count, by: 2) {
            let index1 = Int(chars[i] & 0x1F ^ 0x10)
            let index2 = Int(chars[i + 1] & 0x1F ^ 0x10)
            bytes.append(map[index1] << 4 | map[index2])
        }
        return Data(bytes)
    }
}
```

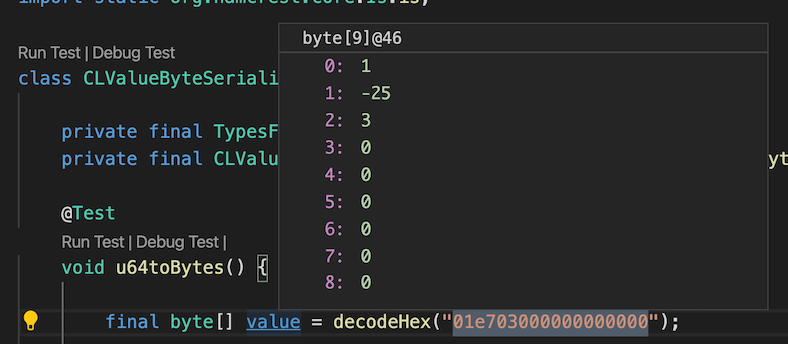

This results in

[1, 231, 3, 0, 0, 0, 0, 0, 0]

So then I tried converting the Java code to Swift myself:

```swift
extension String {
    // public static byte[] decodeHex(final char[] data) throws DecoderException
    func decodeHex() throws -> [Int] {
        let stringArray = Array(self)
        let len = count
        var out: [Int?] = Array(repeating: nil, count: len >> 1)
        if (len & 0x01) != 0 {
            throw HExDecodingError.oddNumberOfCharacters
        }
        var i = 0
        var j = 0
        while j < len {
            var f: Int = try Self.toDigit(char: String(stringArray[j]), index: j)
            j += 1
            f = f | (try Self.toDigit(char: String(stringArray[j]), index: j))
            j += 1
            out[i] = f & 0xFF
            i += 1
        }
        return out.compactMap { $0 }
    }

    enum HExDecodingError: Error {
        case oddNumberOfCharacters
        case illegalCharacter(String)
        case conversionToDigitFailed(String, Int)
    }

    static func toDigit(char: String, index: Int) throws -> Int {
        // Int(_:radix:) returns nil (not -1) for a non-hex character
        let parsed = Int(char, radix: 16)
        if parsed == -1 {
            throw HExDecodingError.illegalCharacter(char)
        }
        guard let digit = parsed else {
            throw HExDecodingError.conversionToDigitFailed(char, index)
        }
        return digit
    }
}
```

Which results in

[1, 15, 3, 0, 0, 0, 0, 0, 0]
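The `15` at index 1 is what you get when the two hex digits of a pair are OR-ed together without shifting the first one. Assuming the Java function being translated is Apache Commons Codec's `Hex.decodeHex` (the commented-out signature matches it), the original computes `toDigit(data[j], j) << 4` for the first digit of each pair, whereas `decodeHex()` above assigns that digit to `f` unshifted. A minimal sketch of the difference for the pair `E7`; the helper names are hypothetical:

```swift
// Hypothetical helpers contrasting the two ways of combining a hex pair.

// What the Swift translation does: the nibbles overlap.
func combineWithoutShift(_ high: Int, _ low: Int) -> Int {
    return high | low          // 0xE | 0x7 == 0xF (15)
}

// What Java's decodeHex does: the first digit becomes the upper 4 bits.
func combineWithShift(_ high: Int, _ low: Int) -> Int {
    return (high << 4) | low   // (0xE << 4) | 0x7 == 0xE7 (231)
}

let high = Int("E", radix: 16)!  // 14
let low = Int("7", radix: 16)!   // 7
print(combineWithoutShift(high, low)) // 15
print(combineWithShift(high, low))    // 231
```

Applying the same change inside `decodeHex()` (`var f: Int = try Self.toDigit(...) << 4`) should make its output match the lookup-table version.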

What is going on? What am I doing wrong?

EDIT:

Also, how can there possibly be a negative number in there, since a byte array is represented as a `[UInt8]`?

Answer:

In Java, `byte` is an 8-bit signed two's-complement integer. It has a minimum value of -128 and a maximum value of 127 (inclusive).

(Java, in general, does not have unsigned primitive types, only signed ones.)
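On the Swift side, the type with the same range as Java's `byte` is `Int8`; `UInt8` occupies the same 8 bits but reads them as unsigned. A quick check of the ranges using only the standard library:

```swift
// Int8: signed 8-bit two's complement, same range as Java's byte.
print(Int8.min, Int8.max)   // -128 127
// UInt8: the same 8 bits, interpreted as unsigned.
print(UInt8.min, UInt8.max) // 0 255
// Both types are one byte wide.
print(MemoryLayout<Int8>.size, MemoryLayout<UInt8>.size) // 1 1
```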

In your Java output, the -25 value corresponds to hex E7 in your string, whose decimal value is E * 16 + 7 = 14 * 16 + 7 = 231; 231 is outside of the [-128, 127] range, and wraps around to -25. (More precisely, the bit pattern of unsigned 8-bit 231 corresponds to the bit pattern of signed 8-bit -25 in two's complement.)
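The wrap-around can be reproduced in Swift by reinterpreting the bits rather than converting the value. A short sketch using the standard library's `Int8(bitPattern:)` and `UInt8(bitPattern:)` initializers:

```swift
// 0xE7 read as unsigned 8-bit is 231.
let unsigned: UInt8 = 0xE7
// The same 8 bits read as signed 8-bit two's complement are -25.
let signed = Int8(bitPattern: unsigned)
print(unsigned, signed)          // 231 -25
// Arithmetic view: values >= 128 wrap by subtracting 256.
print(Int(unsigned) - 256)       // -25
// Reinterpreting back recovers 231 without changing any bits.
print(UInt8(bitPattern: signed)) // 231
```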

In Swift, you're using a UInt8 to represent results (both explicitly, and implicitly in a Data value), and the range of UInt8 is [0, 255]; 231 fits within this range, and is what you see in your first Swift code snippet. The results are bitwise equivalent, but if you need results which are type equivalent to what you're seeing in Java, you'll need to work in terms of Int8 instead of UInt8.
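Following that suggestion, here is a minimal sketch of a decoder that produces `[Int8]`, so the printed values line up with what Java shows for a `byte[]`. The method name `hexDecodedSignedBytes`, the `HexDecodingError` type, and the sample input `"01E703"` are hypothetical, not taken from the question:

```swift
// Hypothetical error type for this sketch.
enum HexDecodingError: Error {
    case oddNumberOfCharacters
    case illegalCharacter(Character, index: Int)
}

extension String {
    /// Decodes pairs of hex digits into signed bytes (e.g. "E7" -> -25).
    func hexDecodedSignedBytes() throws -> [Int8] {
        let chars = Array(self)
        guard chars.count % 2 == 0 else {
            throw HexDecodingError.oddNumberOfCharacters
        }
        var out = [Int8]()
        out.reserveCapacity(chars.count / 2)
        var j = 0
        while j < chars.count {
            guard let high = chars[j].hexDigitValue else {
                throw HexDecodingError.illegalCharacter(chars[j], index: j)
            }
            guard let low = chars[j + 1].hexDigitValue else {
                throw HexDecodingError.illegalCharacter(chars[j + 1], index: j + 1)
            }
            // First digit forms the upper 4 bits, second digit the lower 4.
            let byte = UInt8((high << 4) | low)
            // Same 8 bits, read as signed (like a Java byte).
            out.append(Int8(bitPattern: byte))
            j += 2
        }
        return out
    }
}

do {
    print(try "01E703".hexDecodedSignedBytes()) // [1, -25, 3]
} catch {
    print("decoding failed: \(error)")
}
```

Because `Int8(bitPattern:)` leaves the bits untouched, the `Data` or `[UInt8]` result from the first snippet already holds the same bytes; the signed view only matters when you want the numbers to print the way Java prints them.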