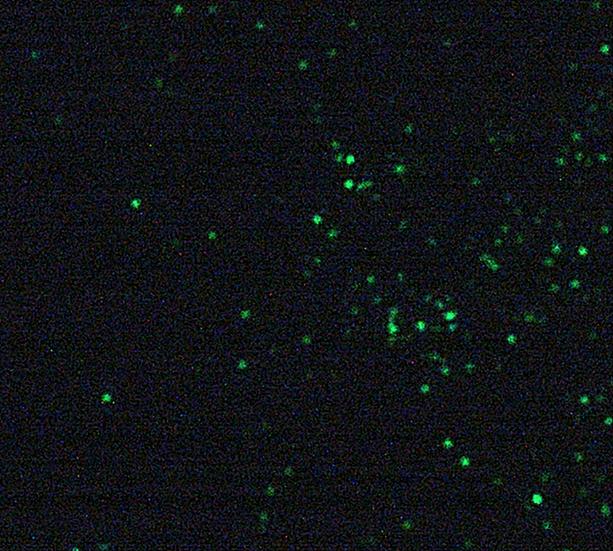

I'm looking for a proper solution how to count particles and measure their sizes in this image:

In the end I need to obtain lists of the particles' coordinates and areas. After some searching on the internet, I found three approaches to particle detection:

- Blobs

- Contours

- connectedComponentsWithStats

Looking at different projects, I assembled some code that mixes these approaches.

    import pylab
    import cv2
    import numpy as np

Gaussian blurring and thresholding

    original_image = cv2.imread(img_path)
    img = original_image
    img = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    img = cv2.GaussianBlur(img, (5, 5), 0)
    img = cv2.blur(img, (5, 5))
    img = cv2.medianBlur(img, 5)
    img = cv2.bilateralFilter(img, 6, 50, 50)

    max_value = 255
    adaptive_method = cv2.ADAPTIVE_THRESH_GAUSSIAN_C
    threshold_type = cv2.THRESH_BINARY
    block_size = 11
    img_thresholded = cv2.adaptiveThreshold(img, max_value, adaptive_method, threshold_type, block_size, -3)

Filter small objects

    min_size = 4
    nb_components, output, stats, centroids = cv2.connectedComponentsWithStats(img, connectivity=8)
    # The last column of stats is the component area; row 0 is the background
    sizes = stats[1:, -1]
    nb_components = nb_components - 1

    # for every component in the image, you keep it only if it's above min_size
    for i in range(0, nb_components):
        if sizes[i] < min_size:
            img[output == i + 1] = 0

Generate contours for filling holes and for measurements. `pos_list` and `size_list` are what we are looking for.

    contours, hierarchy = cv2.findContours(img, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)

    pos_list = []
    size_list = []
    for contour in contours:
        area = cv2.contourArea(contour)
        size_list.append(area)
        # Use the center of the minimal enclosing circle as the particle position
        (x, y), radius = cv2.minEnclosingCircle(contour)
        pos_list.append((int(x), int(y)))

As a self-check, we can plot these coordinates over the original image:

    pts = np.array(pos_list)
    pylab.figure(0)
    pylab.imshow(original_image)
    pylab.scatter(pts[:, 0], pts[:, 1], marker="x", color="green", s=5, linewidths=1)
    pylab.show()

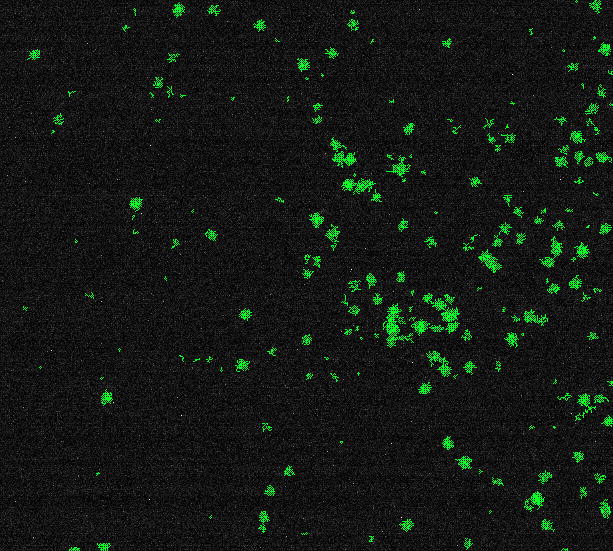

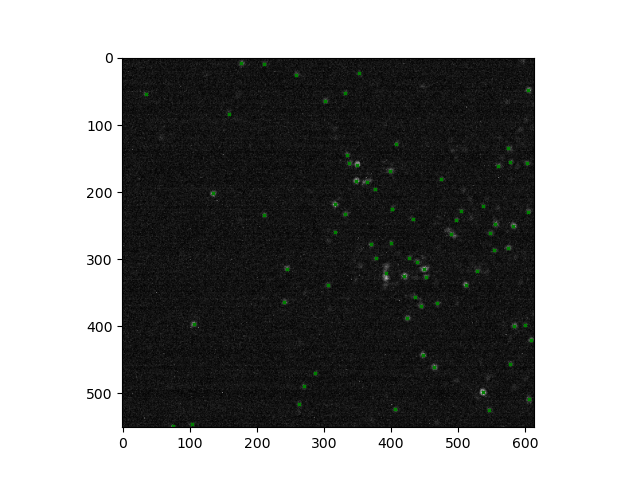

We might get something like the following:

And... I'm not really satisfied with the results. Some clearly visible particles are missed, while some dubious intensity fluctuations have been counted. I'm now playing with different filter settings, but I have the feeling that the approach itself is wrong.

If someone knows how to improve my solution, please share.

CodePudding user response:

Not an answer, but I need to show an image.

Your grayscale image looks very much like a Bayer-pattern-encoded one. You should debayer (demosaic) it.

CodePudding user response:

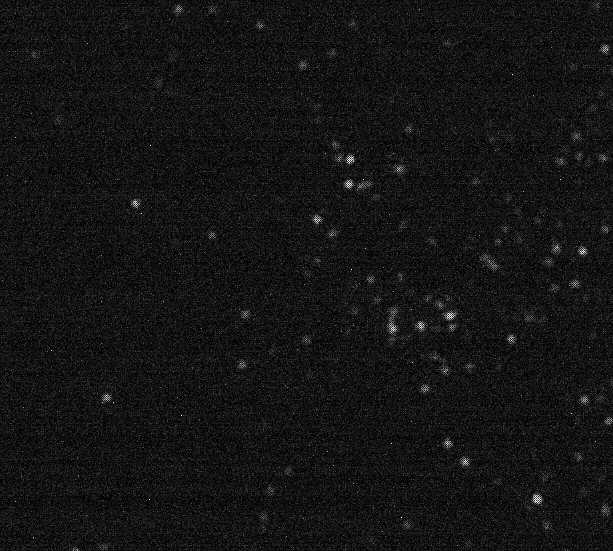

Since the particles are white and the background is black, we can use Kmeans color quantization with clusters=2 to segment the image into two groups. This makes it easy to distinguish the particles from the background. Since the particles may be very tiny, we should avoid blurring, dilating, or any other morphological operation that may alter the particle contours. Here's an approach:

- Kmeans color quantization. We perform Kmeans with two clusters, convert to grayscale, then apply Otsu's threshold to obtain a binary image.

- Filter out super tiny noise. Next we find contours, remove tiny specks of noise using contour area filtering, and collect each particle's (x, y) coordinate and its area. We remove tiny particles on the binary mask by "filling in" these contours to effectively erase them.

- Apply mask onto original image. Now we bitwise-and the filtered mask onto the original image to highlight the particle clusters.

Kmeans with clusters=2

Result

Number of particles: 204

Average particle size: 30.537

Code

    import cv2
    import numpy as np
    import pylab

    # Kmeans
    def kmeans_color_quantization(image, clusters=8, rounds=1):
        h, w = image.shape[:2]
        samples = np.zeros([h*w,3], dtype=np.float32)
        count = 0

        # Copy every pixel into one row of the sample matrix
        for x in range(h):
            for y in range(w):
                samples[count] = image[x][y]
                count += 1

        compactness, labels, centers = cv2.kmeans(samples,
                clusters,
                None,
                (cv2.TERM_CRITERIA_EPS + cv2.TERM_CRITERIA_MAX_ITER, 10000, 0.0001),
                rounds,
                cv2.KMEANS_RANDOM_CENTERS)

        centers = np.uint8(centers)
        res = centers[labels.flatten()]
        return res.reshape((image.shape))

    # Load image
    image = cv2.imread('1.png')
    original = image.copy()

    # Perform kmeans color segmentation, grayscale, Otsu's threshold
    kmeans = kmeans_color_quantization(image, clusters=2)
    gray = cv2.cvtColor(kmeans, cv2.COLOR_BGR2GRAY)
    thresh = cv2.threshold(gray, 0, 255, cv2.THRESH_BINARY + cv2.THRESH_OTSU)[1]

    # Find contours, remove tiny specks using contour area filtering, gather points
    points_list = []
    size_list = []
    cnts, _ = cv2.findContours(thresh, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)[-2:]
    AREA_THRESHOLD = 2
    for c in cnts:
        area = cv2.contourArea(c)
        if area < AREA_THRESHOLD:
            cv2.drawContours(thresh, [c], -1, 0, -1)
        else:
            (x, y), radius = cv2.minEnclosingCircle(c)
            points_list.append((int(x), int(y)))
            size_list.append(area)

    # Apply mask onto original image
    result = cv2.bitwise_and(original, original, mask=thresh)
    result[thresh==255] = (36,255,12)

    # Overlay on original
    original[thresh==255] = (36,255,12)

    print("Number of particles: {}".format(len(points_list)))
    print("Average particle size: {:.3f}".format(sum(size_list)/len(size_list)))

    # Display
    cv2.imshow('kmeans', kmeans)
    cv2.imshow('original', original)
    cv2.imshow('thresh', thresh)
    cv2.imshow('result', result)
    cv2.waitKey()
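As a side note, the nested loop that fills `samples` in `kmeans_color_quantization` visits the pixels row by row, so the same matrix can probably be built with a single NumPy reshape, which is much faster on large images. A small sketch, assuming a 3-channel input; the helper name `pixels_as_samples` and the random test image are hypothetical:

```python
import numpy as np


def pixels_as_samples(image):
    """Flatten an H x W x 3 image into an (H*W) x 3 float32 matrix, row by row."""
    return image.reshape(-1, 3).astype(np.float32)


# Made-up 4 x 5 test image, used only to compare against the explicit loop.
rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(4, 5, 3), dtype=np.uint8)

# Reference: the explicit per-pixel loop from the function above.
h, w = image.shape[:2]
looped = np.zeros([h * w, 3], dtype=np.float32)
count = 0
for x in range(h):
    for y in range(w):
        looped[count] = image[x][y]
        count += 1

samples = pixels_as_samples(image)
print(np.array_equal(samples, looped))
```

The resulting `samples` array can be passed to `cv2.kmeans` in place of the loop-built one.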