I ran the lines below in the pyspark shell (Mac, 8 cores).

import pandas as pd

df = spark.createDataFrame(pd.DataFrame(dict(a=list(range(1000)))))

df.show()

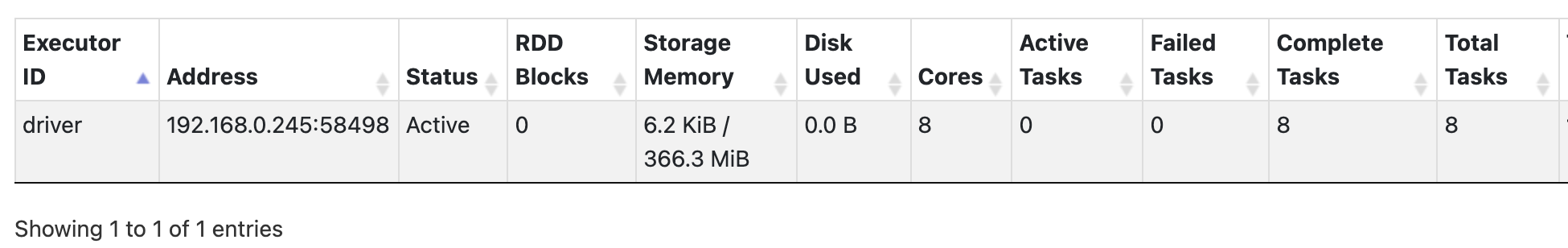

I want to count my worker nodes (and see the number of cores on each), so I run the python commands in

Can work be done by the cores in the driver? If so, are 7 cores doing work and 1 is reserved for "driver" functionality? Why aren't worker nodes being created automatically?

CodePudding user response:

It's not up to Spark to figure out the perfect cluster for the hardware you provide (and what counts as a perfect infrastructure is highly task-specific anyway).

Actually, the behaviour you described is the default way Spark sets up its infrastructure if you run on a YARN master (see the spark.executor.cores option in the docs).
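To see what your shell is actually using before changing anything, a small check like the one below can help. It is only a sketch: `describe_session` is a hypothetical helper name, and it assumes the `spark` session object that the pyspark shell creates for you. In a plain local shell the master usually reports as `local[*]`, which means the executor threads run inside the driver process rather than on separate worker nodes.

```python
def describe_session(spark):
    """Collect a few settings that show how this session is laid out."""
    sc = spark.sparkContext
    conf = sc.getConf()  # a copy of the active SparkConf
    return {
        "master": sc.master,                           # e.g. a local[...] or yarn master URL
        "default_parallelism": sc.defaultParallelism,  # default number of partitions/tasks
        # Falls back to "not set" when the option was never configured explicitly.
        "executor_cores": conf.get("spark.executor.cores", "not set"),
    }

# In the pyspark shell, where `spark` already exists:
# for name, value in describe_session(spark).items():
#     print(name, "=", value)
```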

To modify it, you have to either pass some options when launching the pyspark shell or do it inside your code with, for example:

from pyspark.sql import SparkSession

conf = spark.sparkContext._conf.setAll([('spark.executor.memory', '4g'), ('spark.executor.cores', '4'), ('spark.cores.max', '4'), ('spark.driver.memory', '4g')])

spark.sparkContext.stop()

spark = SparkSession.builder.config(conf=conf).getOrCreate()
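The other route, passing the options at launch time, can also be sketched from Python for the case where you start Spark from a plain Python process instead of the `pyspark` command. This relies on the `PYSPARK_SUBMIT_ARGS` environment variable, which PySpark reads when it launches the JVM. `build_submit_args` is a hypothetical helper, the option values simply mirror the ones above, and the variable has no effect on a session that is already running.

```python
import os

def build_submit_args(conf, master=None):
    """Render Spark options as a PYSPARK_SUBMIT_ARGS string."""
    parts = []
    if master is not None:
        parts += ["--master", master]
    for key, value in conf.items():
        parts += ["--conf", f"{key}={value}"]
    # PySpark expects the string to end with the "pyspark-shell" resource name.
    parts.append("pyspark-shell")
    return " ".join(parts)

# Same illustrative values as in the answer above.
options = {
    "spark.executor.memory": "4g",
    "spark.executor.cores": "4",
    "spark.cores.max": "4",
    "spark.driver.memory": "4g",
}

# Must be set before the first SparkSession/SparkContext is created in this process.
os.environ["PYSPARK_SUBMIT_ARGS"] = build_submit_args(options)
```

After this, a later `SparkSession.builder.getOrCreate()` in the same process should pick the options up, which you can confirm by reading them back from the session's configuration.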