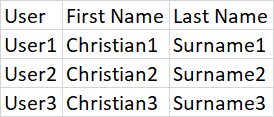

I am often given a CSV and asked to add information to it. For a simplified example I may be handed the following CSV and asked to get the lastlogontimestamp for each user

In order to achieve this I did the following

$csv = import-csv users.csv

$report = "User Audit_{0:dd-MM-yyyy_HHmm}.csv" -f (Get-Date)

$csv | ForEach-Object {

$user = ""

$user = get-aduser $_.user -Properties lastlogontimestamp | Select-Object @{ N = 'LastLogonTimestamp'; E = { [DateTime]::FromFileTime($_.LastLogonTimestamp) } }

[pscustomobject]@{

User = $_.User

'First Name' = $_.'First Name'

'Last Name' = $_.'Last Name'

'LastLogonTimestamp (AD)' = $user.lastlogontimestamp

} | Export-Csv $report -NoTypeInformation -Append

}

This works very well but creating it can be time consuming for spreadsheets with lots of rows and is subject to human error during the pscustomobject construction. So my question is - rather than manually constructing the pscustomobject is there a way to code it (using the row headings to populate each key and value), after which I can append my new additions?

CodePudding user response:

You can use Select-Object with * to copy all existing columns and use calculated properties to add new ones:

$report = "User Audit_{0:dd-MM-yyyy_HHmm}.csv" -f (Get-Date)

import-csv users.csv | ForEach-Object {

$user = get-aduser $_.user -Properties lastlogontimestamp

$_ | Select-Object *,

@{ N = 'LastLogonTimestamp (AD)'; E = { [DateTime]::FromFileTime($user.LastLogonTimestamp) } }

} | Export-Csv $report -NoTypeInformation

Also, as suggested by Mathias R. Jessen, move Export-Csv to the end of the pipeline and remove -Append to improve performance. Otherwise the file would be opened and closed for each row, which is very slow.

I also took the liberty to slightly refactor the code to remove the first Select-Object statement, which is no longer needed. Also you can pipe Import-Csv directly into ForEach-Object, without using a temporary variable.

CodePudding user response:

Part of the reason why it's time-consuming is that you're appending your results for each user. You could reduce how long it takes by simply assigning all your results to a variable and then exporting those results to a CSV at the end like this:

$csv = import-csv users.csv

$report = "User Audit_{0:dd-MM-yyyy_HHmm}.csv" -f (Get-Date)

$results = $csv | ForEach-Object {

$user = ""

$user = get-aduser $_.user -Properties lastlogontimestamp | Select-Object @{ N = 'LastLogonTimestamp'; E = { [DateTime]::FromFileTime($_.LastLogonTimestamp) } }

[pscustomobject]@{

User = $_.User

'First Name' = $_.'First Name'

'Last Name' = $_.'Last Name'

'LastLogonTimestamp (AD)' = $user.lastlogontimestamp

}

}

$results | Export-Csv $report -NoTypeInformation

You could also ignore the variable all together and pipe your results directly to Export-Csv

$csv = import-csv users.csv

$report = "User Audit_{0:dd-MM-yyyy_HHmm}.csv" -f (Get-Date)

$csv | ForEach-Object {

$user = ""

$user = get-aduser $_.user -Properties lastlogontimestamp | Select-Object @{ N = 'LastLogonTimestamp'; E = { [DateTime]::FromFileTime($_.LastLogonTimestamp) } }

[pscustomobject]@{

User = $_.User

'First Name' = $_.'First Name'

'Last Name' = $_.'Last Name'

'LastLogonTimestamp (AD)' = $user.lastlogontimestamp

}

} | Export-Csv $report -NoTypeInformation

In terms of human error. I'm not sure what exactly is "prone to human error" in your code so I can't make a recommendation there.

UPDATE - This should get you what you're looking for

$csv | ForEach-Object {

Get-AdUser $_.user -Properties lastlogontimestamp | Select-Object UserPrincipalName, SamAccountName, GivenName, SurName, @{ N = 'LastLogonTimestamp'; E = { [DateTime]::FromFileTime($_.LastLogonTimestamp) } }

} | Export-Csv $report -NoTypeInformation