I'm trying to do some k-means clustering, but my algorithm converges after just one iteration.

Xnorm=mms.fit_transform(dataML)

cluster_centers = [meanC01, meanC02, meanC03, meanC04]

km = KMeans(n_clusters=4,max_iter=30,random_state=42)

km.fit(cluster_centers)

km.predict(Xnorm)

y_kmeans = km.predict(Xnorm)

#K-labels assigned

print("Labels assigned: ")

print(y_kmeans)

#The lowest SSE value

print("The lowest SSE value: " ,km.inertia_)

#The number of iterations required to converge

print("Num iterations to converge: ",km.n_iter_)

print("Final centers")

print(km.cluster_centers_)

#Clustering evaluation

#Silhouette score

#the closer to 1, the better

silSc=silhouette_score(X,y_kmeans,metric="euclidean")

print("Silhouette score: " , round(silSc,3))

print("\nThese measures need ground truth\n")

The output of the print statements is the following:

Labels assigned:

[2 2 2 ... 2 2 2]

The lowest SSE value: 0.0

Num iterations to converge: 1

Final centers

[[ 8.91661735 8.19164571 7.28941813 11.01087393 9.66623751 7.68937223

10.79608166 10.58748025 12.4907922 9.14605905 4.07722332 8.74891868

7.81256141 9.68941418 8.16819725 12.43025352 6.20820642 7.62733302

10.53154745 8.76984275 9.62075754 6.39738163 7.16050728 6.38184175

10.78785962 8.46715886 9.11383958 7.71452426 8.26977858 7.65773373

5.05325902]

[-5.00771523 -5.20188195 -2.99006607 -6.50605353 -6.09703232 -4.81206434

-5.45380305 -5.96851614 -4.08740353 -4.94564133 -3.74848871 -3.88798456

-3.54267501 -3.31545128 -3.5289669 -5.23113531 -3.02861882 -2.07393902

-2.43206187 -5.96649754 -3.98380319 -1.38585587 -7.52809619 -4.80289282

-5.07892565 -2.69869804 -5.54481921 -4.6469543 -4.68872912 -5.07506579

-4.21190338]

[ 2.45037887 4.13676771 3.80011345 1.72639965 6.20431644 3.19958091

5.49969845 3.50406452 1.72854851 1.93279541 4.49166824 2.44420895

0.58778682 4.39920697 3.18566372 1.54782867 3.9471792 3.41704944

0.87701242 3.16223717 1.43453581 3.40814297 5.75767491 2.20136347

3.11641734 0.95040789 3.41645753 3.12363669 3.50884484 3.72560648

4.30498412]

[-5.15811619 -6.13586949 -7.11294387 -4.71940827 -8.65273641 -5.11589886

-9.44446671 -6.72118314 -8.18793936 -4.86600742 -4.49854142 -6.05955188

-3.68868328 -9.39266332 -6.69004796 -6.88351084 -6.34617737 -7.84613902

-7.24726862 -4.88277056 -5.63399845 -7.47390969 -4.8059051 -3.01932193

-7.31246743 -5.38774461 -5.82137554 -5.21165498 -6.02645256 -5.39091563

-4.66980532]]

I also get this error:

ValueError: Number of labels is 1. Valid values are 2 to n_samples - 1 (inclusive)

Is there any error in my code that is causing this?

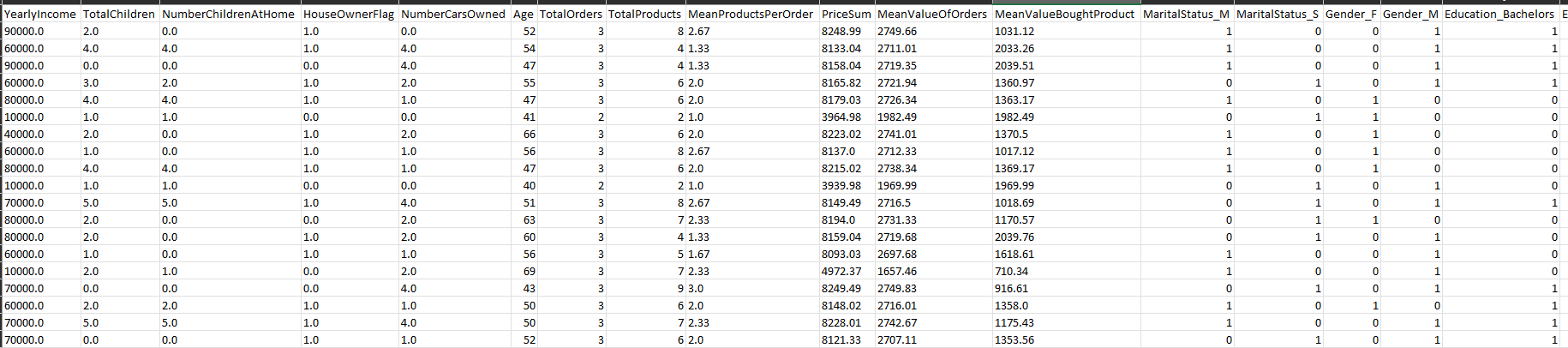

Below is a sample of my dataset

Update

I made the changes you suggested, but now I'm getting this error:

ValueError: init should be either 'k-means++', 'random', a ndarray or a callable, got '[[-5.158116189420493, -6.135869490272886, -7.112943870919114, -4.719408271488778, -8.652736411771514, -5.115898856180195, -9.444466710512515, -6.721183141827833, -8.187939363193856, -4.866007421496122, -4.498541424902004, -6.059551875914619], [2.4503788682948797, 4.136767712097716, 3.800113452319174, 1.7263996510061552, 6.204316437195862, 3.1995809081247324, 5.499698454146857, 3.504064521222991, 1.72854851263446, 1.9327954130937557, 4.491668242286317, 2.444208952435482], [8.91661735243092, 8.19164570547311, 7.289418131440908, 11.010873934094928, 9.666237508380636, 7.689372230181427, 10.796081659572993, 10.58748024786907, 12.490792204659163, 9.14605905236541, 4.077223320288767, 8.748918676524136], [-5.007715234440542, -5.201881954076602, -2.990066071487653, -6.50605352762039, -6.097032315522047, -4.81206434114537, -5.453803052692124, -5.968516137674577, -4.087403530804171, -4.94564133196963, -3.748488710268994, -3.8879845624490703]]' instead.

CodePudding user response:

You are handing just four points to K-Means and then asking it to find four cluster centers? It converges in one iteration because the initial cluster centers are simply those four points, so there is nothing left to improve after that. With the number of clusters k equal to the number of points, the result is also meaningless: every point is its own cluster and the SSE is 0.

Did you mean to use km.fit(Xnorm)?
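To see the effect in isolation, here is a minimal sketch on made-up data. The array `four_points` is a hypothetical stand-in for the four mean vectors in the question, not the real values, and `n_init=10` is just an explicit choice for the demo.

```python
import numpy as np
from sklearn.cluster import KMeans

# Hypothetical stand-ins for the four mean vectors (illustrative values only).
four_points = np.array([
    [0.0, 0.0],
    [10.0, 10.0],
    [-10.0, 5.0],
    [5.0, -10.0],
])

# Same setup as in the question: k equals the number of samples passed to fit().
km = KMeans(n_clusters=4, n_init=10, max_iter=30, random_state=42)
km.fit(four_points)

# Each point ends up as its own centroid, so the within-cluster SSE is zero.
print("SSE:", km.inertia_)
print("Labels:", km.labels_)
print("Centers:\n", km.cluster_centers_)
```

The fitted centers are just the input points in some order, which matches the "Final centers" output in the question.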

CodePudding user response:

Your code fits KMeans with 4 clusters to only 4 data points, which is why it converges that fast. Try fitting on the data instead:

km.fit(Xnorm)

which will fit KMeans to your normalized data. In case you want to specify the initial cluster centers yourself, the documentation states that you can pass an array of shape (n_clusters, n_features) to the init parameter: https://scikit-learn.org/stable/modules/generated/sklearn.cluster.KMeans.html

For instance:

km = KMeans(n_clusters=4, init=cluster_centers, max_iter=30)

km.fit(Xnorm)
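Regarding the error in the update: the message suggests that the installed scikit-learn version rejects a plain list of lists for init, so converting the centers to a NumPy array first is a safe workaround. Below is a rough sketch on synthetic data. `X_demo`, `blob_centers` and `initial_centers` are made-up stand-ins for Xnorm and your mean vectors, and `n_init=1` is set because only one explicit initialization is supplied.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.metrics import silhouette_score

# Synthetic stand-in for Xnorm: 200 samples, 3 features, 4 well-separated blobs.
blob_centers = [[0, 0, 0], [10, 10, 10], [-10, 5, 0], [5, -10, 5]]
X_demo, _ = make_blobs(n_samples=200, centers=blob_centers,
                       cluster_std=1.0, random_state=42)

# Hypothetical starting centers as a plain list of lists, like in the question.
initial_centers = [[1, 1, 1], [9, 9, 9], [-9, 4, 1], [4, -9, 4]]

# Convert to an ndarray of shape (n_clusters, n_features) before passing to init.
init_array = np.asarray(initial_centers, dtype=float)

km = KMeans(n_clusters=4, init=init_array, n_init=1, max_iter=30)
labels = km.fit_predict(X_demo)  # fit on the data, not on the centers

print("Num iterations to converge:", km.n_iter_)
print("SSE:", km.inertia_)
# silhouette_score needs at least 2 distinct labels, which is now the case.
print("Silhouette score:", round(silhouette_score(X_demo, labels, metric="euclidean"), 3))
```

Note that the initial centers have to live in the same feature space as the data passed to fit, with the same number of columns. If mms is a scaler such as MinMaxScaler (an assumption, the question does not show it), centers computed on the unscaled data would need to go through the same transform. Otherwise every sample can end up closest to one center, which would explain the single label and the silhouette error.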