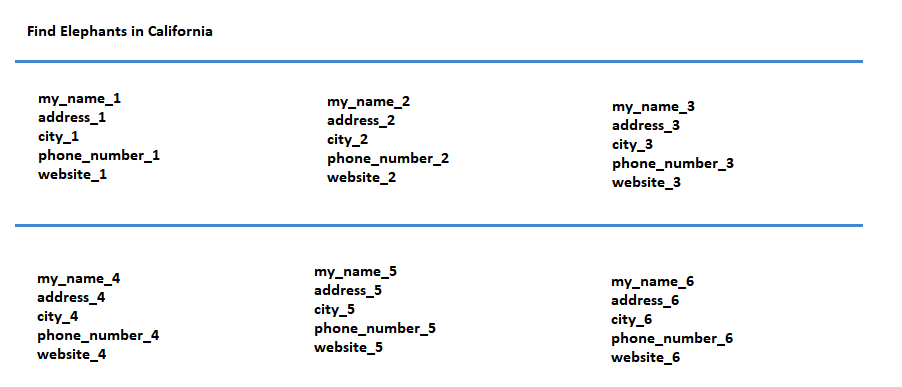

I am working with the R programming language. I am trying to webscrape a page (e.g. "my_website.html") - the webpage looks something like this:

And the source code for this page looks something like this:

<div >

<strong>my_name_1.</strong><br />

address_1<br />

city_1<br />

phone_1<br />

<a href="website1.com" target="_blank">Website</a>

</div>

<div >

<strong>my_name_2</strong><br />

address_2<br />

city_2<br />

phone_2<br />

<a href="website2.com">Website</a>

</div>

<div >

<strong>my_name_2</strong><br />

address_2,<br />

city_2<br />

phone_2

</div>

<div ></div>

<div style='margin:20px !important;'></div>

<div >

<strong>my_name_3</strong><br />

address_3<br />

city_3<br />

phone_3<br />

<a href="website3.com" target="_blank">Website</a>

</div>

<div >

<strong>my_name_4</strong><br />

address_4,<br />

city_4<br />

phone_4

</div>

I am trying to extract the following information into a data frame - this would look something like this:

name address city phone

1 my_name_1 address_1 city_1 phone_1

2 my_name_2 address_2 city_2 phone_2

3 my_name_3 address_3 city_3 phone_3

4 my_name_4 address_4 city_4 phone_4

I found this tutorial (https://www.dataquest.io/blog/web-scraping-in-r-rvest/) and tried to do this:

library(rvest)

simple <- read_html("my_website.html")

Then, I tried different combinations of the following command to try and extract the names, addresses, cities and phone numbers:

simple %>%

html_nodes("strong") %>%

html_text()

simple %>%

html_nodes("bold") %>%

html_text()

simple %>%

html_nodes("br") %>%

html_text()

simple %>%

html_nodes(".br") %>%

html_text()

simple %>%

html_nodes("p")

But so far, none of this is working - But the addresses are not pulling.

Can someone please tell me what I am doing wrong?

Thanks!

CodePudding user response:

Here is a head start:

library(rvest)

url<-"mywebsite.html"

page <-read_html(url)

#find the div tab of class=one_third

b = page %>% html_nodes("div.one_third")

listanswer <- b %>% html_text() %>% strsplit("\\n")

"listanswer" has a vector for each entry. Now comes the had part, each address can have 1 to 3 elements for the name(s) and at the end may or may not have a phone number or website. I think you can go through this list of vectors pick out the city, contains "Vancouver" the element before is the street address the element after, contains "P:" is the phone, and everything before the address are the names.

Good luck.