I want to set up my framework so that a test is listed in the NUnit test playlist (Test Explorer) only if it is set to run; otherwise it should not appear in the list at all.

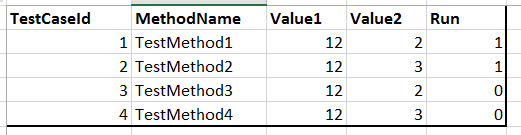

My plan: I will have an external data source (CSV, XLSX or a database) whose rows and columns hold the test data. One of the columns will be a Run flag that decides whether a particular test should run. If the flag is set to false, NUnit should not consider that test at all and should not show it in the test playlist or the report, even if the test method has the [Test] attribute. I am aware of the [Ignore] attribute, but I don't want to use it because ignored tests still show up as "skipped" in the test report and the playlist.

According to the table, only TestMethod1 and TestMethod2 should be listed in the playlist and executed.

Note: I have tried [TestCase] and [TestCaseSource], but with both of them the unwanted test methods still appear in the playlist and the report.
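The filtering step in the plan above can be sketched in plain C#. This is a minimal sketch, assuming a CSV export with a header row and three columns named TestName, Data and Run (hypothetical names; the real layout is only shown in the image). On its own it hides nothing from Test Explorer: the surviving rows still have to be turned into test cases by a [TestCaseSource] method, which is the approach the second answer takes.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical row type: the column names TestName, Data and Run are assumptions,
// not taken from the original spreadsheet.
public record TestRow(string TestName, string Data);

public static class RunFlagFilter
{
    // Parses CSV text whose first line is a header "TestName,Data,Run" and returns
    // only the rows whose Run column is "true" (case-insensitive).
    // Naive split on ',': quoted fields containing commas are not supported.
    public static List<TestRow> EnabledRows(string csvText)
    {
        var rows = new List<TestRow>();
        string[] lines = csvText.Split(new[] { "\r\n", "\n" }, StringSplitOptions.RemoveEmptyEntries);

        // Start at 1 to skip the header line.
        for (int i = 1; i < lines.Length; i++)
        {
            string[] cols = lines[i].Split(',');
            if (cols.Length < 3) continue; // ignore malformed lines

            bool run = string.Equals(cols[2].Trim(), "true", StringComparison.OrdinalIgnoreCase);
            if (run)
            {
                rows.Add(new TestRow(cols[0].Trim(), cols[1].Trim()));
            }
        }
        return rows;
    }
}
```

Inside a TestCaseSource method, each returned TestRow would become `new TestCaseData(row.TestName, row.Data)`, so rows with Run set to false are never generated as test cases in the first place.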

Answer:

I'm afraid the answer is no.

Attributes like [Test] and [TestCase] are used by the NUnit framework to identify tests. A Playlist is an external construct, about which NUnit knows nothing. It's just a list of which NUnit tests should be run.

Various runners have different ways to tell NUnit which tests to run. Test Explorer is one such runner; it works through the NUnit 3 VS Adapter to select NUnit tests. The adapter doesn't know anything about playlists either, by the way.

So your problem is entirely local to Test Explorer and its playlists. You would need some way to choose which NUnit tests go into the playlist, but you cannot control whether NUnit actually finds the tests: NUnit discovers all the tests before each test run.

Answer:

I explored a bit and achieved what I wanted. Surprisingly, Charlie suggested the same, so I am confident. There may be other ways, and the code can be optimised further; here is what I have tried so far. Note: there will be just one method in the list that is not itself executed (it shows as skipped). You cannot avoid that, because that same method generates the actual test cases for the list.

```csharp
using System.Collections.Generic;
using System.Data.OleDb;
using NUnit.Framework;

namespace AutoRunNunit
{
    internal class TestClass
    {
        // One NUnit test case is generated per enabled row returned by DataProvider.
        [TestCaseSource(typeof(TestDataDummy), "DataProvider")]
        public void RunTest(string methodName, string data)
        {
            // Dispatch to the public static method named in the data row.
            typeof(TestClass).GetMethod(methodName).Invoke(null, new object[] { data });
        }

        public static void TestMethod1(string data)
        {
            // Expected value first, actual value second.
            Assert.AreEqual("12", data);
        }

        public static void TestMethod2(string data)
        {
            Assert.AreEqual("13", data);
        }

        public class TestDataDummy
        {
            private static IEnumerable<TestCaseData> DataProvider()
            {
                var testCases = new List<TestCaseData>();
                foreach (string[] row in GetDataFromExcelDummy())
                {
                    // Column 1 holds the method name, column 2 the test data;
                    // the method name is also used as the displayed test name.
                    testCases.Add(new TestCaseData(row[1], row[2]).SetName(row[1]));
                }
                return testCases;
            }
        }

        public static List<string[]> GetDataFromExcelDummy()
        {
            string connectionString = "";
            string path = @"C:\Test\";
            string filename = "TestData.xls";

            // Check ".xlsx" first: ".xlsx" also contains ".xls", so testing
            // ".xls" first would make the ACE branch unreachable.
            if (filename.EndsWith(".xlsx"))
            {
                connectionString = "Provider=Microsoft.ACE.OLEDB.12.0;Data Source=" + path + filename + ";Extended Properties=\"Excel 12.0;HDR=Yes;IMEX=2\"";
            }
            else if (filename.EndsWith(".xls"))
            {
                connectionString = "Provider=Microsoft.Jet.OLEDB.4.0;Data Source=" + path + filename + ";Extended Properties=\"Excel 8.0;HDR=Yes;IMEX=2\"";
            }

            string query = "SELECT * FROM [Data01$]";
            var list = new List<string[]>();

            using (var connection = new OleDbConnection(connectionString))
            using (var command = new OleDbCommand(query, connection))
            {
                connection.Open();
                using (OleDbDataReader reader = command.ExecuteReader())
                {
                    while (reader.Read())
                    {
                        // Copy every column of the current row as a string
                        // (no join/split round trip, so values containing spaces stay intact).
                        var fields = new string[reader.FieldCount];
                        for (int i = 0; i < reader.FieldCount; i++)
                        {
                            fields[i] = reader[i].ToString();
                        }

                        // Column 4 is the Run flag: rows marked "0" never become test cases.
                        if (!fields[4].Equals("0"))
                        {
                            list.Add(fields);
                        }
                    }
                }
            }

            return list;
        }
    }
}
```
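RunTest above looks up its target with `typeof(TestClass).GetMethod(methodName)`. If the name in the sheet does not match a public method, GetMethod returns null and the call to Invoke fails with a NullReferenceException; and any exception thrown by the invoked method arrives wrapped in a TargetInvocationException. One possible refinement, sketched here under the assumption that the test bodies can be registered up front, is a dictionary from the name column to a delegate. TestDispatch and Check are hypothetical names, and Check only stands in for NUnit's Assert.AreEqual so the sketch runs without NUnit.

```csharp
using System;
using System.Collections.Generic;

public static class TestDispatch
{
    // Hypothetical registry mapping the method-name column to the code to run.
    private static readonly Dictionary<string, Action<string>> Tests =
        new Dictionary<string, Action<string>>
        {
            ["TestMethod1"] = data => Check("12", data),
            ["TestMethod2"] = data => Check("13", data),
        };

    public static void Run(string methodName, string data)
    {
        // An unknown name fails with a clear message instead of a NullReferenceException.
        if (!Tests.TryGetValue(methodName, out Action<string> test))
        {
            throw new ArgumentException($"No test registered under '{methodName}'.", nameof(methodName));
        }
        // Called directly, so exceptions are not wrapped in TargetInvocationException.
        test(data);
    }

    // Stand-in for NUnit's Assert.AreEqual(expected, actual).
    private static void Check(string expected, string actual)
    {
        if (expected != actual)
        {
            throw new InvalidOperationException($"Expected '{expected}' but was '{actual}'.");
        }
    }
}
```

In the real fixture, RunTest would call `TestDispatch.Run(methodName, data)` and the lambdas would call Assert.AreEqual directly. The trade-off is that every new test has to be added to the dictionary, whereas the reflection version picks up any public static method automatically.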