I'm following the python example from the

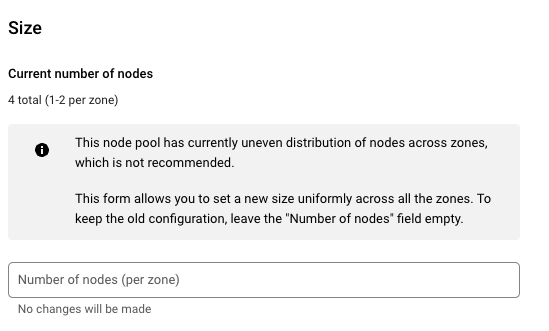

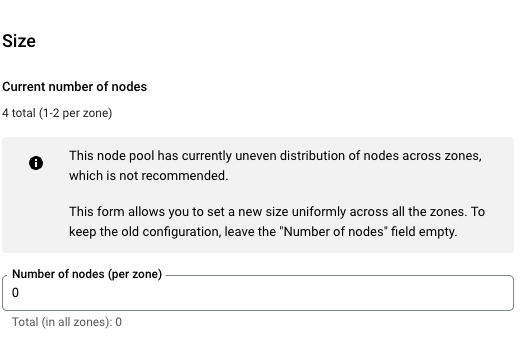

Setting it manually works: all my nodes are gone and none of the pods get scheduled. When I do it via the API, though, the field is just set to blank, which seems to leave one node behind, so some of my pods still get scheduled, which I don't want.

Is nodeCount the correct field to set the number of nodes per zone? I'm fairly new to GKE and Kubernetes in general, so I apologise if this is a very basic question. I also looked around for similar questions but haven't found anything close.

I do know GKE needs at least one node to run system pods, but in this case I need all of them gone which should be fine because I'm managing my cluster using GCP?

EDIT: Before running the script, I set the node count per zone to 1 and enabled the auto-scaler. After running the script, the node count per zone is blank (as in the image above) and the auto-scaler is disabled.

CodePudding user response:

The following works for me:

PROJECT=...
CLUSTER=...
LOCATION=...
NODE_POOL=...

ACCOUNT="tester"
ROLE="roles/container.clusterAdmin"

# Create the service account
gcloud iam service-accounts create ${ACCOUNT} \
  --project=${PROJECT}

EMAIL=${ACCOUNT}@${PROJECT}.iam.gserviceaccount.com

# Create a key for the service account
gcloud iam service-accounts keys create ${PWD}/${ACCOUNT}.json \
  --iam-account=${EMAIL} \
  --project=${PROJECT}

# Grant the role to the service account
gcloud projects add-iam-policy-binding ${PROJECT} \
  --member=serviceAccount:${EMAIL} \
  --role=${ROLE}

export CLUSTER
export LOCATION
export NODE_POOL
export GOOGLE_APPLICATION_CREDENTIALS=${PWD}/${ACCOUNT}.json

Then:

import google.auth
from googleapiclient.discovery import build
from os import getenv

# Picks up the key file named by GOOGLE_APPLICATION_CREDENTIALS
credentials, project = google.auth.default()

cluster = getenv("CLUSTER")
location = getenv("LOCATION")
node_pool = getenv("NODE_POOL")

gke = build(
    "container",
    "v1",
    credentials=credentials
)

name = f"projects/{project}/locations/{location}/clusters/{cluster}/nodePools/{node_pool}"

# Resize the node pool to zero nodes
body = {
    "nodeCount": 0
}

rqst = gke.projects().locations().clusters().nodePools().setSize(
    name=name,
    body=body
)
resp = rqst.execute()
print(resp)

# Disable autoscaling on the node pool
body = {
    "autoscaling": {
        "enabled": False
    }
}

rqst = gke.projects().locations().clusters().nodePools().setAutoscaling(
    name=name,
    body=body
)
resp = rqst.execute()
print(resp)

Update

I think I understand your concern with zero vs null in the number of nodes but I don't observe the behavior where a node remains; all the nodes are deleted.

gcloud container node-pools describe default-pool \
  --cluster=${CLUSTER} \
  --zone=${ZONE} \
  --project=${PROJECT} \
  --format="value(initialNodeCount)"

Returns e.g. 1

And

kubectl get nodes --output=name | wc -l

Returns e.g. 1

Then, after running the equivalent of setSize(0), the two commands return the equivalent of null, which doesn't accord with the NodePool spec.

initialNodeCount is an integer, and I think it's reasonable to expect the value to be 0 rather than the field being absent, particularly since the field is not marked as optional. I understand why this behavior occurs, but I agree that it's confusing.

gcloud container node-pools describe default-pool --cluster=${CLUSTER} --zone=${ZONE} --project=${PROJECT} --format="value(initialNodeCount)"

NOTE

initialNodeCount is not present in the NodePool when the size is zero.
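When reading the node pool from code, the absent field can be handled explicitly. A minimal sketch, assuming the node pool has already been parsed into a plain dict (for example from a JSON response) and that a missing initialNodeCount means zero, as observed above; the helper name initial_node_count is hypothetical:

```python
def initial_node_count(node_pool: dict) -> int:
    """Return initialNodeCount, treating an absent field as zero."""
    # Assumption: a missing key means the pool has been resized to 0,
    # which matches the behavior described in this answer.
    return int(node_pool.get("initialNodeCount", 0))


# A pool resized to zero (field absent) and a pool with one node per zone.
print(initial_node_count({"name": "default-pool"}))
print(initial_node_count({"name": "default-pool", "initialNodeCount": 1}))
```

This keeps the "absent means zero" assumption in one place rather than spreading None checks through the script.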

kubectl get nodes \
  --output=yaml

Correctly returns an empty list of items:

apiVersion: v1
items: []
kind: List
metadata:
  resourceVersion: ""
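The same check can be scripted. A small sketch, assuming the output was captured with --output=json instead of YAML (the same List object in JSON form); count_nodes is a hypothetical helper name:

```python
import json


def count_nodes(kubectl_json: str) -> int:
    """Count the entries in the items list of a kubectl List object."""
    # Assumption: the input is the JSON form of the List shown above.
    return len(json.loads(kubectl_json).get("items", []))


# JSON equivalent of the empty list shown above.
sample = '{"apiVersion": "v1", "items": [], "kind": "List", "metadata": {"resourceVersion": ""}}'
print(count_nodes(sample))
```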

Consider filing an issue on Google's public Issue Tracker (for Kubernetes Engine) to understand what Google Engineering thinks.

CodePudding user response:

For others who face the same issue: apparently it just needs a delay between the two calls, since operations are still being executed on the cluster. It seems 5 minutes was needed in this case, so:

import time

# ...

# Resize the node pool; the parentheses let the call chain span lines
(
    gke.projects()
    .locations()
    .clusters()
    .nodePools()
    .setSize(name=name, body=set_node_pool_size_request_body)
    .execute()
)

# Wait 5 minutes before disabling the auto-scaler
time.sleep(300)

(
    gke.projects()
    .locations()
    .clusters()
    .nodePools()
    .setAutoscaling(name=name, body=set_node_pool_autoscaling_request_body)
    .execute()
)
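A fixed sleep may be longer or shorter than the resize actually takes. As an alternative, here is a hedged sketch of a polling loop that waits until a status reads "DONE" or a timeout passes. The function wait_until_done and its parameters are hypothetical, and it assumes the status of the pending operation can be fetched as a string through a callable you supply:

```python
import time
from typing import Callable


def wait_until_done(
    fetch_status: Callable[[], str],
    interval_s: float = 10.0,
    timeout_s: float = 600.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Poll fetch_status until it returns "DONE" or timeout_s elapses.

    Returns True if "DONE" was seen, False if the timeout was reached.
    """
    deadline = time.monotonic() + timeout_s
    while True:
        if fetch_status() == "DONE":
            return True
        if time.monotonic() >= deadline:
            return False
        # Pause between polls so the API is not hammered.
        sleep(interval_s)


# Stand-in for a real status lookup: reports DONE on the third poll.
statuses = iter(["PENDING", "RUNNING", "DONE"])
print(wait_until_done(lambda: next(statuses), sleep=lambda _s: None))
```

In the script above, fetch_status could wrap a lookup of the operation returned by setSize (the Kubernetes Engine API exposes operations under projects/locations), but that wiring is an assumption and is not tested here.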