My model has two inputs and one output.

Input: [Temperature, Humidity]

Output: [wattage]

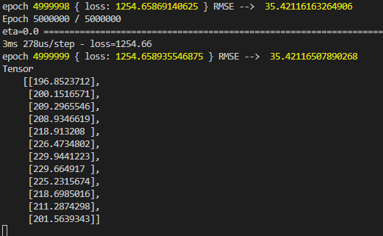

I trained the model as follows. Even after 5 million epochs, it does not work properly.

Did I choose the wrong option?

var input_data = [

[-2.4,2.7,9,14.2,17.1,22.8,281,25.9,22.6,15.6,8.2,0.6],

[58,56,63,54,68,73,71,74,71,70,68,62]

];

var power_data = [239,224,189,189,179,192,243,317,224,190,189,202];

var reason_data = tf.tensor2d(input_data);

var result_data = tf.tensor(power_data);

var X = tf.input({ shape: [2] });

var Y = tf.layers.dense({ units: 1 }).apply(X);

var model = tf.model({ inputs: X, outputs: Y });

var compileParam = { optimizer: tf.train.adam(), loss: tf.losses.meanSquaredError }

model.compile(compileParam);

var fitParam = {

epochs: 500000,

callbacks: {

onEpochEnd: function (epoch, logs) {

console.log('epoch', epoch, logs, "RMSE --> ", Math.sqrt(logs.loss));

}

}

}

model.fit(reason_data, result_data, fitParam).then(function (result) {

var final_result = model.predict(reason_data);

final_result.print();

model.save('file:///path/');

});

The following is the result after 5 million epochs.

The predictions should match power_data, but they do not.

What should I fix?

CodePudding user response:

While there is rarely one simple reason to point to when a model doesn't perform the way you would expect, here are some options to consider:

- You don't have enough data points. Twelve is not nearly enough to get an accurate result.

- You need to normalize the input tensors. Given that your two features [temperature and humidity] have different ranges, they need to be normalized to give them equal opportunity to influence the output. The following is a normalization function you could start with:

function normalize(tensor, min, max) {

const result = tf.tidy(function() {

// Find the minimum value contained in the Tensor.

const MIN_VALUES = min || tf.min(tensor, 0);

// Find the maximum value contained in the Tensor.

const MAX_VALUES = max || tf.max(tensor, 0);

// Now subtract the MIN_VALUES from every value in the Tensor

// And store the results in a new Tensor.

const TENSOR_SUBTRACT_MIN_VALUE = tf.sub(tensor, MIN_VALUES);

// Calculate the range size of possible values.

const RANGE_SIZE = tf.sub(MAX_VALUES, MIN_VALUES);

// Calculate the adjusted values divided by the range size as a new Tensor.

const NORMALIZED_VALUES = tf.div(TENSOR_SUBTRACT_MIN_VALUE, RANGE_SIZE);

// Return the important tensors.

return {NORMALIZED_VALUES, MIN_VALUES, MAX_VALUES};

});

return result;

}

- You should try a different optimizer. Adam might be the best choice, but for a linear regression problem such as this, you should also consider Stochastic Gradient Descent (SGD).
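To make the normalization point above concrete, here is a minimal plain-JavaScript sketch (no TensorFlow.js) of the same per-column min-max scaling applied to the data from the question. The helper names `transpose` and `minMaxNormalize` are hypothetical and only for illustration. The sketch also assumes that the data should be arranged as one row per sample, since a model declared with input shape [2] expects each row to hold one [temperature, humidity] pair, while the question stores one row per feature.

```javascript
// Feature rows exactly as posted in the question: one row per feature.
const inputData = [
  [-2.4, 2.7, 9, 14.2, 17.1, 22.8, 281, 25.9, 22.6, 15.6, 8.2, 0.6], // temperature
  [58, 56, 63, 54, 68, 73, 71, 74, 71, 70, 68, 62],                  // humidity
];

// Hypothetical helper: turn [features][samples] into [samples][features].
function transpose(rows) {
  return rows[0].map((_, col) => rows.map((row) => row[col]));
}

// Hypothetical helper: min-max scale each column of a [samples][features] array,
// mirroring what tf.min(tensor, 0) and tf.max(tensor, 0) compute per column.
function minMaxNormalize(samples) {
  const columns = transpose(samples);
  const mins = columns.map((column) => Math.min(...column));
  const maxs = columns.map((column) => Math.max(...column));
  const normalized = samples.map((row) =>
    row.map((value, j) => (value - mins[j]) / (maxs[j] - mins[j]))
  );
  return { normalized, mins, maxs };
}

const samples = transpose(inputData); // 12 rows of [temperature, humidity]
const { normalized, mins, maxs } = minMaxNormalize(samples);

console.log('rows:', samples.length, 'columns:', samples[0].length);
console.log('column minimums:', mins, 'column maximums:', maxs);
console.log('first normalized sample:', normalized[0]);
```

The temperature value 281 is kept exactly as posted. If it turns out to be a typo (for example for 28.1), it is worth correcting before training, because a single extreme value stretches the min-max range and squeezes every other temperature toward zero.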

Check out this sample code for an example that uses normalization and SGD. I ran your data points through the code (after making the changes to the tensors so they fit your data), and I was able to reduce the loss to less than 40. There is room for improvement, but that's where adding more data points comes in.
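For readers who want to see what an SGD update does on this kind of problem, here is a rough plain-JavaScript sketch. It is not the sample code referred to above and does not use TensorFlow.js. It fits a linear model on min-max scaled inputs, which is the same functional form as a single dense unit. The function names `scale`, `mse`, and `trainSgd`, the learning rate of 0.01, and the 1000 epochs are all assumptions chosen for illustration, not tuned values.

```javascript
// Data exactly as posted in the question.
const temperature = [-2.4, 2.7, 9, 14.2, 17.1, 22.8, 281, 25.9, 22.6, 15.6, 8.2, 0.6];
const humidity = [58, 56, 63, 54, 68, 73, 71, 74, 71, 70, 68, 62];
const power = [239, 224, 189, 189, 179, 192, 243, 317, 224, 190, 189, 202];

// Min-max scale one feature to the range [0, 1].
function scale(values) {
  const min = Math.min(...values);
  const max = Math.max(...values);
  return values.map((v) => (v - min) / (max - min));
}

const t = scale(temperature);
const h = scale(humidity);

// Mean squared error of the linear model w[0]*t + w[1]*h + b.
function mse(w, b) {
  let sum = 0;
  for (let i = 0; i < power.length; i++) {
    const err = w[0] * t[i] + w[1] * h[i] + b - power[i];
    sum += err * err;
  }
  return sum / power.length;
}

// Stochastic gradient descent: parameters are updated after every single sample.
function trainSgd(epochs, learningRate) {
  const w = [0, 0];
  let b = 0;
  for (let epoch = 0; epoch < epochs; epoch++) {
    for (let i = 0; i < power.length; i++) {
      const err = w[0] * t[i] + w[1] * h[i] + b - power[i];
      // Gradient of err^2 with respect to each parameter.
      w[0] -= learningRate * 2 * err * t[i];
      w[1] -= learningRate * 2 * err * h[i];
      b -= learningRate * 2 * err;
    }
  }
  return { w, b };
}

const initialLoss = mse([0, 0], 0);
const { w, b } = trainSgd(1000, 0.01); // assumed settings, not tuned
const finalLoss = mse(w, b);

console.log('loss before training:', initialLoss);
console.log('loss after training:', finalLoss);
console.log('weights:', w, 'bias:', b);
```

Because the inputs are scaled to [0, 1], a fixed small learning rate stays stable here. With only twelve samples, a linear model can only capture a rough trend, so the remaining loss reflects the lack of data as much as the choice of optimizer.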