I want to scrape Congressional stock trades from

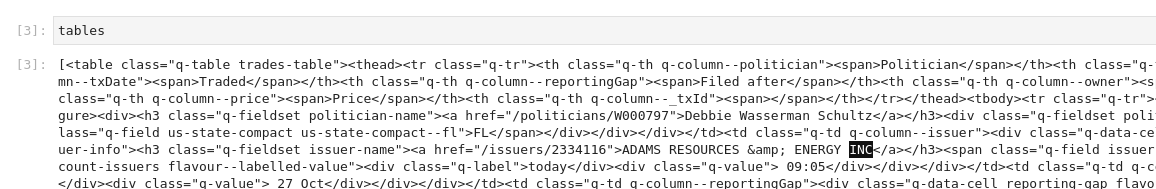

Here is where I found the span tag:

Company tickers are 1 to 5 characters in length, and I have failed to regex tickers because there are many varieties of company suffixes (e.g., "INC", "CORP", "PLC", "SE", etc.), and not all company names have suffixes.

How can I either replace span tags with whitespace to separate company names and tickers or parse the span as another column?

Here is my code:

import pandas as pd

import yfinance as yf

from selenium import webdriver

from bs4 import BeautifulSoup

import time

import datetime

def get_url(page=1, pageSize=50, assetType='stock'):

if page == 1:

return f'https://www.capitoltrades.com/trades?assetType={assetType}&pageSize={pageSize}'

elif page > 1:

return f'https://www.capitoltrades.com/trades?assetType={assetType}&page={page}&pageSize={pageSize}'

else:

return None

driver = webdriver.Firefox()

driver.get(get_url(page=1))

driver.implicitly_wait(10)

time.sleep(1)

soup = BeautifulSoup(driver.page_source, 'lxml')

tables = soup.find_all('table')

table = pd.read_html(str(tables))[0]

driver.close()

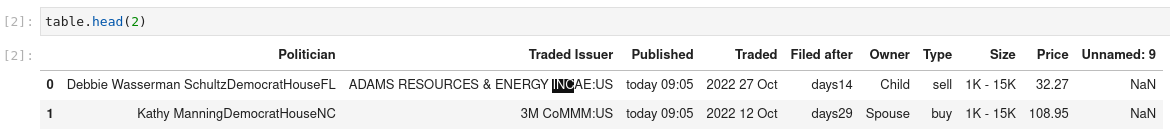

CodePudding user response:

To separate company names and tickers or parse the span as another column aka to get overall neat and clean ResultSet, you can change your tool selection strategy a bit. In this case, It would be better to apply bs4 with pandas DataFrame instead of pd.read_html() method.

Full working code as an example:

import pandas as pd

from selenium import webdriver

from selenium.webdriver.chrome.service import Service

from webdriver_manager.chrome import ChromeDriverManager

from bs4 import BeautifulSoup

import time

driver = webdriver.Chrome(service=Service(ChromeDriverManager().install()))

#The base url: https://www.capitoltrades.com/trades?assetType=stock&pageSize=50

data = []

for page in range(1, 5):

driver.get(f'https://www.capitoltrades.com/trades?assetType=stock&pageSize=50&page={page}')

driver.maximize_window()

time.sleep(3)

soup = BeautifulSoup(driver.page_source, 'lxml')

for row in soup.select('table.q-table.trades-table > tbody tr'):

Politician = row.select_one('[] > a').text.strip()

Politician_info = row.select_one('[]').get_text(' ',strip=True)

Traded_Issuer = row.select_one('[] > a').text.strip()

Issuer_ticker =row.select_one('span[]').text.strip()

Published = row.select_one('[] .q-value').text.strip()

Traded = row.select_one('[] .q-value')

Traded = Traded.text.strip() if Traded else None

Filed_after = row.select_one('[] .q-value').text.strip()

Owner =row.select_one('[] span').text.strip()

_type = row.select_one('[]').get_text(strip=True)

Size = row.select_one('[] > div').get_text(strip=True)

#Size = Size.text.strip() if Size else None

Price =row.select_one('[]').text.strip()

data.append({

'Politician':Politician,

'Politician_info':Politician_info,

'Traded_Issuer':Traded_Issuer,

'Issuer_ticker':Issuer_ticker,

'Published':Published,

'Traded':Traded,

'Filed_after':Filed_after,

'Owner':Owner,

'Type':_type,

'Size':Size,

'Price':Price

})

df = pd.DataFrame(data)

print(df)

Output:

Politician Politician_info Traded_Issuer Issuer_ticker ... Owner Type Size Price

0 Debbie Wasserman Schultz Democrat House FL ADAMS RESOURCES & ENERGY INC AE:US ... Child sell 1K - 15K 32.27

1 Kathy Manning Democrat House NC 3M Co MMM:US ... Spouse buy 1K - 15K 108.95

2 Kathy Manning Democrat House NC Accenture PLC ACN:US ... Spouse buy 1K - 15K 250.07

3 Kathy Manning Democrat House NC Adobe Inc ADBE:US ... Spouse sell 1K - 15K 286.15

4 Kathy Manning Democrat House NC Alphabet Inc GOOGL:US ... Spouse buy 1K - 15K 97.56

.. ... ... ... ... ... ... ... ... ...

195 Diana Harshbarger Republican House TN CME Group Inc CME:US ... Joint sell 1K - 15K 176.26

196 Diana Harshbarger Republican House TN CME Group Inc CME:US ... Spouse sell 1K - 15K 176.26

197 Diana Harshbarger Republican House TN The Home Depot Inc HD:US ... Undisclosed sell 1K - 15K 268.69

198 Diana Harshbarger Republican House TN The Home Depot Inc HD:US ... Undisclosed sell 1K - 15K 268.69

199 Diana Harshbarger Republican House TN The Home Depot Inc HD:US ... Joint sell 1K - 15K 268.69

[200 rows x 11 columns]

CodePudding user response:

Btw, you don't need to go through those steps with soup and find_all - read_html already uses the same [or at least very similar] parsers as bs4 so you could just have

table = pd.read_html(driver.page_source)[0] # , flavor='lxml')[0] # default

or even directly pd.read_html(get_url(page=1)) - it doesn't always work for all pages, but it seems to work for your page; it won't allow for the solutions I'm about to suggest, but I thought it was worth mentioning this convenience in case you didn't already know.

Anyway, my first solution is going to seem a bit hacky, but it has worked for me so far - since, read_html doesn't seem to have any string-separator argument like bs4's get_text does, we can try to create the same effect by just adding spaces to the end of every tag in the html using .replace.

table = pd.read_html(driver.page_source.replace('</', ' </'))

If you are wary of messing with text-replace on html strings, or are worried about adding unwanted spaces (even though it doesn't, as far I have seen), or just want more control of how the text is extracted from table cells, you can also convert the bs4 table tag to a python dictionary using get_text [with space (' ') separator] and list comprehension.

tableSoup = soup.find('table')

tableHeaders = [

h.get_text(' ', strip=True)

for h in tableSoup.select('thead tr th')

]

tableData = [{h: c.get_text(' ', strip=True) for h, c in zip(

tableHeaders, [cell for cell in r.select('td')]

)} for r in tableSoup.select('tbody tr')]

table = pd.DataFrame(tableData)

You could even replace get_text with your own function if you want to customize how cell values are extracted and/or processed, but as it is, it [and the first method with .replace] creates a DataFrame like:

| Politician | Traded Issuer | Published | Traded | Filed after | Owner | Type | Size | Price |

|---|---|---|---|---|---|---|---|---|

| Pete Sessions Republican House TX | US TREASURY NOTE N/A | yesterday 14:05 | 2022 9 Nov | days 1 | Undisclosed | buy | 15K - 50K | N/A |

| Pete Sessions Republican House TX | US TREASURY NOTE N/A | yesterday 14:05 | 2022 7 Nov | days 3 | Undisclosed | buy | 50K - 100K | N/A |

| Pete Sessions Republican House TX | US TREASURY NOTE N/A | yesterday 14:05 | 2022 7 Nov | days 3 | Undisclosed | buy | 1K - 15K | N/A |

| Brad Sherman Democrat House CA | US TREASURY NOTE N/A | yesterday 14:05 | 2022 3 Nov | days 7 | Undisclosed | sell | 250K - 500K | N/A |

| Brad Sherman Democrat House CA | US TREASURY NOTE N/A | yesterday 14:05 | 2022 3 Nov | days 7 | Undisclosed | sell | 500K - 1M | N/A |

| Brad Sherman Democrat House CA | US TREASURY NOTE N/A | yesterday 14:05 | 2022 3 Nov | days 7 | Undisclosed | sell | 250K - 500K | N/A |

| Brad Sherman Democrat House CA | US TREASURY NOTE N/A | yesterday 14:05 | 2022 7 Nov | days 3 | Undisclosed | sell | 250K - 500K | N/A |

| Brad Sherman Democrat House CA | US TREASURY NOTE N/A | yesterday 14:05 | 2022 3 Nov | days 7 | Undisclosed | sell | 250K - 500K | N/A |

| Brad Sherman Democrat House CA | US TREASURY NOTE N/A | yesterday 14:05 | 2022 3 Nov | days 7 | Undisclosed | sell | 15K - 50K | N/A |

| Debbie Wasserman Schultz Democrat House FL | ADAMS RESOURCES & ENERGY INC AE:US | yesterday 14:05 | 2022 27 Oct | days 14 | Child | sell | 1K - 15K | 32.27 |

| Kathy Manning Democrat House NC | 3M Co MMM:US | yesterday 14:05 | 2022 12 Oct | days 29 | Spouse | buy | 1K - 15K | 108.95 |

| Kathy Manning Democrat House NC | Accenture PLC ACN:US | yesterday 14:05 | 2022 12 Oct | days 29 | Spouse | buy | 1K - 15K | 250.07 |

| Kathy Manning Democrat House NC | Adobe Inc ADBE:US | yesterday 14:05 | 2022 12 Oct | days 29 | Spouse | sell | 1K - 15K | 286.15 |

| Kathy Manning Democrat House NC | Alphabet Inc GOOGL:US | yesterday 14:05 | 2022 12 Oct | days 29 | Spouse | buy | 1K - 15K | 97.56 |

| Kathy Manning Democrat House NC | Amazon.com Inc AMZN:US | yesterday 14:05 | 2022 12 Oct | days 29 | Spouse | buy | 1K - 15K | 112.9 |

[Markdown for the table above was copied from the output of print(table.drop([''], 'columns').to_markdown(index=False)).]