I tried to convert a TF2 Keras model with a Conv2DTranspose layer to TFLite.

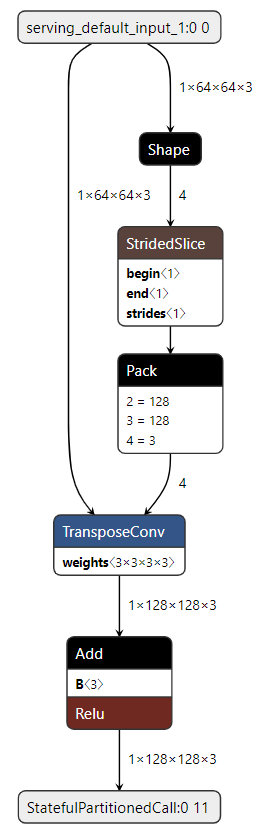

But after conversion to TFLite, the model automatically contains extra Shape and Pack operations. That is quite a problem for me, because these operations are not supported on the mobile GPU. Why does TFLite do this, and how do I fix it?

TFLite conversion code:

```python
import os

import tensorflow as tf
from tensorflow import keras


def get_model():
    # No batch_size given, so the batch dimension is None (dynamic)
    inputs = tf.keras.Input(shape=(64, 64, 3))
    outputs = keras.layers.Conv2DTranspose(
        3, 3, strides=2, padding="same", activation="relu", name="deconv1")(inputs)
    model = keras.Model(inputs=inputs, outputs=outputs, name="custom")

    # Run the model once on a dummy batch
    x = tf.ones((1, 64, 64, 3))
    y = model(x)

    return model


def convert_model(saved_model_dir, tflite_save_dir):
    converter = tf.lite.TFLiteConverter.from_saved_model(saved_model_dir)
    converter.optimizations = [tf.lite.Optimize.DEFAULT]
    converter.target_spec.supported_types = [tf.float32]
    tflite_model = converter.convert()

    with open(tflite_save_dir, "wb") as f:
        f.write(tflite_model)


if __name__ == "__main__":
    model = get_model()

    current_path = os.path.dirname(os.path.realpath(__file__))
    save_dir = os.path.join(current_path, "custom/1/")
    tf.saved_model.save(model, save_dir)

    tflite_save_dir = os.path.join(current_path, "my_model.tflite")
    convert_model(save_dir, tflite_save_dir)

    # test_tflite() is the asker's own helper; it is not shown in the question
    test_tflite(tflite_save_dir)
```

Visualized TFLite model:

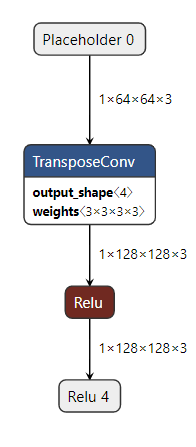

P.S. A graph built with tf.compat.v1.layers.conv2d_transpose does not contain the Shape-StridedSlice-Pack operations, which means that model can run on the mobile GPU. Why does this difference occur?
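To see where the extra operations come from, the sketch below traces a small stand-in for the deconvolution under two input signatures. It assumes that, as in the tf.keras (Keras 2) implementation of Conv2DTranspose, the batch size is read with `tf.shape(inputs)[0]` and packed into the output shape with `tf.stack`; the function `deconv` and its all-ones filter are hypothetical illustrations, not the Keras source. With a `None` batch dimension the traced graph contains a real `Shape` op, while with a fixed batch of 1 `tf.shape` is resolved to a constant at trace time.

```python
import tensorflow as tf


def deconv(x):
    # Hypothetical stand-in for Conv2DTranspose(3, 3, strides=2, padding="same"):
    # the batch size is read from the input and packed into output_shape.
    filters = tf.ones((3, 3, 3, 3))  # [height, width, out_channels, in_channels]
    batch = tf.shape(x)[0]
    output_shape = tf.stack([batch, 128, 128, 3])
    return tf.nn.conv2d_transpose(x, filters, output_shape, strides=2, padding="SAME")


def graph_op_types(input_spec):
    # Trace deconv for the given input signature and collect the TF op types in its graph
    concrete = tf.function(deconv).get_concrete_function(input_spec)
    return {op.type for op in concrete.graph.get_operations()}


# Like tf.keras.Input(shape=(64, 64, 3)): batch dimension unknown
dynamic_ops = graph_op_types(tf.TensorSpec([None, 64, 64, 3], tf.float32))
# Like tf.keras.Input(shape=(64, 64, 3), batch_size=1) or the TF1 placeholder
static_ops = graph_op_types(tf.TensorSpec([1, 64, 64, 3], tf.float32))

print("dynamic batch:", sorted(dynamic_ops))
print("fixed batch:  ", sorted(static_ops))
```

In the fixed-batch graph the remaining StridedSlice and Pack ops only consume constants, which is what allows the converter to fold them into a single constant output shape.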

TFLite conversion code for the TF1 version:

```python
import os

import tensorflow as tf

tfv1 = tf.compat.v1


def generate_tflite_model_from_v1(saved_model_dir):
    tflite_save_dir = os.path.join(saved_model_dir, "my_model_tfv1.tflite")

    # Build the graph TF1-style; the placeholder has a fully static shape,
    # including a batch dimension of 1
    with tf.Graph().as_default() as graph:
        inputs = tfv1.placeholder(tf.float32, shape=[1, 64, 64, 3])
        x = tfv1.layers.conv2d_transpose(inputs, 3, 3, strides=2, padding="same", name="deconv1")
        outputs = tf.nn.relu(x)

    with graph.as_default(), tfv1.Session(graph=graph) as session:
        session.run(tfv1.global_variables_initializer())
        converter = tfv1.lite.TFLiteConverter.from_session(
            session, input_tensors=[inputs], output_tensors=[outputs])

        with open(tflite_save_dir, "wb") as f:
            f.write(converter.convert())
```

Answer:

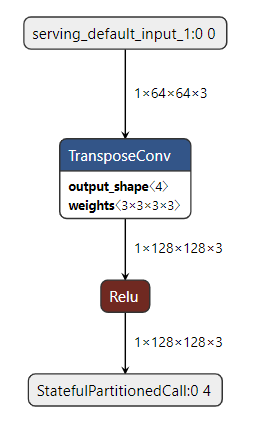

I found the solution.

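It is quite simple: specify the batch size in the model's input. Without it, the batch dimension is None, so Conv2DTranspose has to compute its output shape at run time from tf.shape(inputs), and the converter keeps that computation as the Shape, StridedSlice and Pack operations feeding TRANSPOSE_CONV. With a fixed batch size every dimension is static, so the output shape becomes a constant and those operations are folded away, just as with the TF1 placeholder of shape [1, 64, 64, 3].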

```python
import os

import tensorflow as tf
from tensorflow import keras


def get_model():
    inputs = tf.keras.Input(shape=(64, 64, 3), batch_size=1)  # specify batch size
    outputs = keras.layers.Conv2DTranspose(
        3, 3, strides=2, padding="same", activation="relu", name="deconv1")(inputs)
    model = keras.Model(inputs=inputs, outputs=outputs, name="custom")

    # Run the model once on a dummy batch
    x = tf.ones((1, 64, 64, 3))
    y = model(x)

    return model


def convert_model(saved_model_dir, tflite_save_dir):
    converter = tf.lite.TFLiteConverter.from_saved_model(saved_model_dir)
    converter.optimizations = [tf.lite.Optimize.DEFAULT]
    converter.target_spec.supported_types = [tf.float32]
    tflite_model = converter.convert()

    with open(tflite_save_dir, "wb") as f:
        f.write(tflite_model)


if __name__ == "__main__":
    model = get_model()

    current_path = os.path.dirname(os.path.realpath(__file__))
    save_dir = os.path.join(current_path, "custom/1/")
    tf.saved_model.save(model, save_dir)

    tflite_save_dir = os.path.join(current_path, "my_model.tflite")
    convert_model(save_dir, tflite_save_dir)

    # test_tflite() is the asker's own helper; it is not shown in the question
    test_tflite(tflite_save_dir)
```

With this change, the converted model is the same as the one generated from the TF1 graph, without the Shape, StridedSlice and Pack operations.
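To check a converted model for these operations without a visualizer, the sketch below converts a hypothetical stand-in for the deconvolution (`deconv`, with an all-ones filter) once with a dynamic and once with a fixed batch, then lists the TFLite op names. It relies on `tf.lite.Interpreter._get_ops_details()`, which is a private, undocumented method that may change between TensorFlow versions; `tf.lite.experimental.Analyzer.analyze(..., gpu_compatibility=True)` is the public alternative, but it prints a report instead of returning a list. The exact op lists may also differ slightly between TensorFlow versions.

```python
import tensorflow as tf


def deconv(x):
    # Hypothetical stand-in for Conv2DTranspose(3, 3, strides=2, padding="same")
    filters = tf.ones((3, 3, 3, 3))  # [height, width, out_channels, in_channels]
    output_shape = tf.stack([tf.shape(x)[0], 128, 128, 3])
    return tf.nn.conv2d_transpose(x, filters, output_shape, strides=2, padding="SAME")


def tflite_op_names(batch_size):
    # batch_size=None mimics Input(shape=...); batch_size=1 mimics Input(..., batch_size=1)
    module = tf.Module()
    module.deconv = tf.function(deconv)
    spec = tf.TensorSpec([batch_size, 64, 64, 3], tf.float32)
    concrete = module.deconv.get_concrete_function(spec)

    converter = tf.lite.TFLiteConverter.from_concrete_functions([concrete], module)
    interpreter = tf.lite.Interpreter(model_content=converter.convert())
    # _get_ops_details() is private API: one dict per node, including the builtin op name
    return [op["op_name"] for op in interpreter._get_ops_details()]


dynamic_ops = tflite_op_names(None)
static_ops = tflite_op_names(1)

print("dynamic batch:", dynamic_ops)
print("fixed batch:  ", static_ops)
```

If SHAPE, STRIDED_SLICE or PACK still appear in the fixed-batch list, some input dimension is probably still unknown at conversion time.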