I've got simple io_context object which is used to initialize ssl_stream object (using async_resolve, async_connect and async_handshake).

On a different scope, it's used to call async_read and async_write to pass IO in that connection.

the async calls are performed from within coroutine (boost::asio::spawn(io_context_, [&](boost::asio::yield_context yield)). each one of the stages above is executed on a different such coroutine. In order to execute the coroutine, the underlying io_context of the ssl_stream should be in run() method.

However, since those 2 methods are separated, than a single run() stage wouldn't be enough. After the connection initialization will be finished, the first run() will be terminated, so a second run() instance should be called right after the second coroutine (that does the IO ops) is called)

However, I observed that the second run goes out immediately, and doesn't perform the recently inserted spawn. Any idea how to overcome this scenario ? or the only alternative is to run the whole connection lifecycle in single coroutine, or call the run on a separated thread that never quits...

Here's the semi-pseudo code of my scenario :

boost::asio::io_context io_context_;

std::optional<boost::beast::tcp_stream> stream_;

std::optional<boost::asio::ip::tcp::resolver> resolver_;

//STAGE 1

boost::asio::spawn(io_context_, [&](boost::asio::yield_context yield) {

results = resolver_->async_resolve(host_,port_ , yield);

ssl_stream_->next_layer().async_connect(results, yield);

ssl_stream_->async_handshake(ssl::stream_base::client, yield);

}

try {

io_context_.run();

} catch (...) {

}

//STAGE 2

beast::flat_buffer buffer;

http::response<http::dynamic_body> res;

boost::asio::spawn(io_context_, [&](boost::asio::yield_context yield) {

beast::get_lowest_layer(*ssl_stream_).expires_after(kOpTimeout);

auto sent = http::async_write(*ssl_stream_, beast_request, yield);

auto read = http::async_read(*ssl_stream_, buffer, res, yield);

});

try {

io_context_.run();

return res;

} catch (...) {

}

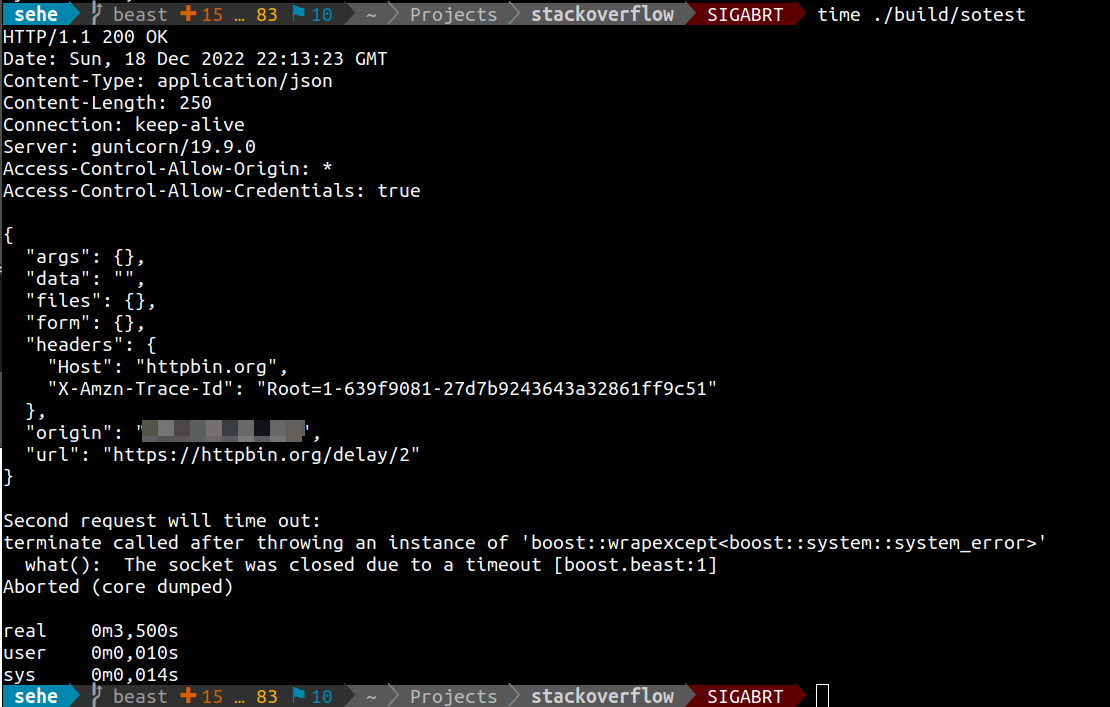

CodePudding user response:

It is possible, but a-typical. As documented, in order to be able to re-run after the service ran out of work (I.e.