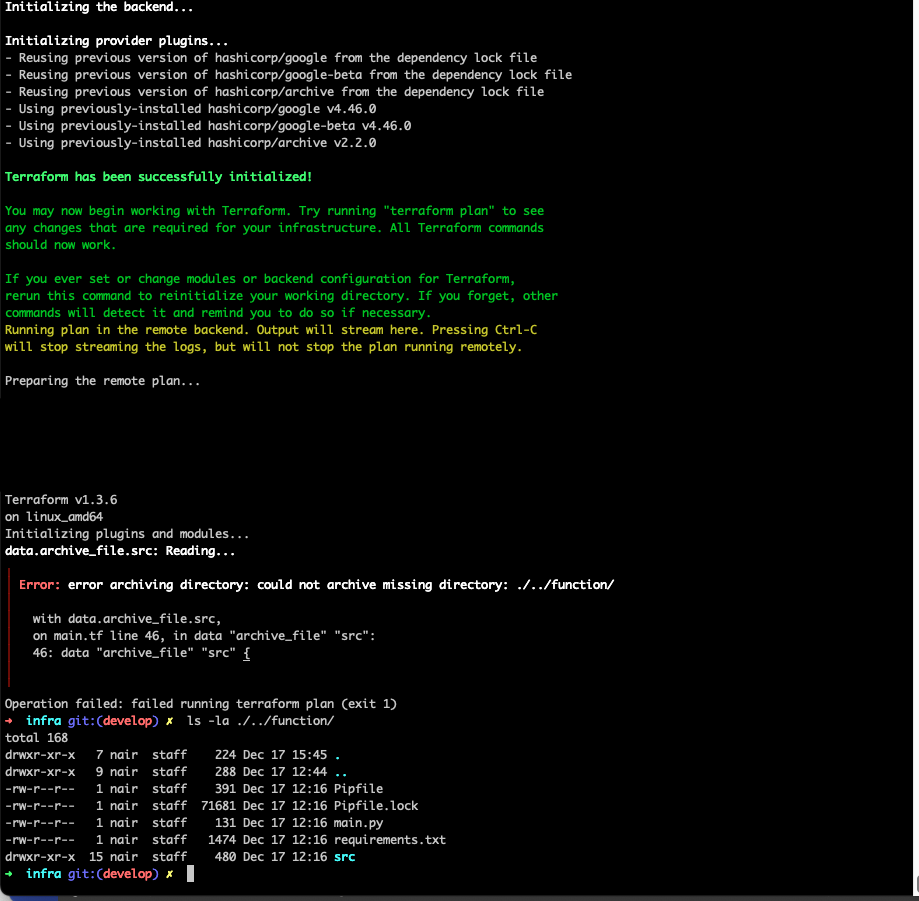

I am archiving a folder with some exclusions, but I am getting the attached error. FYI, I am using TF Cloud as my backend.

The directory structure looks like this:

```
/root
  - infra
  - function
```

All of my Terraform code is under the infra folder, so I run the terraform commands from that location.

The Terraform code for the archive_file data source is:

```hcl
data "archive_file" "src" {
  type        = "zip"
  source_dir  = "${path.root}/../function/"
  output_path = "${path.root}/generated/${var.func_name}.zip"

  excludes = setunion(
    fileset("${path.root}/../function/", ".venv/**"),
    fileset("${path.root}/../function/", "Pipfile*")
  )
}
```

I also tried the following code, but I get the same error:

```hcl
data "archive_file" "src" {
  type        = "zip"
  source_dir  = "${path.module}/../function/"
  output_path = "${path.module}/generated/${var.func_name}.zip"

  excludes = setunion(
    fileset("${path.module}/../function/", ".venv/**"),
    fileset("${path.module}/../function/", "Pipfile*")
  )
}
```

Both versions produce the following error:

```
data.archive_file.src: Reading...
╷
│ Error: error archiving directory: could not archive missing directory: ./../function/
│
│ with data.archive_file.src,
│ on main.tf line 46, in data "archive_file" "src":
│ 46: data "archive_file" "src" {
│
╵
Operation failed: failed running terraform plan (exit 1)
```

Any idea why I am getting this error?

User response:

Unfortunately, Terraform is not able to pick up a folder outside of its root directory, so I resolved this issue using a symlink.

See also: Terraform parent directory uploads issue

User response:

When you use Terraform Cloud with its remote operations feature, your local Terraform CLI becomes only a client to the Terraform Cloud API. Running terraform apply or terraform plan just tells Terraform Cloud to start running Terraform and then streams the remote output into your terminal.
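For reference, this client role is enabled on the CLI side by a `cloud` block in the root module. A minimal sketch, assuming Terraform 1.1 or later (older versions use a `backend "remote"` block instead); the organization and workspace names are hypothetical placeholders:

```hcl
terraform {
  cloud {
    # Hypothetical organization name; replace with your own.
    organization = "example-org"

    workspaces {
      # Hypothetical workspace name; replace with your own.
      name = "example-workspace"
    }
  }
}
```

With this in place, `terraform plan` and `terraform apply` run remotely in that workspace, and only their output is streamed back to your terminal.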

In order for that to work, the remote Terraform Cloud API needs to have access to your configuration. Terraform CLI creates an archive file containing the contents of your working directory and subdirectories and uploads that archive to the Terraform Cloud API, and then tells Terraform Cloud to run Terraform Core against that uploaded configuration.

For this to work, all of the necessary files must be within the scope of what Terraform CLI uploads to Terraform Cloud. By default, Terraform Cloud expects your root module to be in the root directory of what you upload, and so only the infra directory is included in the uploaded package. The path `../function` therefore points outside the uploaded files, which is why the remote run reports a missing directory.

In your workspace settings you can find the Terraform Working Directory setting, which overrides the directory in which Terraform Cloud expects to find your root module. In your case you can set the working directory to "infra", which tells Terraform Cloud to expect your root module in that subdirectory.
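If you manage the workspace itself with Terraform, the same setting is exposed as the `working_directory` argument of the `tfe` provider's `tfe_workspace` resource. A minimal sketch, assuming the `hashicorp/tfe` provider is configured with a valid API token; the workspace and organization names are hypothetical, and a workspace originally created in the UI would have to be imported before Terraform could manage it:

```hcl
terraform {
  required_providers {
    tfe = {
      source = "hashicorp/tfe"
    }
  }
}

resource "tfe_workspace" "example" {
  # Hypothetical names; replace with your own organization and workspace.
  name         = "example-workspace"
  organization = "example-org"

  # Tell Terraform Cloud that the root module lives in the infra subdirectory.
  working_directory = "infra"
}
```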

Once you've changed that setting, when you run terraform apply, Terraform CLI will see that your root module is in a subdirectory and will use the parent directory as the base of the files to upload to Terraform Cloud. The upload will therefore include both your infra directory and your function directory, so your remote operation should work as you expected.
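With that layout, the question's second `archive_file` configuration can stay essentially as written. A sketch, assuming this file lives in `infra/`, that `function/` is its sibling, and that the workspace's working directory is set to `infra`; the `func_name` variable declaration is added only to make the fragment self-contained:

```hcl
variable "func_name" {
  type = string
}

data "archive_file" "src" {
  type = "zip"

  # path.module is the infra directory; ../function is its sibling,
  # which is now part of the archive uploaded to Terraform Cloud.
  source_dir  = "${path.module}/../function"
  output_path = "${path.module}/generated/${var.func_name}.zip"

  # excludes expects paths relative to source_dir, which is what
  # fileset returns when given the same base directory.
  excludes = setunion(
    fileset("${path.module}/../function", ".venv/**"),
    fileset("${path.module}/../function", "Pipfile*")
  )
}
```

Using `path.module` rather than `path.root` makes no difference here because this is the root module, but it keeps the paths correct if the block is later moved into a child module.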

If your parent directory really is /root -- the home directory of the user root -- then the settings above will cause Terraform CLI to upload the entire contents of that home directory, which I expect isn't what you want to happen. To avoid that, place both your infra directory and your function directory into a common parent directory which contains only the files you want to upload to Terraform Cloud. For example:

```
/root
  - terraform
    - function
    - infra
```

With the above directory structure and the working directory set to infra, Terraform CLI will upload the full contents of the terraform directory to Terraform Cloud.

It sounds like you aren't keeping your Terraform configuration in a version control system yet and so this directory structure probably seems arbitrary to you at this point. When you later put your Terraform configuration under version control, the terraform directory I've shown above would be the root of your version control repository, and so infra and function will both be subdirectories inside the repository. Terraform Cloud generally expects to be working within some kind of "repository-like" filesystem structure, because it needs to have some way to decide what files should be included when uploading to start a remote operation.
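Once the terraform directory is the root of a repository, the workspace can also be connected to it directly, keeping the same working directory. A sketch using the `tfe` provider's `vcs_repo` block, assuming an OAuth client connection to your VCS provider already exists in Terraform Cloud; the organization, workspace, and repository identifiers are hypothetical:

```hcl
terraform {
  required_providers {
    tfe = {
      source = "hashicorp/tfe"
    }
  }
}

# ID of an existing VCS OAuth token in Terraform Cloud (assumed to exist).
variable "oauth_token_id" {
  type = string
}

resource "tfe_workspace" "example" {
  # Hypothetical names; replace with your own.
  name         = "example-workspace"
  organization = "example-org"

  # The repository root is the "terraform" directory, so the root module is in infra/.
  working_directory = "infra"

  vcs_repo {
    # Hypothetical "<owner>/<repository>" identifier.
    identifier     = "example-org/terraform"
    oauth_token_id = var.oauth_token_id
  }
}
```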

Running Terraform as the superuser root is unusual and should not typically be necessary, especially if you are using remote operations and therefore aren't taking any actions on your local system anyway. I would suggest running Terraform as a normal user on your system, instead of as the superuser, to minimize the scope of any mistakes you might make when configuring Terraform.