I have performed image segmentation on an image using PyTorch. I am trying to get the pixel count of the Boat class so that I can measure its area. For example, in the image I want to get the pixel count of the boat. How do I do that? And is it possible to measure the area of the boat from the pixel count?

I am confused and trying to find a way. I would appreciate it if anyone could guide me.

**The code is as follows:**

```python
from torchvision import models

fcn = models.segmentation.fcn_resnet101(pretrained=True).eval()

from PIL import Image
import matplotlib.pyplot as plt
import torch

img = Image.open('boat.jpg')
plt.imshow(img)
plt.show()

# Apply the transformations needed:
# resize so that the shorter side is 256 pixels, then center-crop to 224 x 224
import torchvision.transforms as T

trf = T.Compose([T.Resize(256),
                 T.CenterCrop(224),
                 T.ToTensor(),
                 T.Normalize(mean=[0.485, 0.456, 0.406],
                             std=[0.229, 0.224, 0.225])])

inp = trf(img).unsqueeze(0)
out = fcn(inp)['out']
print(out.shape)

# Convert the 21-channel output into a single-channel 2-D map in which
# each pixel holds the index of its predicted class
import numpy as np

om = torch.argmax(out.squeeze(), dim=0).detach().cpu().numpy()
print(om.shape)
print(np.unique(om))
```

```python
# Define the helper function
def decode_segmap(image, nc=21):
    label_colors = np.array([(0, 0, 0),  # 0=background
                             # 1=aeroplane, 2=bicycle, 3=bird, 4=boat, 5=bottle
                             (128, 0, 0), (0, 128, 0), (128, 128, 0), (0, 0, 128), (128, 0, 128),
                             # 6=bus, 7=car, 8=cat, 9=chair, 10=cow
                             (0, 128, 128), (128, 128, 128), (64, 0, 0), (192, 0, 0), (64, 128, 0),
                             # 11=dining table, 12=dog, 13=horse, 14=motorbike, 15=person
                             (192, 128, 0), (64, 0, 128), (192, 0, 128), (64, 128, 128), (192, 128, 128),
                             # 16=potted plant, 17=sheep, 18=sofa, 19=train, 20=tv/monitor
                             (0, 64, 0), (128, 64, 0), (0, 192, 0), (128, 192, 0), (0, 64, 128)])

    r = np.zeros_like(image).astype(np.uint8)
    g = np.zeros_like(image).astype(np.uint8)
    b = np.zeros_like(image).astype(np.uint8)

    for l in range(0, nc):
        idx = image == l
        r[idx] = label_colors[l, 0]
        g[idx] = label_colors[l, 1]
        b[idx] = label_colors[l, 2]

    rgb = np.stack([r, g, b], axis=2)
    return rgb
```

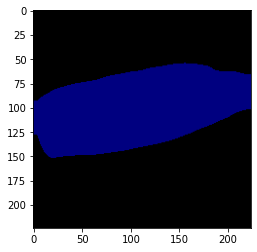

```python
rgb = decode_segmap(om)
plt.imshow(rgb)
plt.show()
```

Any guidance would be appreciated.

**Answer:**

You are looking for `skimage.measure.regionprops`. Once you have the predicted label map (`om` in your code), you can apply `regionprops` to it and get the area (pixel count) of each region.
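A minimal sketch of that idea, assuming `om` is the `(224, 224)` integer class map from the question and that "boat" is index 4, as in the question's Pascal VOC color table. A synthetic `om` is built here so the snippet runs on its own. Note that `regionprops` groups pixels by label value, so on the class map all boat pixels form one region even if there are several boats; running `skimage.measure.label` on the boat mask first gives one region per connected boat.

```python
import numpy as np
from skimage.measure import label, regionprops

BOAT = 4  # "boat" in the Pascal VOC label set used by fcn_resnet101

# Synthetic stand-in for `om` (the argmax class map from the question)
om = np.zeros((224, 224), dtype=np.int64)
om[100:150, 50:170] = BOAT   # a 50 x 120 boat
om[20:30, 180:200] = BOAT    # a second, smaller 10 x 20 boat
om[10:20, 10:20] = 15        # a "person"

# 1) On the class map itself, each non-zero class id is one region,
#    so the region labelled 4 contains every boat pixel in the image.
class_areas = {r.label: int(r.area) for r in regionprops(om)}
boat_area_px = class_areas.get(BOAT, 0)

# 2) To measure each boat separately, label the connected components
#    of the boolean boat mask first, then measure each component.
boat_regions = regionprops(label(om == BOAT))
per_boat_px = sorted(int(r.area) for r in boat_regions)

print(boat_area_px, per_boat_px)  # 6200 [200, 6000]
```

Label 0 (background) is ignored by `regionprops`, which conveniently matches the background class of the model.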

**Answer:**

You can take the following actions to find the number of pixels in the "Boat" class in an image segmentation result using PyTorch:

The first step is to get the predicted label for each pixel of the image. These labels are integer class indices that correspond to the classes your model was trained on; for the 21-class Pascal VOC label set used by `fcn_resnet101`, "boat" is index 4. Running the segmentation model on the image and taking the argmax over the class dimension gives you this label map.

Then you can restrict your selection to the pixels labelled "Boat" by using PyTorch's `torch.where()` function. When called with only a condition, it returns the indices of the elements that satisfy it, as a tuple of index tensors (one per dimension). For instance, to obtain the indices of the pixels labelled "Boat", you can use the following code:

```python
indices = torch.where(labels == 4)  # labels: (H, W) tensor of class indices; 4 = "boat"
```

Once you have the indices, the number of pixels in the "Boat" class is the length of either index tensor in the returned tuple, which you can read from its `shape` attribute. For instance:

```python
pixel_count = indices[0].shape[0]
```
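A self-contained sketch of the counting step, assuming the output has the `(1, 21, H, W)` shape that `fcn(inp)['out']` produces and that "boat" is index 4. The logits are faked so that a 50 x 120 block is predicted as boat. It shows the `torch.where` count next to the simpler alternative of summing the boolean mask; both give the same number.

```python
import torch

BOAT = 4  # "boat" index in the 21-class Pascal VOC label set

# Stand-in for fcn(inp)['out']: all-zero logits, except a block where "boat" wins.
out = torch.zeros(1, 21, 224, 224)
out[0, BOAT, 100:150, 50:170] = 1.0

# (H, W) map of class indices; on ties argmax returns the first index (0 = background)
labels = torch.argmax(out.squeeze(0), dim=0)

# Option 1: torch.where with only a condition returns a tuple of index tensors
rows, cols = torch.where(labels == BOAT)
count_where = rows.numel()

# Option 2: count the True entries of the boolean mask directly
count_sum = int((labels == BOAT).sum().item())

print(count_where, count_sum)  # 6000 6000
```

Summing the mask avoids materializing the index tensors, which is all you need when only the count matters.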

The area of the "Boat" class can be computed from the pixel count only if you know the physical size that each pixel covers (for example, in metres per pixel), and only if that scale is roughly constant across the boat, as in a top-down or aerial image. For instance, if each pixel spans 0.1 m on a side, each pixel covers 0.01 m², and the area can be determined as follows:

```python
p_size = 0.1  # metres per pixel (side length of one pixel)
area = pixel_count * p_size**2  # in square metres
```
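One more detail, offered as a hedged sketch: in the question's pipeline the pixels are counted on the resized and cropped 224 x 224 image, not on the original photo. `T.Resize(256)` scales the shorter side to 256 pixels, so a count made after resizing has to be divided by the square of that scale factor before applying a metres-per-pixel value measured on the original image. The helper `boat_area_m2` below is hypothetical, and `metres_per_pixel` is assumed to be known (for example from a reference object of known length). Also note that `T.CenterCrop(224)` discards everything outside the central crop, so any part of the boat outside it is never counted.

```python
def boat_area_m2(pixel_count, orig_size, metres_per_pixel, resize_to=256):
    """Approximate physical area of a segmented region (hypothetical helper).

    pixel_count: pixels counted in the model output (after T.Resize)
    orig_size: (width, height) of the original image, e.g. PIL's img.size
    metres_per_pixel: ground size of one ORIGINAL-image pixel (assumed known)
    resize_to: the int passed to T.Resize, i.e. the new length of the shorter side
    """
    w, h = orig_size
    scale = resize_to / min(w, h)         # linear scale factor applied by T.Resize
    orig_pixels = pixel_count / scale**2  # area scales with the square of the linear scale
    return orig_pixels * metres_per_pixel**2


# Hypothetical numbers: 6000 boat pixels in a 1024 x 768 photo, 0.1 m per original pixel
area = boat_area_m2(6000, (1024, 768), metres_per_pixel=0.1)
print(round(area, 1))  # 540.0
```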

Hope this helps!