#include <stdio.h>

int F(int L[], int p, int q) {

if (p < q) {

int r, f1, f2;

r = (p q) / 2;

f1 = 2 * F(L, p, r);

f2 = 2 * F(L, r 1, q);

return f1 f2;

} else if (p == q) {

return L[p] * L[p];

}else{

return 0;

}

}

int main(void) {

int arr[8] = {1,2,3,4,5,6,7};

printf("%d", F(arr, 0, 7));

}

Someone said the time complexity of this code is O(n).

I don't understand it at all...

Isn't it O(logN) ???

CodePudding user response:

Answer: The Big-O complexity is O(N)

Explanation:

The program takes a range of some size (i.e. q - p) and cut that range into 2 half. Then it calls the function recursively on these two half ranges.

That process continues until the range has size 1. Then there is no more recursion.

Example: Consider a start range with size 8 (e.g. p=0, q=7) then you will get

1 call with range size 8

2 calls with range size 4

4 calls with range size 2

8 calls with range size 1

So 7 calls (i.e. 1 2 4) with range size greater than 1 and 8 calls with range size equal to 1. A total of 15 calls which is nearly 2 times the starting range size.

So for a range size being a power of 2, you can generalize to be

Number of calls with range size greater than 1:

1 2 4 8 16 ... rangesize/2 = rangesize - 1

Number of calls with range size equal to 1:

rangesize

So there will be exactly 2 * rangesize - 1 function calls when range size is a power of 2.

That is Big-O complexity O(N).

Want to try it out?

#include <stdio.h>

unsigned total_calls = 0;

unsigned calls_with_range_size_greater_than_one = 0;

unsigned calls_with_range_size_equal_one = 0;

int F(int L[], int p, int q) {

total_calls;

if (p < q) {

calls_with_range_size_greater_than_one;

int r, f1, f2;

r = (p q) / 2;

f1 = 2 * F(L, p, r);

f2 = 2 * F(L, r 1, q);

return f1 f2;

} else if (p == q) {

calls_with_range_size_equal_one;

return L[p] * L[p];

}else{

return 0;

}

}

int arr[200] = {1,2,3,4,5,6,7};

int main(void) {

for (int i=3; i < 128; i = i i 1)

{

total_calls=0;

calls_with_range_size_greater_than_one=0;

calls_with_range_size_equal_one=0;

F(arr, 0, i);

printf("Start range size: = -> total_calls: %3u calls_with_range_size_greater_than_one: %3u calls_with_range_size_equal_one: %3u\n", i 1, total_calls, calls_with_range_size_greater_than_one, calls_with_range_size_equal_one);

}

return 0;

}

Output:

Start range size: 4 -> total_calls: 7 calls_with_range_size_greater_than_one: 3 calls_with_range_size_equal_one: 4

Start range size: 8 -> total_calls: 15 calls_with_range_size_greater_than_one: 7 calls_with_range_size_equal_one: 8

Start range size: 16 -> total_calls: 31 calls_with_range_size_greater_than_one: 15 calls_with_range_size_equal_one: 16

Start range size: 32 -> total_calls: 63 calls_with_range_size_greater_than_one: 31 calls_with_range_size_equal_one: 32

Start range size: 64 -> total_calls: 127 calls_with_range_size_greater_than_one: 63 calls_with_range_size_equal_one: 64

Start range size: 128 -> total_calls: 255 calls_with_range_size_greater_than_one: 127 calls_with_range_size_equal_one: 128

CodePudding user response:

To put your O(n) or O(log n) ideas to the test, I generated a quick Python program that doesn't actually calculate the information, but does calculate the number of times F would be called.

def F(p, q, calls=1):

if p < q:

r = (p q) // 2

c = F(p, r, calls 1)

c = F(p 1, q, c 1)

return c

else:

return calls

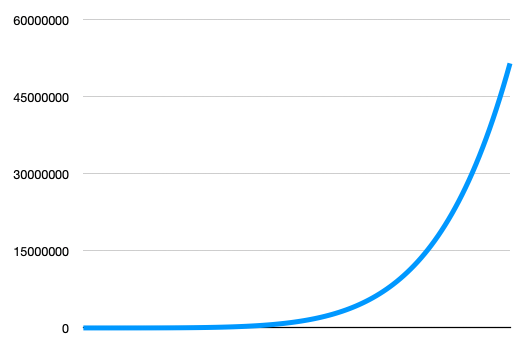

If we leave p as 1 and increase q, and check for the first 250 values of q, we see what is clearly neither logarithmic nor linear complexity.

This happens because you have two recursive calls on each call where p is less than q. The first behaves in a logarithmic fashion while the second is linear.

If we actually follow what is happening, we can see that all of these recursive calls repeatedly re-evaluate the same arguments. A memoization system would dramatically improve the efficiency of this process.