These lines of code extract all tables from pages 667-795 of a PDF and save them into an array of tables.

tablesSys = cam.read_pdf("840Dsl_sysvar_lists_man_0122_de-DE_wichtig.pdf",

pages = "667-795",

process_threads = 100000,

line_scale = 100,

strip_text ='.\n'

)

tablesSys = np.array(tablesSys)

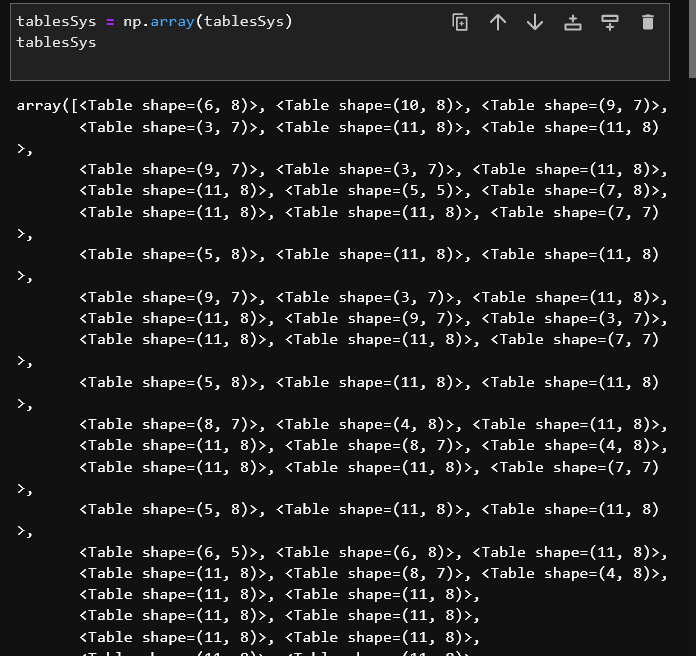

The array looks like this.

Later I have to use this array multiple times.

I work in JupyterLab, and whenever my kernel goes offline, I come back after a few hours, or I restart the kernel, I have to rerun this code to get my tablesSys back, which takes more than 11 minutes.

Since the PDF doesn't change at all, I think there should be a way to run the code only once and save the array somehow, so that in the future I can use the array without rerunning the extraction.

Hope to find a solution :)))

CodePudding user response:

Try using the pickle module to save the array to a file on disk and load it back later: https://docs.python.org/3/library/pickle.html

Here is a high-level example. I did not run this code, but it should give you the idea.

import pickle

import numpy as np

# calculate the huge data slice

heavy_numpy_array = np.zeros((1000,2)) # some data

# decide where to store the data in the file-system

my_filename = 'path/to/my_file.xyz'

my_file = open(my_filename, 'wb')

# save to file

pickle.dump(heavy_numpy_array, my_file)

my_file.close()

# load the data from file

my_file_v2 = open(my_filename, 'rb')

my_long_numpy_array = pickle.load(my_file_v2)

my_file_v2.close()
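To avoid rerunning the slow step in every new kernel session, the save and load steps can be wrapped in a small "load if cached, otherwise compute" helper. This is only a sketch: `load_or_compute`, `slow_extraction`, and the file name `tables_cache.pkl` are made-up names for illustration, and `slow_extraction` stands in for the real `cam.read_pdf(...)` call from the question. Only unpickle files you created yourself, since loading a pickle can execute arbitrary code.

```python
import pickle
from pathlib import Path

import numpy as np


def load_or_compute(cache_path, compute):
    """Return the cached object if cache_path exists; otherwise compute and cache it."""
    cache_path = Path(cache_path)
    if cache_path.exists():
        # Fast path: reuse the result from an earlier session.
        with cache_path.open("rb") as f:
            return pickle.load(f)
    # Slow path: run the expensive step once and store the result.
    result = compute()
    with cache_path.open("wb") as f:
        pickle.dump(result, f)
    return result


def slow_extraction():
    # Hypothetical stand-in for the 11-minute cam.read_pdf(...) call.
    return np.zeros((1000, 2))


tables = load_or_compute("tables_cache.pkl", slow_extraction)
```

If the PDF or the extraction parameters ever change, delete the cache file so the result is recomputed.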

CodePudding user response:

Was playing around...

import numpy as np

# dummy stand-in for the real cam module, so the example runs without the PDF

class Cam:

    def read_pdf(self, *args, **kwargs):

        return np.random.rand(3, 2)

cam = Cam()

tablesSys = cam.read_pdf(

"840Dsl_sysvar_lists_man_0122_de-DE_wichtig.pdf",

pages="667-795",

process_threads=100000,

line_scale=100,

strip_text=".\n",

)

# save the array once

with open("data.npy", "wb") as f:

    np.save(f, tablesSys)

# in later sessions, load it back instead of re-reading the PDF

with open("data.npy", "rb") as f:

    tablesSys = np.load(f)

print(tablesSys)
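One caveat: the dummy above returns a plain float array. If the real `np.array(tablesSys)` turns out to be an object array (an assumption, since that depends on what `cam.read_pdf` returns), `np.save` will still write it, but `np.load` refuses to read it back unless `allow_pickle=True` is passed. A minimal sketch with hypothetical dictionary entries in place of real tables:

```python
import os
import tempfile

import numpy as np

# Hypothetical stand-in: an object array holding non-numeric items.
tables = np.empty(2, dtype=object)
tables[0] = {"page": 667}
tables[1] = {"page": 668}

path = os.path.join(tempfile.mkdtemp(), "tables.npy")
np.save(path, tables)  # object arrays are pickled inside the .npy file

try:
    np.load(path)  # allow_pickle defaults to False, so this raises
except ValueError as err:
    print("plain np.load failed:", err)

# Opt in explicitly; only do this for files you created yourself.
restored = np.load(path, allow_pickle=True)
print(restored[0]["page"])
```

You can check which case applies by printing `tablesSys.dtype` before saving.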