I have a list of file paths saved in a text file.

For example, filepaths.txt contains:

C:\Docs\test1.txt

C:\Docs\test2.txt

C:\Docs\test3.txt

How can I set up an Azure Data Factory pipeline that loops through each file path and copies the file to Azure Blob Storage? In Blob Storage I would then have:

- \Docs\test1.txt

- \Docs\test2.txt

- \Docs\test3.txt

Thanks,

Answer:

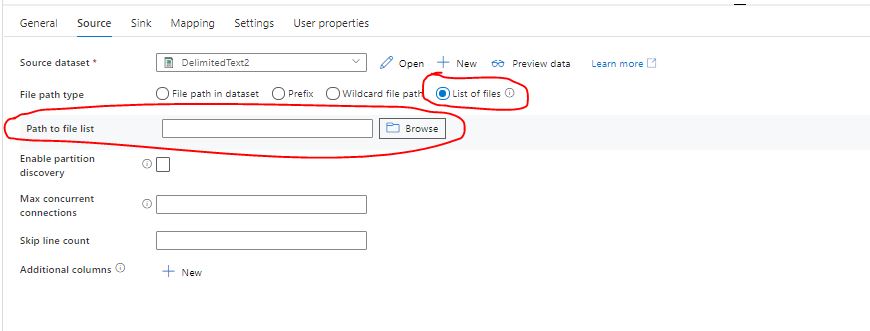

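You can use the "List of files" option (under "File path type" in the Copy activity's source settings). To do this in a single step, however, the text file containing the list must be stored in the same source (the same data store) as the files themselves. Each line of that file should be a path relative to the folder configured in the source dataset, not an absolute path such as C:\Docs\test1.txt.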
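Because the "List of files" entries are resolved relative to the source dataset's folder, the absolute paths in filepaths.txt may need rewriting before the Copy activity can use them. Below is a minimal Python sketch that does this, assuming the dataset's folder is the drive root C:\ (so each relative path keeps the Docs\ prefix you want in Blob Storage) and that your connector accepts forward slashes. Both points are assumptions to verify against your setup. `to_relative_list` is a hypothetical helper, not part of Azure Data Factory.

```python
from pathlib import PureWindowsPath


def to_relative_list(lines, root="C:\\"):
    """Convert absolute Windows paths into paths relative to `root`.

    Assumption: `root` is the folder configured in the ADF source dataset,
    so each entry of the list file must be relative to it.
    """
    root_path = PureWindowsPath(root)
    relative = []
    for line in lines:
        line = line.strip()
        if not line:
            continue  # filepaths.txt separates entries with blank lines
        rel = PureWindowsPath(line).relative_to(root_path)
        # Assumption: forward slashes are accepted by the source connector.
        relative.append(rel.as_posix())
    return relative


if __name__ == "__main__":
    sample = [
        "C:\\Docs\\test1.txt",
        "",
        "C:\\Docs\\test2.txt",
        "",
        "C:\\Docs\\test3.txt",
    ]
    for entry in to_relative_list(sample):
        print(entry)
```

Writing the printed lines to a file next to the source files gives a list file you could point the "List of files" option at. Pass a different `root` (for example C:\Docs) if your dataset points at a subfolder instead.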