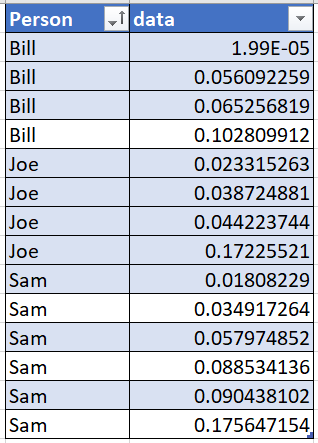

Python newbie here. Imagine a csv file that looks something like this:

(...except that in real life, there are 20 distinct names in the Person column, and each Person has 300-500 rows. Also, there are multiple data columns, not just one.)

What I want to do is randomly flag 10% of each Person's rows and mark this in a new column. I came up with a ridiculously convoluted way to do this--it involved creating a helper column of random numbers and all sorts of unnecessarily complicated jiggery-pokery. It worked, but was crazy. More recently, I came up with this:

import pandas as pd

df = pd.read_csv('source.csv')
df['selected'] = ''

names = list(df['Person'].unique())  # gets list of unique names

for name in names:
    df_temp = df[df['Person'] == name]
    samp = int(len(df_temp) / 10)  # I want to sample 10% for each name
    df_temp = df_temp.sample(samp)
    df_temp['selected'] = 'bingo!'  # a new column to mark the rows I've randomly selected
    df = df.merge(df_temp, how='left', on=['Person', 'data'])
    df['temp'] = [f"{a} {b}" for a, b in zip(df['selected_x'], df['selected_y'])]
    # Note: initially instead of the line above, I tried the line below, but it didn't work too well:
    # df['temp'] = df['selected_x'] + df['selected_y']
    df = df[['Person', 'data', 'temp']]
    df = df.rename(columns={'temp': 'selected'})

df['selected'] = df['selected'].str.replace('nan', '').str.strip()  # cleans up the column

As you can see, essentially I'm pulling out a temporary DataFrame for each Person, using DF.sample(number) to do the randomising, then using DF.merge to get the 'marked' rows back into the original DataFrame. And it involved iterating through a list to create each temporary DataFrame...and my understanding is that iterating is kind of lame.

There's got to be a more Pythonic, vectorising way to do this, right? Without iterating. Maybe something involving groupby? Any thoughts or advice much appreciated.

CodePudding user response:

If I understood you correctly, you can achieve this using:

df = pd.DataFrame(data={'persons': ['A']*10 + ['B']*10, 'col_1': [2]*20})

percentage_to_flag = 0.5

# For each group, build a boolean Series that is True for the sampled rows.
a = df.groupby(['persons'])['col_1'].apply(
    lambda x: pd.Series(
        x.index.isin(
            x.sample(frac=percentage_to_flag, random_state=5, replace=False).index
        )
    )
).reset_index(drop=True)

df['flagged'] = a

Input:

   persons  col_1
0        A      2
1        A      2
2        A      2
3        A      2
4        A      2
5        A      2
6        A      2
7        A      2
8        A      2
9        A      2
10       B      2
11       B      2
12       B      2
13       B      2
14       B      2
15       B      2
16       B      2
17       B      2
18       B      2
19       B      2

Output with 50% flagged rows in each group:

   persons  col_1  flagged
0        A      2    False
1        A      2    False
2        A      2     True
3        A      2    False
4        A      2     True
5        A      2     True
6        A      2    False
7        A      2     True
8        A      2    False
9        A      2     True
10       B      2    False
11       B      2    False
12       B      2     True
13       B      2    False
14       B      2     True
15       B      2     True
16       B      2    False
17       B      2     True
18       B      2    False
19       B      2     True
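
If the lambda inside apply feels heavy, the same per-group flagging can be sketched with no apply at all, in the spirit of the helper column of random numbers mentioned in the question. This is only a sketch under assumptions: the seed, the `noise`, `rank_in_group`, `group_size`, and `percent_to_flag` names are made up for illustration, and the floor division truncates the per-group count the way `int(len(df_temp)/10)` does in the question.

```python
import numpy as np
import pandas as pd

# Toy data; the column names follow the example above.
df = pd.DataFrame({'persons': ['A'] * 10 + ['B'] * 10, 'col_1': [2] * 20})

rng = np.random.default_rng(5)  # arbitrary seed, only for reproducibility

# One random number per row, sharing the DataFrame's index.
noise = pd.Series(rng.random(len(df)), index=df.index)

# Rank the random numbers within each group: 1.0, 2.0, ..., group size.
rank_in_group = noise.groupby(df['persons']).rank(method='first')

# Size of the group each row belongs to.
group_size = df.groupby('persons')['col_1'].transform('size')

# Flag the rows with the lowest ranks in each group (integer percentage,
# floor division, so the per-group count is truncated rather than rounded).
percent_to_flag = 50
df['flagged'] = rank_in_group <= (group_size * percent_to_flag) // 100
```

Because everything is aligned on the DataFrame's index, this does not depend on the rows being sorted by group.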

CodePudding user response:

You could use groupby.sample, either to pick out a sample of the whole dataframe for further processing, or to identify rows of the dataframe to mark if that's more convenient.

import pandas as pd

percentage_to_flag = 0.5

# Toy data: 8 rows, persons A and B.
df = pd.DataFrame(data={'persons': ['A']*4 + ['B']*4, 'data': range(8)})
#   persons  data
# 0       A     0
# 1       A     1
# 2       A     2
# 3       A     3
# 4       B     4
# 5       B     5
# 6       B     6
# 7       B     7

# Pick out random sample of dataframe.
random_state = 41  # Change to get different random values.
df_sample = df.groupby("persons").sample(frac=percentage_to_flag,
                                         random_state=random_state)
#   persons  data
# 1       A     1
# 2       A     2
# 7       B     7
# 6       B     6

# Mark the random sample in the original dataframe.
df["marked"] = False
df.loc[df_sample.index, "marked"] = True
#   persons  data  marked
# 0       A     0   False
# 1       A     1    True
# 2       A     2    True
# 3       A     3   False
# 4       B     4   False
# 5       B     5   False
# 6       B     6    True
# 7       B     7    True
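
To check that the marking did what was intended, one option is to count the marked rows per group. The snippet below is a small self-contained sketch that rebuilds the toy data; the `summary` name and its `share` column are only illustrative.

```python
import pandas as pd

# Rebuild the toy data and mark a 50% sample per person, as above.
df = pd.DataFrame({'persons': ['A'] * 4 + ['B'] * 4, 'data': range(8)})
df_sample = df.groupby('persons').sample(frac=0.5, random_state=41)
df['marked'] = False
df.loc[df_sample.index, 'marked'] = True

# Per-group count of marked rows, group size, and the resulting share.
summary = df.groupby('persons')['marked'].agg(['sum', 'size'])
summary['share'] = summary['sum'] / summary['size']
print(summary)
```

Each group of 4 rows should show 2 marked rows, i.e. a share of 0.5.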

If you really do not want the sub-sampled dataframe df_sample you can go straight to marking a sample of the original dataframe:

# Mark random sample in original dataframe with minimal intermediate data.
df["marked2"] = False
df.loc[df.groupby("persons")["data"].sample(frac=percentage_to_flag,
                                            random_state=random_state).index,
       "marked2"] = True
#   persons  data  marked  marked2
# 0       A     0   False    False
# 1       A     1    True     True
# 2       A     2    True     True
# 3       A     3   False    False
# 4       B     4   False    False
# 5       B     5   False    False
# 6       B     6    True     True
# 7       B     7    True     True
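
Applied to the setup in the question (a Person column, 10% per person, a 'selected' column holding 'bingo!'), the same idea might look like the sketch below. The data here is synthetic and stands in for source.csv, and the `person_00`-style names, the seed, and `sampled_index` are made up for illustration. Note that groupby.sample needs pandas 1.1 or later and that, as far as I know, `frac` rounds the per-group count rather than truncating it like `int(len(df_temp)/10)`, so a group may differ by one row from the loop version.

```python
import numpy as np
import pandas as pd

# Synthetic stand-in for source.csv (hypothetical data):
# 20 people with 300 to 500 rows each.
rng = np.random.default_rng(0)
sizes = rng.integers(300, 501, size=20)
people = [f'person_{i:02d}' for i in range(20)]
df = pd.DataFrame({
    'Person': np.repeat(people, sizes),
    'data': rng.normal(size=sizes.sum()),
})

# Sample 10% of each person's rows and keep only the index labels.
sampled_index = df.groupby('Person').sample(frac=0.1, random_state=0).index

# Mark sampled rows with 'bingo!' and leave the rest empty, as in the question.
df['selected'] = np.where(df.index.isin(sampled_index), 'bingo!', '')
```

This relies on the DataFrame having unique index labels, which is the case for the default index that pd.read_csv produces.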