I have a pretty simple PyMongo configuration that connects to two hosts:

```python
import os

from pymongo import MongoClient

host = os.getenv('MONGODB_HOST', '127.0.0.1')
port = int(os.getenv('MONGODB_PORT', 27017))

# Build a "host:port" seed entry for every comma-separated host
hosts = []
for h in host.split(','):
    hosts.append('{}:{}'.format(h, port))

cls.client = MongoClient(host=hosts, replicaset='replicatOne')
```

`MONGODB_HOST` is a comma-separated list of two hosts, such as `"primary_mongo,secondary_mongo"`.

They are configured as a replica set.

The issue is that, with this configuration, my whole application stops working if `secondary_mongo` goes down.

I assumed that passing a list of hosts to `MongoClient` would tell it "use the first one that works", but that appears not to be the case.

What is wrong, and how can I ensure that `MongoClient` connects to `primary_mongo` first and, if that fails, falls back to `secondary_mongo`?

Thank you for your help.

Answer:

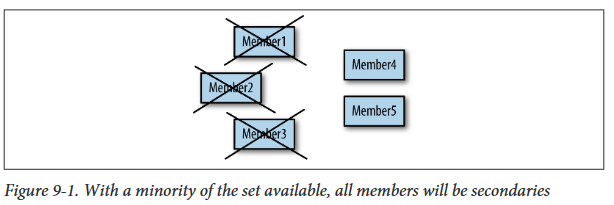

To have a fully working MongoDB ReplicaSet, you must have a PRIMARY member. A PRIMARY can only be elected (or stay PRIMARY) if a majority of all voting members is available. One out of two is not a majority, so your ReplicaSet loses its PRIMARY as soon as either node fails.
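The majority rule is simple arithmetic, which makes it easy to see why two members cannot survive a failure while three can. This is a minimal sketch assuming every member has one vote (the default); `majority` and `can_elect_primary` are hypothetical helper names, not MongoDB APIs.

```python
def majority(voting_members: int) -> int:
    """Smallest number of votes that is strictly more than half."""
    return voting_members // 2 + 1


def can_elect_primary(available: int, voting_members: int) -> bool:
    """True if the reachable members can still elect (or keep) a PRIMARY."""
    return available >= majority(voting_members)


for total in (2, 3, 5):
    print(f"{total} members: majority = {majority(total)}, "
          f"survives one failure = {can_elect_primary(total - 1, total)}")
```

With two members the majority is 2, so losing either one leaves the set without a PRIMARY; with three members the majority is also 2, so one failure is tolerated.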

When you connect to MongoDB, you can connect either to the ReplicaSet, e.g.

```shell
mongo "mongodb://localhost:27037,localhost:27137,localhost:27237/?replicaSet=repSet"
```

or directly, e.g.

```shell
mongo "mongodb://localhost:27037,localhost:27137"
```
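Applied to the question's environment variables, the ReplicaSet form of the connection string can be built from `MONGODB_HOST` and `MONGODB_PORT`. This is a sketch: `build_uri` is a hypothetical helper, and the replica set name `replicatOne` is taken from the question. The resulting string can be passed to `MongoClient(uri)` instead of a host list.

```python
import os
from typing import Optional


def build_uri(hosts_csv: str, port: int, replica_set: Optional[str] = None) -> str:
    """Build a MongoDB connection string from a comma-separated host list."""
    seeds = ",".join(f"{h.strip()}:{port}" for h in hosts_csv.split(",") if h.strip())
    uri = f"mongodb://{seeds}/"
    if replica_set:
        # Tell the client to treat the seeds as members of this replica set
        uri += f"?replicaSet={replica_set}"
    return uri


host = os.getenv("MONGODB_HOST", "127.0.0.1")
port = int(os.getenv("MONGODB_PORT", "27017"))
print(build_uri(host, port, replica_set="replicatOne"))
```

Note that the seed order does not make the client prefer one host: in a ReplicaSet connection the client always follows whichever member is currently PRIMARY.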

When you connect to a ReplicaSet and the PRIMARY goes down (or steps down to SECONDARY for whatever reason) and another member becomes PRIMARY, the client usually reconnects to the new PRIMARY automatically. However, writes (and reads with the default read preference) require a PRIMARY member, i.e. a majority of members must be available.

When you connect directly, you can also connect to a SECONDARY member and use MongoDB in read-only mode. However, you get no failover or automatic reconnection if that member goes down.

You could add an ARBITER member on one of your nodes. Then, if the other node goes down, the application remains fully available. Bear in mind that with this setup you can only lose the "second" host (the one without the ARBITER), not either of them. Ideally, you run the ARBITER at an independent third location.
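Why only the "second" host may fail becomes clear when you count the votes left after each host failure. The sketch below assumes one vote per member (the default, arbiters included); the member names and the `third_site` host are hypothetical.

```python
from typing import Dict, List


def survives_host_failure(placement: Dict[str, List[str]]) -> Dict[str, bool]:
    """For each host, report whether a majority remains if that host fails."""
    total = sum(len(members) for members in placement.values())
    needed = total // 2 + 1
    return {failed: total - len(members) >= needed
            for failed, members in placement.items()}


# ARBITER on the same machine as the first data node
two_hosts = {
    "primary_mongo": ["data-1", "arbiter"],
    "secondary_mongo": ["data-2"],
}
# ARBITER at an independent third location
three_sites = {
    "primary_mongo": ["data-1"],
    "secondary_mongo": ["data-2"],
    "third_site": ["arbiter"],
}
print(survives_host_failure(two_hosts))    # {'primary_mongo': False, 'secondary_mongo': True}
print(survives_host_failure(three_sites))  # {'primary_mongo': True, 'secondary_mongo': True, 'third_site': True}
```

Losing the host that carries both the data node and the ARBITER removes two of three votes, so no PRIMARY can be elected; spreading the three members over three locations tolerates any single failure.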

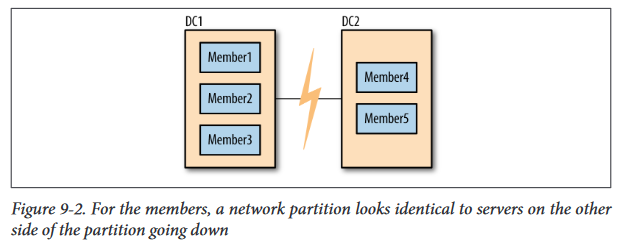

Many users find this frustrating: why can’t the two remaining members elect a primary? The problem is that it’s possible that the other three members didn’t go down, and that it was the network that went down, as shown in Figure 9-2. In this case, the three members on the left will elect a primary, since they can reach a majority of the set (three members out of five).

In the case of a network partition, we do not want both sides of the partition to elect a primary: otherwise the set would have two primaries. Then both primaries would be writing to the data and the data sets would diverge. Requiring a majority to elect or stay primary is a neat way of avoiding ending up with more than one primary.
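The majority requirement guarantees that at most one side of any network split can hold a PRIMARY. This sketch assumes a five-member set with one vote per member, as in the text; `primaries_after_partition` is a hypothetical helper that checks every possible split.

```python
from itertools import combinations
from typing import List, Set


def primaries_after_partition(members: List[str], side_a: Set[str]) -> List[str]:
    """Return which sides ('A', 'B') hold a strict majority and could elect a PRIMARY."""
    needed = len(members) // 2 + 1
    side_b = set(members) - side_a
    return [name for name, side in (("A", side_a), ("B", side_b)) if len(side) >= needed]


members = ["m1", "m2", "m3", "m4", "m5"]

# Three members on one side, two on the other: only side A can elect a PRIMARY
print(primaries_after_partition(members, {"m1", "m2", "m3"}))  # ['A']

# No split of the set gives both sides a majority
for k in range(len(members) + 1):
    for side_a in combinations(members, k):
        assert len(primaries_after_partition(members, set(side_a))) <= 1
```

With an even number of members, an even split (for example 2/2) leaves neither side with a majority, which is the two-node situation from the question.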

It is important to configure your set in such a way that you’ll usually be able to have one primary. For example, in the five-member set described above, if members 1, 2, and 3 are in one data center and members 4 and 5 are in another, there should almost always be a majority available in the first data center (it’s more likely to have a network break between data centers than within them).