I'm running several Node.js microservices on Google Kubernetes Engine (GKE). Sometimes these services crash, and according to Cloud Logging, more detailed information can be found in a log file. For example, one log entry reads:

    {
      "textPayload": "npm ERR! /root/.npm/_logs/2021-10-27T11_26_28_534Z-debug.log\n",
      "insertId": "zoqxk8wvkuofhslm",
      "resource": {
        "type": "k8s_container",
        "labels": {
          "pod_name": "client-depl-7f679c6b49-5d9tz",
          "container_name": "client",
          "namespace_name": "production",
          "cluster_name": "cluster-1",
          "location": "europe-west3-a",
          "project_id": "XXX"
        }
      },
      "timestamp": "2021-10-27T11:26:28.701252670Z",
      "severity": "ERROR",
      "labels": {
        "k8s-pod/app": "client",
        "k8s-pod/skaffold_dev/run-id": "b5518659-05d6-4c08-9b55-9d58fdd5807f",
        "k8s-pod/pod-template-hash": "7f679c6b49",
        "compute.googleapis.com/resource_name": "gke-cluster-1-pool-1-8bfc60b2-ag86",
        "k8s-pod/app_kubernetes_io/managed-by": "skaffold"
      },
      "logName": "projects/xxx-productive/logs/stderr",
      "receiveTimestamp": "xxx"
    }

Where do I find this log file on Google Cloud Platform?

---------------- Edit 2021.10.28 ---------------------------

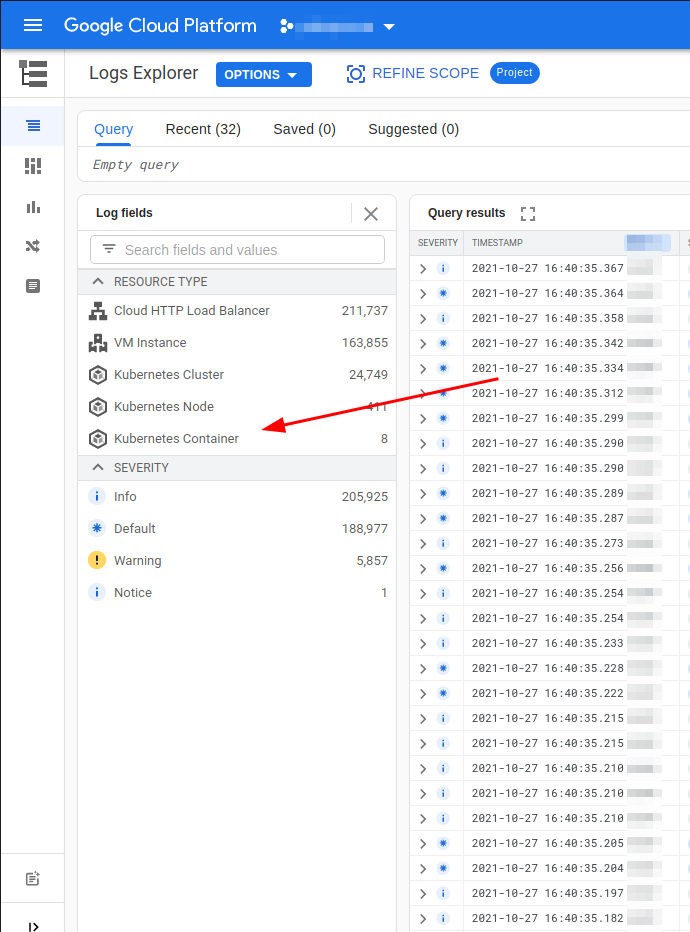

I should clarify that I am already using the Logs Explorer. This is what I see there:

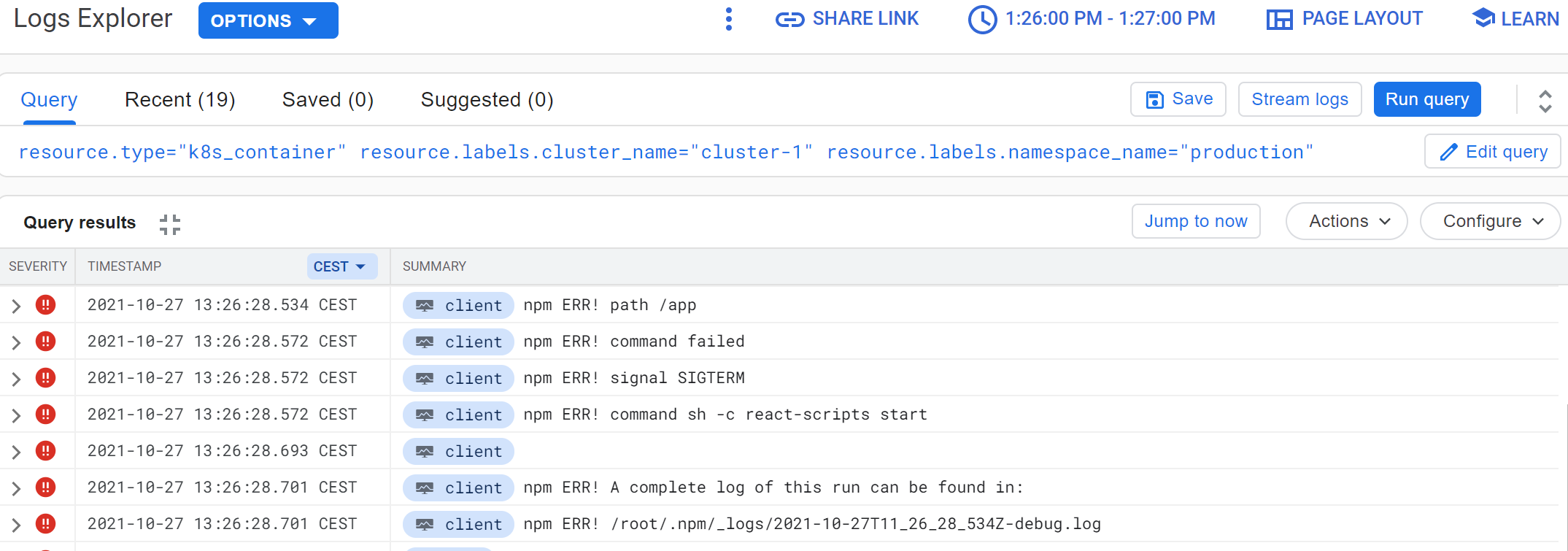

The logs show 7 consecutive error entries about npm failing. The last two entries indicate that there is more information in the log file "/root/.npm/_logs/2021-10-27T11_26_28_534Z-debug.log". Does this log file contain more information about the failure, or is everything I can get already in these 7 error log entries?
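If the entries are exported as JSON (for example with `gcloud logging read --format=json`), the debug-log path can be pulled out of each `textPayload` mechanically. A minimal sketch in Python, assuming entries shaped like the one above; the helper name `find_npm_debug_logs` and its regex are hypothetical and not part of any Google Cloud API:

```python
import json
import re

# Hypothetical pattern: matches "npm ERR!" followed by an absolute path ending
# in ".log", as in the textPayload of the entry shown in the question.
DEBUG_LOG_RE = re.compile(r"npm ERR!\s+(/\S+\.log)")


def find_npm_debug_logs(entries):
    """Return the npm debug-log paths mentioned in exported log entries.

    `entries` is a list of dicts shaped like a Cloud Logging entry;
    only the "textPayload" field is inspected, and entries without it
    are skipped.
    """
    paths = []
    for entry in entries:
        match = DEBUG_LOG_RE.search(entry.get("textPayload", ""))
        if match:
            paths.append(match.group(1))
    return paths


if __name__ == "__main__":
    # The JSON escape "\\n" decodes to a real newline, as in the original entry.
    raw = '{"textPayload": "npm ERR! /root/.npm/_logs/2021-10-27T11_26_28_534Z-debug.log\\n"}'
    print(find_npm_debug_logs([json.loads(raw)]))
```

Note that this only recovers the path; the file itself lives inside the container's filesystem, not in Cloud Logging.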

Thanks

CodePudding user response:

Assuming you have already enabled Cloud Logging and Cloud Monitoring for the cluster, you can view the logs as follows:

a. Go to the Logs Explorer in the Cloud Console.

b. Click Resource. Under ALL_RESOURCE_TYPES, select Kubernetes Container.

c. Under CLUSTER_NAME, select the name of your user cluster.

d. Under NAMESPACE_NAME, select default (or the namespace your workload runs in, e.g. production).

e. Click Add and then click Run Query.

f. Under Query results, you can see log entries from the monitoring-example Deployment. For example:

    {
      "textPayload": "2020/11/14 01:24:24 Starting to listen on :9090\n",
      "insertId": "1oa4vhg3qfxidt",
      "resource": {
        "type": "k8s_container",
        "labels": {
          "pod_name": "monitoring-example-7685d96496-xqfsf",
          "cluster_name": ...,
          "namespace_name": "default",
          "project_id": ...,
          "location": "us-west1",
          "container_name": "prometheus-example-exporter"
        }
      },
      "timestamp": "2020-11-14T01:24:24.358600252Z",
      "labels": {
        "k8s-pod/pod-template-hash": "7685d96496",
        "k8s-pod/app": "monitoring-example"
      },
      "logName": "projects/.../logs/stdout",
      "receiveTimestamp": "2020-11-14T01:24:39.562864735Z"
    }
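The resource selections from the steps above can also be typed directly into the Logs Explorer query editor. A minimal sketch in Python that assembles such a filter string, assuming the `resource.labels` keys shown in the entries above; the helper `build_k8s_filter` is hypothetical, and the optional container and severity clauses are illustrative extras rather than part of the steps:

```python
def build_k8s_filter(cluster_name, namespace_name, container_name=None, min_severity=None):
    """Build a Logging query-language filter for GKE container logs.

    Mirrors the Logs Explorer selections: resource type "Kubernetes
    Container", then cluster name and namespace name. The optional
    container and minimum-severity clauses narrow the result further.
    """
    clauses = [
        'resource.type="k8s_container"',
        f'resource.labels.cluster_name="{cluster_name}"',
        f'resource.labels.namespace_name="{namespace_name}"',
    ]
    if container_name:
        clauses.append(f'resource.labels.container_name="{container_name}"')
    if min_severity:
        # e.g. "ERROR" keeps only entries at ERROR severity or above
        clauses.append(f"severity>={min_severity}")
    # Join with an explicit AND so the filter is unambiguous on one line.
    return " AND ".join(clauses)
```

For the service in the question, `build_k8s_filter("cluster-1", "production", "client", "ERROR")` yields a filter that can be pasted into the query editor and run.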

CodePudding user response:

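How about logging into the pod while it is still alive:

    kubectl exec -it your-pod -n production -- sh

Then wait for it to crash and watch the debug log file in real time before the container is restarted :) Timing matters here: a restarted container starts again from its image, so the file written by the crashed container is no longer there afterwards.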