I am trying to run TensorFlow with GPU support in a Docker container on a virtual machine. I have tried many of the solutions suggested online, including:

- trying Docker images for several TensorFlow versions: 2.6, 2.4, 1.15 and 1.14

- building TensorFlow from source inside the container, following this guide, several times with different Bazel flags

The Python version is 3.8.10:

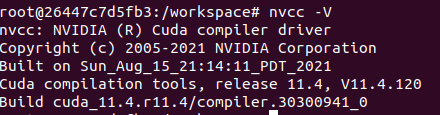

and the TensorFlow version is:

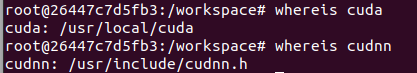

    >>> import tensorflow as tf
    >>> tf.__version__
    '2.6.0'

The error appears when calling `tf.config.list_physical_devices()`:

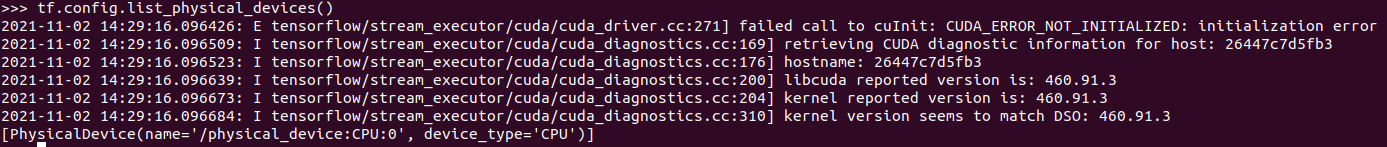
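To restrict the check to GPUs only, `tf.config.list_physical_devices` also accepts a device type. A minimal sketch, assuming TensorFlow 2.1 or later (where `tf.config.list_physical_devices` is available); the helper name `visible_gpus` is hypothetical, and the import is guarded so the snippet still runs where TensorFlow is not installed:

```python
def visible_gpus():
    """Return the GPU devices TensorFlow can see, or [] if TensorFlow is missing."""
    try:
        # Assumes TF >= 2.1, where tf.config.list_physical_devices exists.
        import tensorflow as tf
    except ImportError:
        return []
    # If the CUDA driver fails to initialize, TensorFlow registers no GPU
    # devices and this list comes back empty.
    return tf.config.list_physical_devices("GPU")


if __name__ == "__main__":
    gpus = visible_gpus()
    print(f"{len(gpus)} GPU(s) visible:", gpus)
```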

So the GPU is somehow not visible to TensorFlow. Every TensorFlow build returns the same error:

    E tensorflow/stream_executor/cuda/cuda_driver.cc:271] failed call to cuInit: CUDA_ERROR_NOT_INITIALIZED: initialization error

For 1.14, for example, there is also an additional message about the CPU type:

    Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX2 AVX512F FMA

The GPU is an A100 and the CPU is an Intel(R) Xeon(R) Gold 6226R.
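The AVX2/AVX512F/FMA message is generally only a performance notice about how the binary was compiled, not a GPU error. To confirm which of those instructions the Xeon actually exposes inside the VM, a minimal sketch that reads `/proc/cpuinfo` (Linux only; the helper names `cpu_flags` and `check_instructions` are hypothetical):

```python
def cpu_flags(cpuinfo_text):
    """Return the set of CPU feature flags from the first 'flags' line of /proc/cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            return set(value.split())
    return set()


def check_instructions(flags, wanted=("avx2", "avx512f", "fma")):
    """Map each instruction named in the TensorFlow warning to whether the CPU reports it."""
    return {name: name in flags for name in wanted}


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as f:
            print(check_instructions(cpu_flags(f.read())))
    except FileNotFoundError:
        print("/proc/cpuinfo not available (non-Linux host)")
```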

What is going on here? How do I fix this?
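One way to tell whether the failure comes from TensorFlow or from the driver layer underneath it is to call `cuInit` directly through the CUDA driver API. A minimal sketch, assuming a Linux host where the driver library is named `libcuda.so.1`; the helper name `probe_cuinit` and the small `CUDA_ERRORS` table are illustrative, with the numeric values taken from `cuda.h`. A return code of 3 (`CUDA_ERROR_NOT_INITIALIZED`), the same error TensorFlow reports, would suggest the problem sits below TensorFlow rather than in any particular build:

```python
import ctypes

# A few CUresult values from the CUDA driver API (cuda.h).
CUDA_ERRORS = {
    0: "CUDA_SUCCESS",
    3: "CUDA_ERROR_NOT_INITIALIZED",
    100: "CUDA_ERROR_NO_DEVICE",
}


def probe_cuinit(lib_name="libcuda.so.1"):
    """Call cuInit(0) directly; return its CUresult, or None if the driver library is absent."""
    try:
        libcuda = ctypes.CDLL(lib_name)
    except OSError:
        # No NVIDIA driver library visible, e.g. a container started without GPU access.
        return None
    # The Flags argument of cuInit must currently be 0; ctypes returns the CUresult as an int.
    return int(libcuda.cuInit(0))


if __name__ == "__main__":
    code = probe_cuinit()
    if code is None:
        print("libcuda.so.1 not found")
    else:
        print(f"cuInit returned {code} ({CUDA_ERRORS.get(code, 'see cuda.h')})")
```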

Answer:

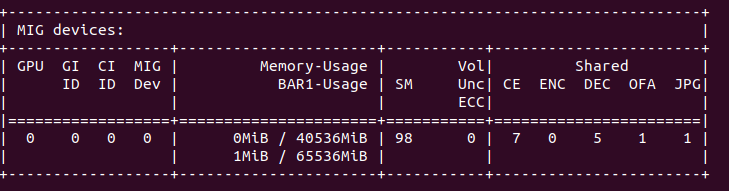

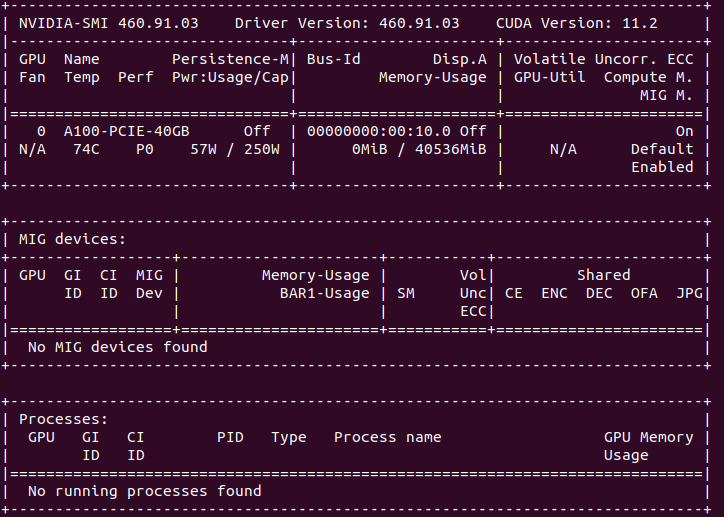

I realized that the GPU has the Multi-Instance GPU (MIG) feature:

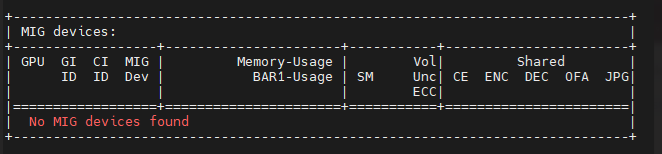

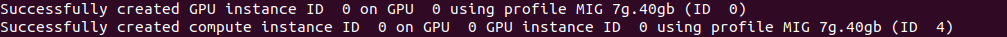

Therefore, a GPU instance (with its compute instance) has to be created first:

    sudo nvidia-smi mig -cgi 0 -C

and afterwards, calling `nvidia-smi` gives:

And the problem is solved!
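To check from a script whether any MIG devices exist, before or after running the command above, here is a minimal sketch that parses `nvidia-smi -L`. It assumes the output layout of recent drivers, where each MIG device appears as an indented line starting with `MIG` under its parent `GPU N:` line; this layout may differ between driver versions, and the helper names `mig_devices` and `list_gpus_and_mig` are hypothetical:

```python
import shutil
import subprocess


def mig_devices(listing):
    """Return the MIG device lines from `nvidia-smi -L` output.

    Assumes each MIG device is printed as an indented line beginning with
    "MIG", nested under its parent "GPU N:" line.
    """
    return [line.strip() for line in listing.splitlines() if line.strip().startswith("MIG ")]


def list_gpus_and_mig():
    """Run `nvidia-smi -L` if it is on PATH; return (gpu_lines, mig_lines), or None."""
    if shutil.which("nvidia-smi") is None:
        return None
    out = subprocess.run(["nvidia-smi", "-L"], capture_output=True, text=True).stdout
    gpus = [line.strip() for line in out.splitlines() if line.startswith("GPU ")]
    return gpus, mig_devices(out)


if __name__ == "__main__":
    result = list_gpus_and_mig()
    if result is None:
        print("nvidia-smi not found on PATH")
    else:
        gpus, migs = result
        print(f"{len(gpus)} GPU(s), {len(migs)} MIG device(s)")
        for line in migs:
            print("  ", line)
```

With MIG mode enabled, an empty MIG list would be consistent with the situation in the question: the physical GPU shows up, but no instance exists for CUDA to use.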