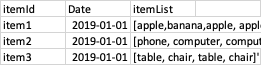

Here is a sample for my dataset. I would like to write Spark SQL to change the itemlist with duplicate values to unique values so for example 'apple, banana, banana" will be come 'apple, banana' I wrote the code here :

SELECT ItemId, Date, concat_ws(',',collect_set(CAST(SPLIT(PointNameArray, ','),ARRAY<STRING>))) AS VarN

FROM dataset

But I keep getting errors stating

cannot resolve 'concat_ws(',', collect_set(split(dataset.

PointNameArray, ',')))' due to data type mismatch: argument 2 requires (array or string) type, however, 'collect_set(split(dataset.PointNameArray, ','))' is of array<array>

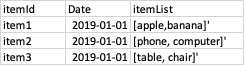

The result for itemlist is

apple, banana

phone, computer

table, chair

So the table should look like this

CodePudding user response:

You are getting the error :

cannot resolve 'concat_ws(',', collect_set(split(dataset.PointNameArray, ',')))' due to data type mismatch: argument 2 requires (array or string) type, however, 'collect_set(split(dataset.PointNameArray, ','))' is of array

because split already returns an array<string> and collect_set is treating this entire array as a single entry in the set, hence and array<array<string>>.

It should be noted that collect_set is an aggregate function that may be applied in a group by or as a window function on each row. Applying it on each row as a window function as is however will run the risk of the entire split array being considered as one element which is not the aim.

Moreover, when you split some sample data ('apple, banana, banana) by ,, some may have spaces ( ) preceding the value and banana is different from banana. To ensure we have unique values we can trim these.

Since you are using spark sql, the examples below will use spark-sql instead of the python/scala apis which could follow the same approach

Sample Dataframe before

------ ---------- ---------------------

|ItemId|Date |itemList |

------ ---------- ---------------------

|item1 |2019-01-01|apple, banana, banana|

------ ---------- ---------------------

Proposed Code

SELECT

ItemId,

Date,

concat_ws(',',collect_set(trim(isplit))) as VarN

FROM

df

LATERAL VIEW explode(SPLIT(itemList,',')) as isplit

GROUP BY ItemId, Date

Output After

------ ---------- ------------

|ItemId|Date |VarN |

------ ---------- ------------

|item1 |2019-01-01|apple,banana|

------ ---------- ------------

In the sample code above the data is:

- Split by

,usingSPLIT(itemList,',') - exploded into multiple rows using

explode(SPLIT(itemList,','))in a lateral view outputing

------ ---------- -------

|ItemId|Date |isplit |

------ ---------- -------

|item1 |2019-01-01|apple |

|item1 |2019-01-01| banana|

|item1 |2019-01-01| banana|

------ ---------- -------

- The data is then trimmed to remove spaces using

trim(isplit) - We then aggregate the data using

collect_setwhich will provide our unique values, grouping on the other columnsItemId, Date. NB. If you have many columns you may group by on the unique column egItemIdand simply useMAXon the others eg

SELECT

ItemId,

MAX(Date) as Date,

concat_ws(',',collect_set(trim(isplit))) as VarN

FROM

df

LATERAL VIEW explode(SPLIT(itemList,',')) as isplit

GROUP BY ItemId

- Finally we concatenate these values using

concat_ws

CodePudding user response:

I think you want to use array_distinct function not collect_set:

spark.sql("""

SELECT ItemId,

Date,

PointNameArray,

concat_ws(',',array_distinct(split(PointNameArray, ','))) AS VarN

FROM dataset

""").show(truncate=False)

# ------ ---------- ------------------------- --------------

#|ItemId|Date |PointNameArray |VarN |

# ------ ---------- ------------------------- --------------

#|item1 |2019-01-01|apple,banana,apple,banana|apple,banana |

#|item2 |2019-01-01|phone,computer,computer |phone,computer|

#|item3 |2019-01-01|table,chair,table,chair |table,chair |

# ------ ---------- ------------------------- --------------