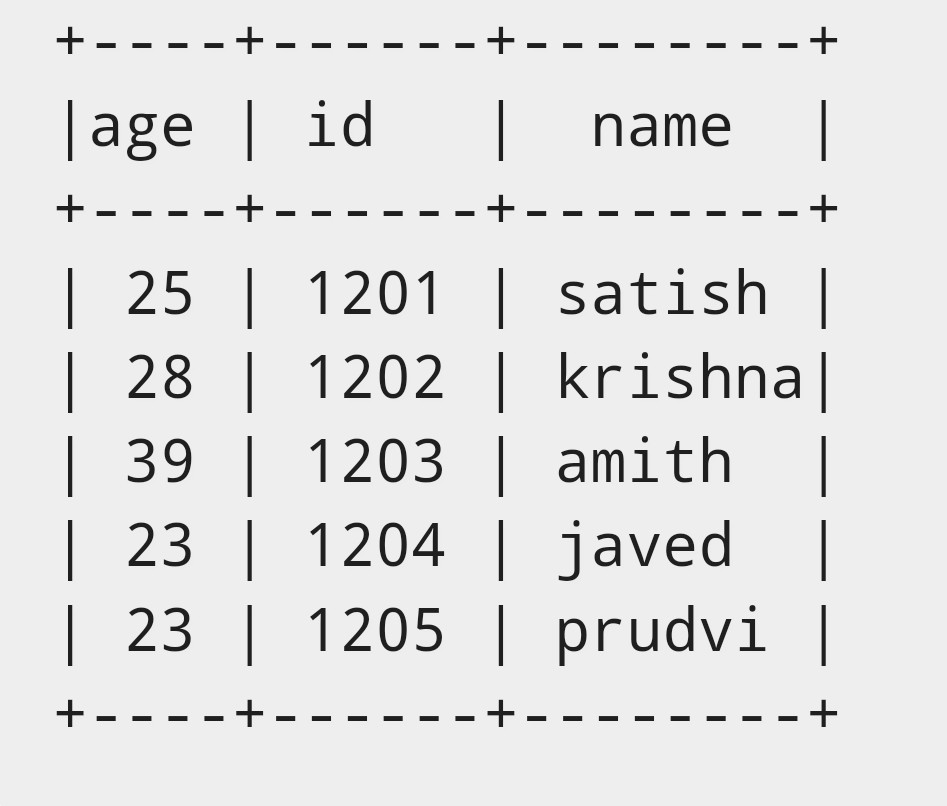

This is the dataframe I'm working with. I want to convert it to a dataset with the following format:

"Id":{

"Unique-id" : "id"

"Details" : {

"Person-name" : "name"

"Person-name" : "age"

}

}

CodePudding user response:

There are a couple of issues with your JSON.

- You can't have the same key "Person-name" twice within the nested object "Details".

- You are missing the outer curly braces.
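Both issues can be checked quickly with Python's standard `json` module. This is only a sketch of how one common parser reacts; Spark's own JSON reader may behave differently, and the strings below are trimmed copies of the question's example.

```python
import json

# The question's "Details" object as written: "Person-name" appears twice.
duplicated = '{"Person-name": "name", "Person-name": "age"}'

# Python's json module does not reject duplicate keys; the last value wins,
# so the first entry is silently lost.
parsed = json.loads(duplicated)
print(parsed)

# Without the outer curly braces the text is not a single JSON value.
no_braces = '"Id": {"Unique-id": "id"}'
try:
    json.loads(no_braces)
    braces_error = None
except json.JSONDecodeError as exc:
    braces_error = exc
print(type(braces_error).__name__)
```

The duplicate key is the more dangerous of the two, because nothing fails: the name is simply overwritten by the age.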

So the corrected JSON object should look like this:

{"Id": {"Unique-id": "id", "Details": {"Person-name": "name", "Person-age": "25"}}}

If so, the transformation is pretty straightforward:

from pyspark.sql import functions as F

# assumes an existing SparkSession named `spark`

df = (spark.read.json('test.json')

.withColumn('Unique-Id', F.col('Id.Unique-id'))

.withColumn('age', F.col('Id.Details.Person-age'))

.withColumn('name', F.col('Id.Details.Person-name'))

.drop('Id')

.withColumnRenamed('Unique-Id', 'id')

)
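To make the expected result concrete, here is the same flattening written in plain Python on a single record, without Spark. It is only an illustration of which nested path ends up in which output column; the `flatten` helper is a hypothetical name, not part of any Spark API.

```python
import json

# The corrected JSON object from above, parsed into nested dicts.
record = json.loads(
    '{"Id": {"Unique-id": "id", '
    '"Details": {"Person-name": "name", "Person-age": "25"}}}'
)

def flatten(rec):
    """Pull the three nested fields up into one flat dict."""
    inner = rec["Id"]
    details = inner["Details"]
    return {
        "id": inner["Unique-id"],        # Id.Unique-id
        "name": details["Person-name"],  # Id.Details.Person-name
        "age": details["Person-age"],    # Id.Details.Person-age
    }

flat = flatten(record)
print(flat)
```

Each key of the flat dict corresponds to one of the columns left in the DataFrame once the nested `Id` column has been dropped.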

CodePudding user response:

You just need to select the fields that you need. Assuming the following input DataFrame:

import org.apache.spark.sql.SparkSession

case class Top(Id: Inner)

case class Inner(`Unique-id`: String, Details: Details)

case class Details(`Person-name`: String, `Person-age`: Int)

val ss: SparkSession = ??? // placeholder: use your existing SparkSession here

import ss.implicits._

val df = Seq(

Top(Inner("1201", Details("satish", 25)))

).toDF

Calling df.printSchema() on it prints the following schema:

root

|-- Id: struct (nullable = true)

| |-- Unique-id: string (nullable = true)

| |-- Details: struct (nullable = true)

| | |-- Person-name: string (nullable = true)

| | |-- Person-age: integer (nullable = false)

You can construct your desired output with:

import org.apache.spark.sql.functions.col

df.select(

col("Id.Unique-id") as "id",

col("Id.Details.Person-name") as "name",

col("Id.Details.Person-age") as "age",

)