I need some assistance to undertand the various forms of logging in to Databricks. I am using Terraform to provision Azure Databricks I would like to know the difference in the two codes below When i use option 1, i get the error as shown

Option 1:

required_providers {

azuread = "~> 1.0"

azurerm = "~> 2.0"

azuredevops = { source = "registry.terraform.io/microsoft/azuredevops", version = "~> 0.0" }

databricks = { source = "registry.terraform.io/databrickslabs/databricks", version = "~> 0.0" }

}

}

provider "random" {}

provider "azuread" {

tenant_id = var.project.arm.tenant.id

client_id = var.project.arm.client.id

client_secret = var.secret.arm.client.secret

}

provider "databricks" {

host = azurerm_databricks_workspace.db-workspace.workspace_url

azure_use_msi = true

}

resource "azurerm_databricks_workspace" "db-workspace" {

name = module.names-db-workspace.environment.databricks_workspace.name_unique

resource_group_name = module.resourcegroup.resource_group.name

location = module.resourcegroup.resource_group.location

sku = "premium"

public_network_access_enabled = true

custom_parameters {

no_public_ip = true

virtual_network_id = module.virtualnetwork["centralus"].virtual_network.self.id

public_subnet_name = module.virtualnetwork["centralus"].virtual_network.subnets["db-sub-1-public"].name

private_subnet_name = module.virtualnetwork["centralus"].virtual_network.subnets["db-sub-2-private"].name

public_subnet_network_security_group_association_id = module.virtualnetwork["centralus"].virtual_network.nsgs.associations.subnets["databricks-public-nsg-db-sub-1-public"].id

private_subnet_network_security_group_association_id = module.virtualnetwork["centralus"].virtual_network.nsgs.associations.subnets["databricks-private-nsg-db-sub-2-private"].id

}

tags = local.tags

}

Databricks Cluster Creation

resource "databricks_cluster" "dbcselfservice" {

cluster_name = format("adb-cluster-%s-%s", var.project.name, var.project.environment.name)

spark_version = var.spark_version

node_type_id = var.node_type_id

autotermination_minutes = 20

autoscale {

min_workers = 1

max_workers = 7

}

azure_attributes {

availability = "SPOT_AZURE"

first_on_demand = 1

spot_bid_max_price = 100

}

depends_on = [

azurerm_databricks_workspace.db-workspace

]

}

Databricks Workspace RBAC Permission

resource "databricks_group" "db-group" {

display_name = format("adb-users-%s", var.project.name)

allow_cluster_create = true

allow_instance_pool_create = true

depends_on = [

resource.azurerm_databricks_workspace.db-workspace

]

}

resource "databricks_user" "dbuser" {

count = length(local.display_name)

display_name = local.display_name[count.index]

user_name = local.user_name[count.index]

workspace_access = true

depends_on = [

resource.azurerm_databricks_workspace.db-workspace

]

}

Adding Members to Databricks Admin Group

resource "databricks_group_member" "i-am-admin" {

for_each = toset(local.email_address)

group_id = data.databricks_group.admins.id

member_id = databricks_user.dbuser[index(local.email_address, each.key)].id

depends_on = [

resource.azurerm_databricks_workspace.db-workspace

]

}

data "databricks_group" "admins" {

display_name = "admins"

depends_on = [

# resource.databricks_cluster.dbcselfservice,

resource.azurerm_databricks_workspace.db-workspace

]

}

The error that i get while TF apply is below :

Error: User not authorized

with databricks_user.dbuser[1],

on resources.adb.tf line 80, in resource "databricks_user" "dbuser":

80: resource "databricks_user" "dbuser"{

Error: User not authorized

with databricks_user.dbuser[0],

on resources.adb.tf line 80, in resource "databricks_user" "dbuser":

80: resource "databricks_user" "dbuser"{

Error: cannot refresh AAD token: adal:Refresh request failed. Status Code = '500'. Response body: {"error":"server_error", "error_description":"Internal server error"} Endpoint http://169.254.169.254/metadata/identity/oauth2/token?api-version=2018-02-01&resource=https://management.core.windows.net/

with databricks_group.db-group,

on resources.adb.tf line 80, in resource "databricks_group" "db-group":

71: resource "databricks_group" "db-group"{

Is the error coming because of this block below ?

provider "databricks" {

host = azurerm_databricks_workspace.db-workspace.workspace_url

azure_use_msi = true

}

I just need to login automatically when i click on the URL from the portal. So what shall i use for that? And why do we need to provide two times databricks providers, once under required_providers and again in provider "databricks"? I have seen if i don't provide the second provider i get the error :

"authentication is not configured for provider"

CodePudding user response:

As mentioned in the comments , If you are using Azure CLI authentication i.e. az login using your username and password , then you can use the below code :

terraform {

required_providers {

databricks = {

source = "databrickslabs/databricks"

version = "0.3.11"

}

}

}

provider "azurerm" {

features {}

}

provider "databricks" {

host = azurerm_databricks_workspace.example.workspace_url

}

resource "azurerm_databricks_workspace" "example" {

name = "DBW-ansuman"

resource_group_name = azurerm_resource_group.example.name

location = azurerm_resource_group.example.location

sku = "premium"

managed_resource_group_name = "ansuman-DBW-managed-without-lb"

public_network_access_enabled = true

custom_parameters {

no_public_ip = true

public_subnet_name = azurerm_subnet.public.name

private_subnet_name = azurerm_subnet.private.name

virtual_network_id = azurerm_virtual_network.example.id

public_subnet_network_security_group_association_id = azurerm_subnet_network_security_group_association.public.id

private_subnet_network_security_group_association_id = azurerm_subnet_network_security_group_association.private.id

}

tags = {

Environment = "Production"

Pricing = "Standard"

}

}

data "databricks_node_type" "smallest" {

local_disk = true

depends_on = [

azurerm_databricks_workspace.example

]

}

data "databricks_spark_version" "latest_lts" {

long_term_support = true

depends_on = [

azurerm_databricks_workspace.example

]

}

resource "databricks_cluster" "dbcselfservice" {

cluster_name = "Shared Autoscaling"

spark_version = data.databricks_spark_version.latest_lts.id

node_type_id = data.databricks_node_type.smallest.id

autotermination_minutes = 20

autoscale {

min_workers = 1

max_workers = 7

}

azure_attributes {

availability = "SPOT_AZURE"

first_on_demand = 1

spot_bid_max_price = 100

}

depends_on = [

azurerm_databricks_workspace.example

]

}

resource "databricks_group" "db-group" {

display_name = "adb-users-admin"

allow_cluster_create = true

allow_instance_pool_create = true

depends_on = [

resource.azurerm_databricks_workspace.example

]

}

resource "databricks_user" "dbuser" {

display_name = "Rahul Sharma"

user_name = "[email protected]"

workspace_access = true

depends_on = [

resource.azurerm_databricks_workspace.example

]

}

resource "databricks_group_member" "i-am-admin" {

group_id = databricks_group.db-group.id

member_id = databricks_user.dbuser.id

depends_on = [

resource.azurerm_databricks_workspace.example

]

}

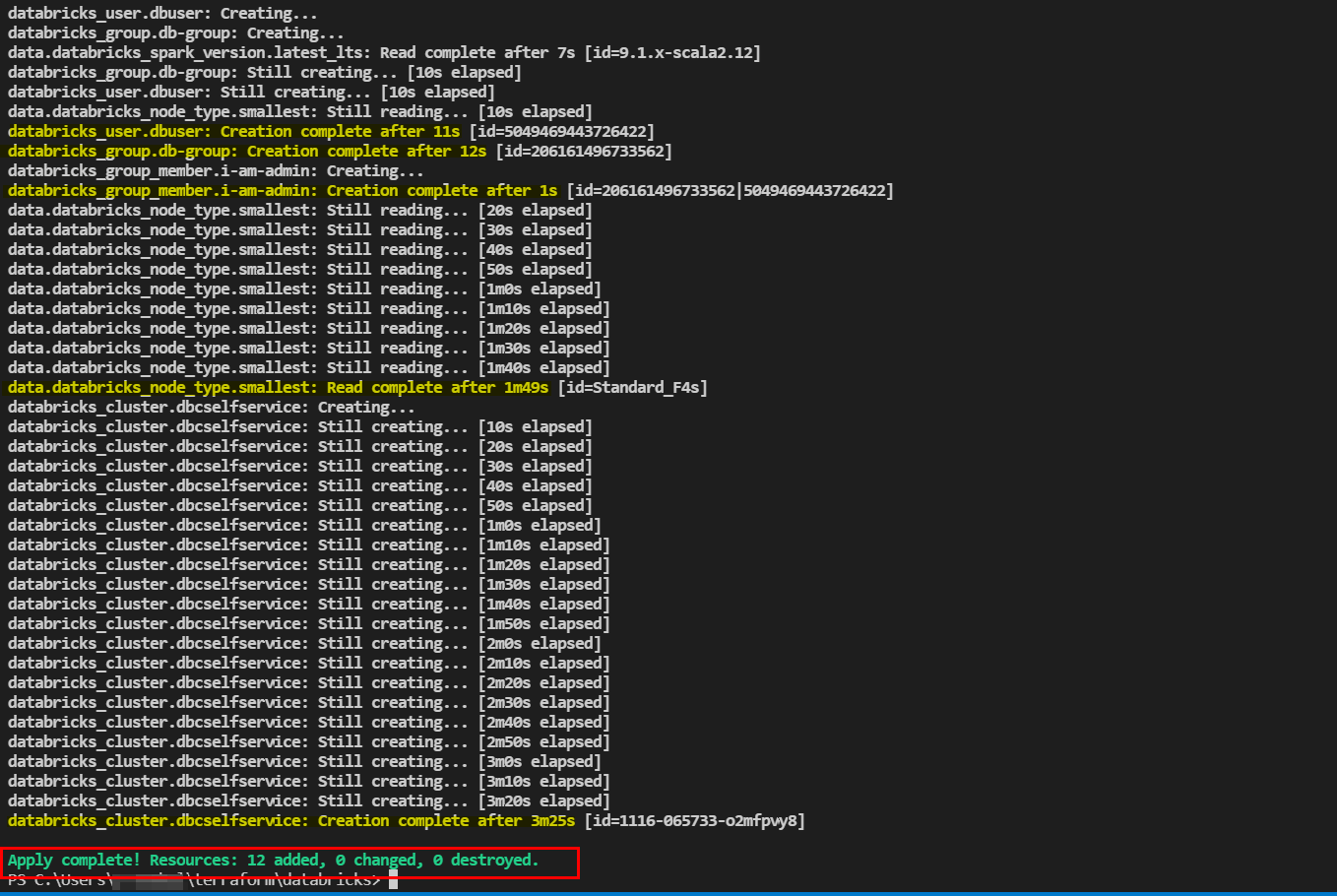

Output:

If you are using Service Principal as authentication , then you can use something like below:

terraform {

required_providers {

databricks = {

source = "databrickslabs/databricks"

version = "0.3.11"

}

}

}

provider "azurerm" {

subscription_id = "948d4068-xxxx-xxxx-xxxx-e00a844e059b"

tenant_id = "72f988bf-xxxx-xxxx-xxxx-2d7cd011db47"

client_id = "f6a2f33d-xxxx-xxxx-xxxx-d713a1bb37c0"

client_secret = "inl7Q~Gvdxxxx-xxxx-xxxxyaGPF3uSoL"

features {}

}

provider "databricks" {

host = azurerm_databricks_workspace.example.workspace_url

azure_client_id = "f6a2f33d-xxxx-xxxx-xxxx-d713a1bb37c0"

azure_client_secret = "inl7Q~xxxx-xxxx-xxxxg6ntiyaGPF3uSoL"

azure_tenant_id = "72f988bf-xxxx-xxxx-xxxx-2d7cd011db47"

}

resource "azurerm_databricks_workspace" "example" {

name = "DBW-ansuman"

resource_group_name = azurerm_resource_group.example.name

location = azurerm_resource_group.example.location

sku = "premium"

managed_resource_group_name = "ansuman-DBW-managed-without-lb"

public_network_access_enabled = true

custom_parameters {

no_public_ip = true

public_subnet_name = azurerm_subnet.public.name

private_subnet_name = azurerm_subnet.private.name

virtual_network_id = azurerm_virtual_network.example.id

public_subnet_network_security_group_association_id = azurerm_subnet_network_security_group_association.public.id

private_subnet_network_security_group_association_id = azurerm_subnet_network_security_group_association.private.id

}

tags = {

Environment = "Production"

Pricing = "Standard"

}

}

data "databricks_node_type" "smallest" {

local_disk = true

depends_on = [

azurerm_databricks_workspace.example

]

}

data "databricks_spark_version" "latest_lts" {

long_term_support = true

depends_on = [

azurerm_databricks_workspace.example

]

}

resource "databricks_cluster" "dbcselfservice" {

cluster_name = "Shared Autoscaling"

spark_version = data.databricks_spark_version.latest_lts.id

node_type_id = data.databricks_node_type.smallest.id

autotermination_minutes = 20

autoscale {

min_workers = 1

max_workers = 7

}

azure_attributes {

availability = "SPOT_AZURE"

first_on_demand = 1

spot_bid_max_price = 100

}

depends_on = [

azurerm_databricks_workspace.example

]

}

resource "databricks_group" "db-group" {

display_name = "adb-users-admin"

allow_cluster_create = true

allow_instance_pool_create = true

depends_on = [

resource.azurerm_databricks_workspace.example

]

}

resource "databricks_user" "dbuser" {

display_name = "Rahul Sharma"

user_name = "[email protected]"

workspace_access = true

depends_on = [

resource.azurerm_databricks_workspace.example

]

}

resource "databricks_group_member" "i-am-admin" {

group_id = databricks_group.db-group.id

member_id = databricks_user.dbuser.id

depends_on = [

resource.azurerm_databricks_workspace.example

]

}

And why do we need to provide two times databricks providers, once under required_providers and again in provider "databricks"?

The required_providers is used to download and initialize the required providers from the source i.e. Terraform Registry . But the Provider Block is used for further configuration of that downloaded provider like describing client_id, features block etc. which can be used for authentication or other configuration.

CodePudding user response:

The azure_use_msi option is primarily intended for use from CI/CD pipelines that are executed on machines with managed identity assigned to them. All possible authentication options are described in the documenation, but simplest way is to use authentication via Azure CLI, so you just need to leave host parameter in the provider block. If you don't have Azure CLI on that machine, you can use combination of host personal access token instead.

if you're running that code from the machine with assigned managed identity, then you need to make sure that this identity is either added into workspace, or it has Contributor access to it - see Azure Databricks documentation for more details.