My data set has the following shapes:

    >>> y_train.shape, y_val.shape
    ((265, 2), (10, 2))
    >>> x_train.shape, x_val.shape
    ((265, 4), (10, 4))

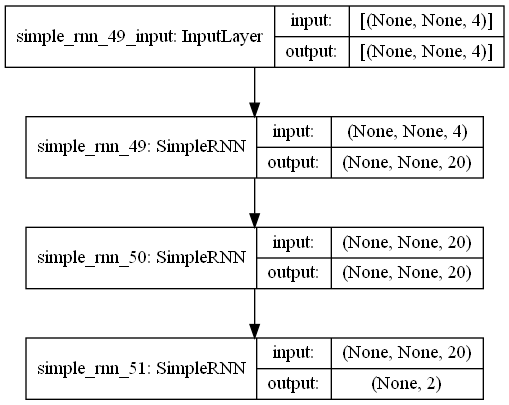

I'm trying to use a simple RNN model:

    model = models.Sequential([
        layers.SimpleRNN(20, input_shape=(None, 4), return_sequences=True),
        layers.SimpleRNN(20, return_sequences=True),
        layers.SimpleRNN(2),
    ])

    model.compile(optimizer="Adam",
                  loss=tf.keras.losses.MeanSquaredError(),
                  metrics=["accuracy"])

The problem shows up when I fit the model to the data:

    history = model.fit(x_train, y_train,
                        epochs=20,
                        validation_data=(x_val, y_val),
                        verbose=2)

I get the following error:

    ValueError: Input 0 of layer sequential_12 is incompatible with the layer: expected ndim=3, found ndim=2. Full shape received: (None, 4)

I think it's something related to the input... but I don't understand what.

Answer:

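First, the input to an RNN layer must be 3D, with shape [batch, timesteps, features].

Your x_train and x_val are 2D, (265, 4) and (10, 4), which is exactly what the error reports (expected ndim=3, found ndim=2). You can add the missing axis with np.expand_dims: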

    import numpy as np

    x_train = np.expand_dims(x_train, axis=-1)  # (265, 4, 1)
    x_val = np.expand_dims(x_val, axis=-1)      # (10, 4, 1)
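To confirm the reshape, here is a small sketch that uses random placeholder arrays in place of the real x_train and x_val (the values are made up; only the shapes come from the question):

```python
import numpy as np

# Placeholder data with the shapes from the question:
# 265 training and 10 validation samples, each with 4 values.
x_train = np.random.rand(265, 4)
x_val = np.random.rand(10, 4)

# Add a trailing feature axis: each of the 4 values becomes one timestep
# with a single feature, giving [batch, timesteps, features].
x_train = np.expand_dims(x_train, axis=-1)
x_val = np.expand_dims(x_val, axis=-1)

print(x_train.shape, x_val.shape)  # (265, 4, 1) (10, 4, 1)
```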

The second problem is input_shape. It excludes the batch dimension, so with the new shape of x_train and x_val it must be input_shape=(4, 1), meaning 4 timesteps with 1 feature each. The corrected definition is:

    from tensorflow.keras import layers, models

    model = models.Sequential([
        layers.SimpleRNN(20, input_shape=(4, 1), return_sequences=True),
        layers.SimpleRNN(20, return_sequences=True),
        layers.SimpleRNN(2),
    ])
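Reshaping with axis=-1 treats the 4 columns as a sequence of 4 timesteps with 1 feature each. If the 4 columns are instead 4 separate features observed at a single point in time (an assumption about the data that only the asker can confirm), a different reshape keeps the question's original input_shape=(None, 4): one timestep with 4 features. A sketch with placeholder data:

```python
import numpy as np

x_train = np.random.rand(265, 4)  # placeholder for the real data

# Insert a length-1 time axis in the middle:
# (samples, 1 timestep, 4 features).
x_train_single_step = x_train[:, np.newaxis, :]
print(x_train_single_step.shape)  # (265, 1, 4)

# This matches input_shape=(None, 4): a variable number of timesteps
# (here just 1), each with 4 features.
```

With a single timestep the recurrent layers have only one step to process, so this layout mainly makes sense if the rows are not really sequences; which layout is right depends on what the four columns represent.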

input_shape never includes the batch dimension; Keras adds it as None for you. If you want to state the batch dimension explicitly, pass batch_input_shape instead:

    import tensorflow as tf

    model = tf.keras.Sequential([
        tf.keras.layers.SimpleRNN(20, batch_input_shape=(None, 4, 1),
                                  return_sequences=True),
        tf.keras.layers.SimpleRNN(20, return_sequences=True),
        tf.keras.layers.SimpleRNN(2),
    ])

The None in the first position means the model accepts any batch size, so this is equivalent to input_shape=(4, 1).

Note: if you fix the batch size, for example batch_input_shape=(32, 4, 1), the model will raise an error on any final batch that has fewer than 32 samples.
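To see why this matters here, this sketch works out the batch sizes for the 265 training samples, assuming batch_size=32 (the default batch size for model.fit):

```python
# Split 265 samples into batches of 32, the way a training loop iterates.
n_samples = 265
batch_size = 32

full_batches, remainder = divmod(n_samples, batch_size)
sizes = [batch_size] * full_batches + ([remainder] if remainder else [])

print(full_batches, remainder)  # 8 9
print(sizes[-1])                # 9
```

The last batch holds only 9 samples, which would not match a fixed batch dimension of 32.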