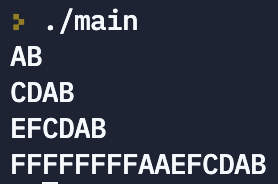

I want to compose the number 0xAAEFCDAB from individual bytes. Everything goes well until the last step, where for some reason 4 extra bytes are added to the result. What am I doing wrong?

#include <stdio.h>

int main(void) {
    unsigned long int a = 0;

    a = a | ((0xAB) << 0);
    printf("%lX\n", a);

    a = a | ((0xCD) << 8);
    printf("%lX\n", a);

    a = a | ((0xEF) << 16);
    printf("%lX\n", a);

    a = a | ((0xAA) << 24);
    printf("%lX\n", a);

    return 0;
}

Output:

CodePudding user response:

a = a | ((0xAA) << 24);

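0xAA has type int, so ((0xAA) << 24) is computed as an int. With a 32-bit int the result does not fit (strictly speaking, the behavior is undefined), and in practice you get a negative number. That negative int is then converted to unsigned long int, which is 64 bits wide here, and the sign extension adds those 0xffffffff at the beginning.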
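The widening step can be looked at in isolation. The sketch below is only an illustration: `widen_signed` and `widen_unsigned` are hypothetical helper names, and fixed-width types from `<stdint.h>` are used so that the result does not depend on the platform's size of long.

```c
#include <stdint.h>

/* Hypothetical helper: converts a signed 32-bit value straight to unsigned 64-bit.
   A negative input becomes value + 2^64, so the upper 32 bits are all ones. */
uint64_t widen_signed(int32_t value) {
    return (uint64_t)value;
}

/* Hypothetical helper: goes through an unsigned 32-bit type first,
   so the upper 32 bits of the result are zero. */
uint64_t widen_unsigned(int32_t value) {
    return (uint64_t)(uint32_t)value;
}
```

Passing -1442840576 (the int with bit pattern 0xAA000000) to `widen_signed` gives 0xFFFFFFFFAA000000, while `widen_unsigned` gives 0x00000000AA000000.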

You need to tell the compiler that you want an unsigned number.

a = a | ((0xAAU) << 24);

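#include <stdio.h>

int main(void) {
    unsigned long int a = 0;

    a = a | ((0xAB) << 0);
    printf("%lX\n", a);

    a = a | ((0xCD) << 8);
    printf("%lX\n", a);

    a = a | ((0xEF) << 16);
    printf("%lX\n", a);

    /* UL makes the constant unsigned long, so nothing is shifted into a sign bit */
    a = a | ((0xAAUL) << 24);
    printf("%lX\n", a);

    /* the original int expression, printed to show that it comes out negative */
    printf("%d\n", ((0xAA) << 24));

    return 0;
}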

https://gcc.godbolt.org/z/fjv19bKGc

CodePudding user response:

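0xAA is an int constant, so the shift is done in signed int arithmetic. Shifting it left by 24 moves its high bit (0xAA = 10101010b) into the sign bit of a 32-bit int, so the result 0xAA000000 is treated as a negative value. When that value is widened to unsigned long for the OR with a, it is sign extended to 0xFFFFFFFFAA000000.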

You need to cast 0xAA to an unsigned value before bit shifting it, so it gets zero extended instead.
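One way to apply the cast consistently is to wrap the composition in a small function. This is a minimal sketch, not the only way to do it: `compose_u32` is a hypothetical helper name, and `uint32_t` is an assumption chosen so that every shift happens in an unsigned 32-bit type.

```c
#include <stdint.h>

/* Hypothetical helper: builds a 32-bit value from four bytes, most significant first.
   Each byte is cast to uint32_t before shifting, so no shift touches a sign bit. */
uint32_t compose_u32(uint8_t b3, uint8_t b2, uint8_t b1, uint8_t b0) {
    return ((uint32_t)b3 << 24) |
           ((uint32_t)b2 << 16) |
           ((uint32_t)b1 << 8)  |
            (uint32_t)b0;
}
```

With this, `unsigned long a = compose_u32(0xAA, 0xEF, 0xCD, 0xAB);` followed by `printf("%lX\n", a);` prints AAEFCDAB, because the unsigned 32-bit result is zero extended when it is assigned to a.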